Introduction

The Instructional Design for eLearning Challenge highlights several features of the latest LodeStar 7.0 build. This article explores those features and exposes some of the design decisions made in constructing the challenge.

First, the topic of instructional design for eLearning is immensely broad. Complicating the field is each designer’s own philosophy of the nature and scope of knowledge. Nevertheless, we can assume an instructional designer’s vocabulary includes Benjamin Bloom’s taxonomy, Robert Gagné’s Events of Instruction, an understanding of key terms and practices in eLearning design, and an acquaintance with some of the theories that inform design. I organized this challenge after attempting to survey the discourse among designers and to capture some of the key terms, practices and even theories communicated in that discourse.

Digital Badges

In designing the challenge, I decided to award a digital badge to recognize a participant’s accomplishment in achieving 85% mastery of the content. I named this badge ‘eLearning Terms’ because all of the questions in the challenge can be categorized as declarative knowledge. Even the identification of a theory is no more than declarative knowledge. The badge, in other words, certifies nothing more than one’s knowledge of terms that are used in the field of eLearning design.

For designing and hosting a badge, I had several options. One was to stage the badge issuing process following the Mozilla Open Badge Infrastructure. Another was to use a known badge issuer like Basno. Basno.com, for example, is the badge issuer for the completion of the Boston Marathon.

The Mozilla Open Badge Infrastructure requires LodeStar Learning to host the badge issuing process on its own servers, which we may very well do in the future. The Basno process was much easier to get up and running quickly. One creates a badge and enables participants to apply for the badge.

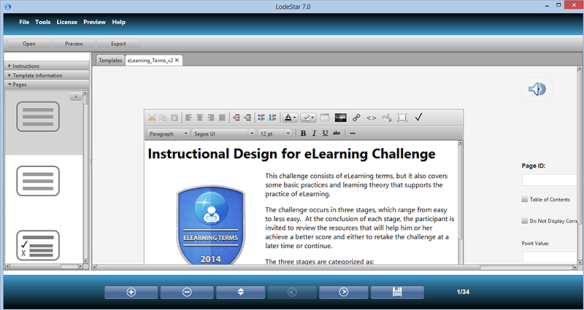

ActivityMaker Template

For this challenge, I decided to use the ActivityMaker template. An instructor authoring with LodeStar selects a template, converts it into a project, adds content, saves, previews and then exports the activity to a Learning Management System or anywhere on the web or in the cloud. I chose ActivityMaker because it is a Swiss Army knife: at the time of this writing, I can use one template to incorporate many different types of activities. The ActivityMaker template supports the following:

- Text with embedded questions and images

- Images

- Multiple Choice

- Multiple Select

- Matching

- Categorizing activity

- Short Answer, with regular expression support

- Branching at multiple levels, answers, page scores, and section scores

- Menu

- Long Answers (Open Answers)

- Interview Questions (for interactive scenarios and case studies)

- Video

- Flashcards

- Journals (compiles all of the Open Answers into a one-page report)

In addition, I can embed Web 2.0 content on any text page.
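The regular expression support listed for Short Answer can be illustrated with a small sketch. How LodeStar itself evaluates patterns is not described here, so the pattern and the function name below are hypothetical and only show the general idea of accepting several spellings of one answer.

```python
import re

# Hypothetical pattern (not taken from LodeStar): accepts "Gagne" or "Gagné",
# optionally preceded by "Robert", ignoring case and surrounding spaces.
ANSWER_PATTERN = re.compile(r"^\s*(robert\s+)?gagn[eé]\s*$", re.IGNORECASE)


def is_correct(response: str) -> bool:
    """Return True when the typed response matches the accepted pattern."""
    return ANSWER_PATTERN.match(response) is not None


for attempt in ["Robert Gagne", "gagné ", "Bloom"]:
    print(attempt, "->", is_correct(attempt))
```

A single pattern like this spares the author from listing every acceptable variant of a short answer by hand.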

On the Use of Gates

The next decision was whether or not to create levels for the challenge with performance gates. LodeStar enables authors to create performance gates based on absolute points or percentages. Users who meet or exceed the performance score threshold branch in one direction; users who fail to meet the threshold branch in a different direction.
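The gate logic described above can be sketched in a few lines. This is an illustration of the idea only, not LodeStar's implementation; the function names, parameters and page names are assumptions made for the example.

```python
def gate_passes(score, max_score, threshold, use_percentage):
    """Decide whether a score meets or exceeds the gate threshold.

    With use_percentage=True the threshold is read as a percentage of
    max_score; otherwise it is read as an absolute number of points.
    """
    if use_percentage:
        if max_score <= 0:
            return False
        # Compare score/max_score >= threshold/100 without dividing,
        # which avoids floating-point rounding at the boundary.
        return score * 100 >= threshold * max_score
    return score >= threshold


def next_page(score, max_score, threshold, use_percentage,
              pass_page="success", fail_page="failure"):
    """Return the (hypothetical) page name for the branch the user follows."""
    if gate_passes(score, max_score, threshold, use_percentage):
        return pass_page
    return fail_page


print(next_page(17, 20, 85, use_percentage=True))   # 17 of 20 points is 85%
print(next_page(16, 20, 85, use_percentage=True))   # 16 of 20 points is 80%
print(next_page(16, 20, 15, use_percentage=False))  # absolute threshold of 15
```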

For this challenge, I decided to create levels, but not gates between them. At the conclusion of each level, the participant sees his or her score and receives a list of resources that match missed items. At that point, the participant can stop the challenge and review the resources or continue on. At the conclusion of the challenge, the participant is branched to the success page, which provides badge information, or to the failure page.

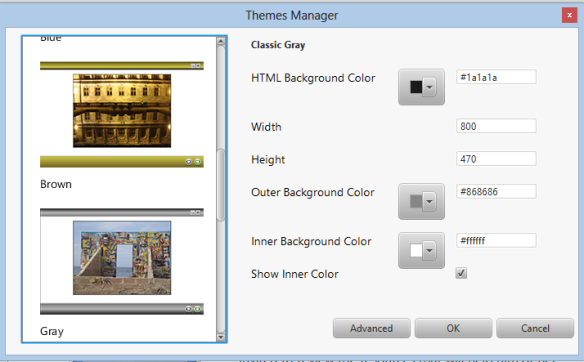

Themes

Before I started entering the questions, I decided on a theme. Starting with Build 22, LodeStar introduces new themes that are fully configurable. If you are working on an older project, please apply a theme to the project. This will lead to faster downloads and an improved experience.

I also applied a transition effect to the project. The tool offers a variety of effects that govern how one page gives way to the next.

Answer Types (Page Layouts)

For the questions themselves, the authoring tool gives the option not to reveal the answers. I chose to reveal the answers. If I had chosen not to disclose the answers, I would have needed to redirect participants to resources where they can learn the answers in order to retake the challenge. In some contexts, this would have been preferable.

Now to the content.

The introduction is a Text page type.

The first question was constructed with the layout type of Question (Simple Layout). With this layout type, I could have chosen to provide unique feedback for every distractor (answer option). Instead, I used a new LodeStar feature, which is to offer page-level feedback. If the question were answered incorrectly, the feedback would display a resource that would help the participant learn more about the term, practice or theory.

Regardless of the layout type, I assigned each question either 1, 2 or 5 points to match the level of difficulty or effort required to answer the question.
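To show how weighted questions roll up into a percentage, here is a minimal sketch. The question labels and the results are made up for illustration; only the 1, 2 and 5 point weights come from the challenge itself.

```python
from dataclasses import dataclass


@dataclass
class Question:
    label: str      # hypothetical identifier for the question
    points: int     # 1, 2 or 5, matching difficulty or effort
    correct: bool   # whether the participant answered correctly


def percent_score(questions):
    """Return earned points as a percentage of possible points."""
    possible = sum(q.points for q in questions)
    earned = sum(q.points for q in questions if q.correct)
    return 100 * earned / possible if possible else 0.0


# Made-up results: one easy and one medium question right, one hard one missed.
results = [
    Question("easy term", 1, True),
    Question("practice", 2, True),
    Question("theory", 5, False),
]
print(percent_score(results))  # 3 of 8 possible points
```

Because a missed 5-point question costs more than a missed 1-point question, two participants with the same number of correct answers can end up on opposite sides of the 85% threshold.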

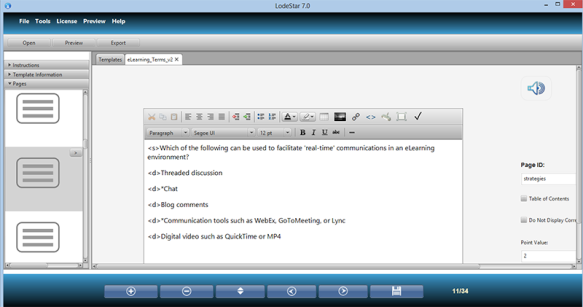

In Level Two, I created slightly more involved question types. I used the Text layout but added questions to the text page by using simple markup.

The markup is <s> for question stem, the question itself.

<d> is for distractor, or answer option.

<d>* indicates the correct answer.

The following screenshot shows the markup in action. The markup allows for any number of distractors and any content, including images and mathematics markup using MathML.
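For readers who want to see how such markup might be interpreted, here is a rough parsing sketch. It assumes each tag starts its own line and has no closing tag, which the article does not confirm; the class and function names are hypothetical and this is not LodeStar's parser.

```python
from dataclasses import dataclass, field


@dataclass
class ParsedQuestion:
    stem: str
    options: list = field(default_factory=list)  # answer option texts
    correct: list = field(default_factory=list)  # indexes of correct options


def parse_questions(text):
    """Collect <s> stems and their <d> options from a page of text.

    Assumption: one tag per line, with <d>* marking a correct option.
    Lines without a tag are treated as ordinary page text and skipped.
    """
    questions = []
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("<s>"):
            questions.append(ParsedQuestion(stem=line[3:].strip()))
        elif line.startswith("<d>") and questions:
            body = line[3:]
            current = questions[-1]
            if body.startswith("*"):
                current.correct.append(len(current.options))
                body = body[1:]
            current.options.append(body.strip())
    return questions


sample = """Read the passage, then answer.
<s> Which framework classifies learning objectives?
<d>* Bloom's taxonomy
<d> Events of Instruction
"""
for q in parse_questions(sample):
    print(q.stem, q.options, q.correct)
```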

To give participants an update on their score and to compile the corrective feedback in one place, I added report pages. You may notice, if you take the challenge, that each report page displays an error pop-up message. This message occurs when LodeStar attempts to send the score to the underlying Learning Management System. In this case, there is no Learning Management System. I could have chosen to make the error silent, but I wanted to display a LodeStar object at work trying to communicate the participant’s score.

In Level Three of the Challenge, I included some categorization and matching questions. To generate these, authors simply type in the question or the directions and then type in a term on the left and its match on the right. LodeStar then randomly rearranges the matches on the right.
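The random rearrangement of the right-hand column can be pictured with a short sketch. The term pairs and the function name are invented for the example, and the shuffle shown is simply Python's standard one, not necessarily what LodeStar uses.

```python
import random


def build_matching(pairs, rng=None):
    """Return (left_terms, shuffled_matches, answer_key) for a matching page.

    The left column keeps the author's order; only the right column is
    shuffled. The answer key remembers which match belongs to which term.
    """
    if rng is None:
        rng = random.Random()
    left = [term for term, _ in pairs]
    right = [match for _, match in pairs]
    rng.shuffle(right)
    answer_key = dict(pairs)
    return left, right, answer_key


# Invented pairs, typed the way an author would enter them: term, then match.
pairs = [
    ("Bloom", "Taxonomy of objectives"),
    ("Gagné", "Events of Instruction"),
    ("Badge", "Recognition of accomplishment"),
]
left, right, key = build_matching(pairs)
print(left)
print(right)
```

Keeping the answer key separate from the displayed order is what lets the author type each pair side by side without giving the answer away.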

Finally, we have the Gate that decides whether or not the participant gets the badge application information. If the participant scored 85% or above, the badge information displays. If not, the gate branches to an apologetic message.

In the screenshot below, we see that the Gate plays multiple roles.

From top to bottom: the instructor uses a Gate to decide whether or not the score is reset. The instructor, for example, can decide to branch the user to a remedial section, with a reset score so that the student can try again.

I didn’t reset the score.

Next, the Gate offers the option to enable the Back and Next buttons. By default, the Gate disables both buttons unless the instructor explicitly allows them.

The Gate supports custom scores. The instructor can choose to track a certain category of questions and then branch based on a custom variable assigned to a group of questions. I could have chosen to assign a score to a custom variable called ‘Basic’ versus ‘Intermediate’ or ‘Advanced’. Level One questions could have appended a value to ‘Basic’. Level Two questions could have appended a value to ‘Intermediate’ and so on. At some point, I could have branched on the variable. But I didn’t.
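The custom-variable idea can be sketched as a small score table. This is a guess at the behavior, not LodeStar's code: it assumes that appending a value adds it to a running total, that names are case sensitive, and that a reset sets a variable back to zero. The class name, method names and the threshold of 3 are all hypothetical.

```python
from collections import defaultdict


class CustomScores:
    """Hypothetical store for named scores such as 'Basic' or 'Advanced'."""

    def __init__(self):
        self._values = defaultdict(int)  # unknown names start at 0

    def set_value(self, name, value):
        """Overwrite the variable with a new value."""
        self._values[name] = value

    def append_value(self, name, value):
        """Assumed meaning of 'append': add to the running total."""
        self._values[name] += value

    def get(self, name):
        return self._values[name]

    def reset(self, names_csv):
        """Reset every variable named in a comma-separated list."""
        for name in names_csv.split(","):
            self._values[name.strip()] = 0


scores = CustomScores()
scores.append_value("Basic", 1)         # a Level One question answered
scores.append_value("Basic", 1)
scores.append_value("Intermediate", 2)  # a Level Two question answered

# Branch on one category rather than on the overall score.
branch = "remedial" if scores.get("Basic") < 3 else "continue"
print(branch)
```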

Gates decide whether students pass or fail. If they pass, they follow the branch options spelled out in ‘Pass Message/Branch Options’. If they fail, they follow the branch options spelled out in ‘Fail Message/Branch Options’. The options are found under the blue ‘branch’ button.

The Gate also allows students to go down the Pass branch regardless of score.

In my case, I typed in ‘85’ and checked off ‘Use Percentage’. This means that students will follow the Pass branch only if they scored 85% or greater.

Finally, if I had wanted to reset a custom variable, I would have typed in the name of the variable (case sensitive) in the field labeled ‘Reset comma-separated List of Variables’.

The branch options include the ability to display feedback and to branch or do the following:

- No action

- Go To Next Page

- Go to Previous Page

- Jump To Page

- Open URL

- Add Overlay

- Set Value

- Append Value

The last two options relate to the custom variables or scores to which I referred earlier.

Conclusion

In conclusion, the current build of LodeStar 7 allows me to select a nice theme and modify it, choose a page transition, choose from many different page types, report scores, and branch according to performance.

The current build also includes an improved audio player and the ability to randomize distractors (answer options).

So, we’re moving in the right direction. Support us. Let us hear from you. Make comments. Tell others.

Finally, take the challenge at http://lodestarlearning.com/samples/eLearning_Terms_v2/index.htm