Introduction

A nursing instructor is challenged to design an online course on standard classification systems. One could easily picture the course defining a classification system and describing its benefits. Students may be asked to compare and contrast how each system classifies problems, treatments and outcomes. The course may feature presentations, discussions, papers, a final exam – content items that are common in today’s online course.

It’s easy to be complacent about this sort of design. After all, the information is clearly presented, and students respond well in the discussions, in their papers and on the final.

What more is needed?

The argument in favor of additional instructional components is difficult to make. Motivating videos may be hard to find or expensive to produce. Occasional checks for understanding in the form of multiple choice, multiple select and short answer questions require additional time and skill in using the learning management system or third-party tools. Interactive cases that feature stories and make the content come alive are even more time-consuming and dependent on instructional and/or technical skills. In the case of standardized classification systems, cases may be a great way to ensure consistent use of the systems (i.e., inter-rater reliability).

CC BY-SA 3.0 Nick Youngson

Designing and developing activities for your online course that effectively engage students with the content takes time and know-how. It is easy to be skeptical about their value. You have precious little time. And you may not be certain that it is worth the time and effort. As a higher ed instructor, it is likely that no one pays you for the extra time and effort. The motivation comes intrinsically from successful students and a job well done. Or should we just concede this area of development to the publishers? They obviously have the skill and resources and economies of scale.

I am hoping you will reject that thought. You already apply time and know-how when putting together an online course. You make dozens of decisions. You make decisions related to selecting and sequencing content, organizing content, deciding on wording and style, and choosing media.

But is this where we should draw the line and not attempt designing activities that sponge up time without much evidence of return on investment? Or is the evidence there and we just don’t know it? What does the research tell us? Does it make a compelling case in favor of the extra effort?

Do we even know what is effective?

Instructional designers were asked what learning activities they would build for each level of Bloom’s taxonomy. (Benjamin Bloom, as you’ll recall, categorized goals of the learning process in six levels: knowledge, comprehension, application, analysis, synthesis and evaluation.) Getting back to the instructional designers, there was remarkable consistency among them regardless of age, gender and other factors. For lower levels of the taxonomy like knowledge and comprehension, designers chose drill and practice, programmed tutorials, demonstrations, and simulations. For higher levels, they chose such interactions as problem-solving labs and case studies. The research tells us that, at least, there is a common practice – but is there evidence for it?

Let’s survey the research to decide if instructional designers were even on the right track. Do interactions make a difference? We’ll examine learning interactions at the most elementary level and then climb higher and see what the research has to say about higher order activities.

What is a learning interaction?

An interaction in this context is characterized by the contact between students and the materials of study. The contact involves student responses to stimuli (multiple choice, multiple select questions), practice and feedback (puzzles and flashcards), branched instruction (decision-making scenarios, interactive case studies), categorization (matching, sorting, and ordering activities), analysis (review of text with open feedback, underlining, circling), manipulation of inputs and outputs (simulations, controllable animations, digital lab experiments), finding information (WebQuests), solving problems (problem-based learning scenarios), evaluating (decision-making) and creating (proposals, diagrams, digital drawings, code-writing). For the purposes of this post, we’re less interested in the passive reading of text, or the watching of and listening to media. I’ll concede, though, that a broader technical definition would include any activity that results in cognitive change such as the recall of prior knowledge and the acquisition of new knowledge.

Research — Buyer beware

To return to our research quest, I’ll admit that I often look to others for the interpretation of what is significant and meaningful. Reviewing research takes time and skill. Nevertheless, I am drawn to the analyses, the statistical methods, the inferences and the statement of results. Using educational research can be tricky. There are caveats. Single papers can offer us the wrong conclusions or may not be applicable to our situation. Compared to a drug study or a health-related study, sample sizes in educational research seem small and the results not generalizable.

Finding educational research that uses a control group is a helpful first step. A control group of students is separated from an experimental group in such a way that the thing being tested cannot influence the control group’s results. Finding such research can be a challenge. Here is the kind of statement we see in educational research.

On analysis, the comparison of the overall knowledge scores pre- and post-treatment showed a statistically significant increase from 8.8 to 11.6 (P< 0.001).

Educators claim victory because post-test scores were better after a treatment than before. That raises the question: compared to what? Perhaps the treatment was the most inefficient and uneconomical means on the planet to raise those test scores.

A simple t-test may be preferable when we compare the difference between a pre- and post-test for an experimental group with the difference between a pre- and post-test for the control group. For an explanation of t-tests, please visit: http://blog.minitab.com/blog/adventures-in-statistics-2/understanding-t-tests:-1-sample,-2-sample,-and-paired-t-tests
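As a rough illustration of that comparison, here is a minimal Python sketch. The scores are invented, and the choice of Welch’s two-sample t statistic is my assumption rather than something prescribed by the studies discussed here. A paired test looks at one group of students before and after; comparing the gains of two separate groups calls for a two-sample test.

```python
from math import sqrt
from statistics import mean, variance


def gain_scores(pre, post):
    """Per-student gain: post-test score minus pre-test score."""
    return [after - before for before, after in zip(pre, post)]


def welch_t(sample_a, sample_b):
    """Welch's t statistic for two independent samples."""
    standard_error = sqrt(
        variance(sample_a) / len(sample_a) + variance(sample_b) / len(sample_b)
    )
    return (mean(sample_a) - mean(sample_b)) / standard_error


# Hypothetical scores, invented purely for illustration.
treatment_pre = [8, 9, 7, 10, 9, 8]
treatment_post = [12, 13, 10, 14, 12, 11]
control_pre = [9, 8, 8, 10, 7, 9]
control_post = [10, 10, 9, 11, 9, 10]

treatment_gain = gain_scores(treatment_pre, treatment_post)
control_gain = gain_scores(control_pre, control_post)

# A large t statistic suggests the treatment group gained more than the
# control group did, beyond what ordinary variation would explain.
t_statistic = welch_t(treatment_gain, control_gain)
print(f"mean gain (treatment): {mean(treatment_gain):.2f}")
print(f"mean gain (control):   {mean(control_gain):.2f}")
print(f"Welch t statistic:     {t_statistic:.2f}")
```

The point of the sketch is the shape of the question. Both groups improve, so a pre/post comparison alone would flatter the treatment; only the difference between the two gains tells us what the treatment added.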

If I am looking at the effectiveness of a particular treatment, I will look for research related to higher education and my discipline of interest where the treatment is represented by the independent variable. The dependent variable might be, for example, student performance on a test, student satisfaction measured with a survey, or student time on task. Experiments with pre- and post-tests without control groups can be problematic. A classic example is that of Herman the Bug.

In our shop, we often ask this question: Should we include an animated character to serve as a guide or aid? In a project that I worked on years ago, we developed an amusing little character who would zip around the screen and guide the student along the learning path. The character was fun. It livened up the presentations. But the decision was based on no research at all.

Research literature calls this the persona effect. A paper titled The Persona Effect: Affective Impact of Animated Pedagogical Agents concluded that the potential of such agents to increase learning effectiveness is significant.

The researchers surveyed students who rated Herman the Bug’s entertainment value, helpfulness compared to a science teacher and so forth. Since this paper was published, 15 studies have been done: 9 showed no effect, 5 showed mixed results and only 1 showed an effect (Steffi Heidig and Geraldine Clarebout, Do pedagogical agents make a difference to student motivation and learning?).

To be fair, the research on Herman the Bug cited the following benefit:

Because these agents can provide students with customized advice in response to their problem-solving activities, their potential to increase learning effectiveness is significant.

One wonders, ‘Is it the persona effect that is contributing to greater motivation and improved learning or is it the feedback that the bug provides?’ Feedback is feedback, even when it’s delivered by a bug.

I point this out to underscore the difficulty of research and the folly of relying on one study. There are, of course, other problems that people cite about educational research in particular. Examples include research that is not objective and has a hidden agenda; over-generalization from research focused on a very specific context; and frequent lack of peer review.

Common sense often exposes the weakest research – which seeks to promote a philosophy or product or particular point of view.

This blog is an example. I have a vested interest in instructors choosing to design their own interactions – because I am the creator of an eLearning authoring tool. But in my defense, there are two critical points. First, I developed the authoring tool because of a belief that learning interactions make a positive difference. And, more importantly related to this post, I was quite prepared and open to research that reported on the insignificance and possible detriment of learning interactions. The truth is that learning how to create compelling and effective learning interactions — let alone creating them — takes time. It takes more time than we typically dedicate to this kind of training. If I were to convince instructors to make the investment, I had to be certain of its benefit.

In the 80s, Michael Moore described student to content interaction as “a defining characteristic of education” and “without it there cannot be education”. That’s not to say that student-to-student and student-to-instructor interactions aren’t important. Much has been written about them and there are many best practice examples. I value the blending of all three types of interactions in an online course – but my line of inquiry had me questioning the importance of student-to-content interactions, specifically, and investigating their importance.

The Research

I asked what interactions are important in an online environment and what level of development effort begins to produce diminishing results. I’ll cover the first part in this blog post and the second part in a future post.

One piece of research (mentioned in the previous journal entry) is described in a paper called Effects of Instructional Events in Computer-Based Instruction, conducted by a group from Arizona State University. In traditional curriculum and design programs taught in the 80s and 90s, Robert Gagne’s Conditions of Learning was gospel. He proposed nine essential elements of instruction. Well, what would happen if we removed any essential element from the nine? Would it make a difference? The researchers created six versions of a program: a full version without anything removed; one without statement of objectives; one without examples; one without practice and feedback; one without review; and a lean version that presented information only.

Think about this for a moment before reading on. Removing one of these things really made a difference. Which one? What was the result?

As it turns out, removing practice and feedback makes a difference. And that is reassuring. Many of our activities are designed to provide students with instant feedback. We provide information; elicit a response; and then provide feedback. That is worth the effort – provided that we can construct such an activity efficiently and economically.

In another research study (published in Journal of Educational Computing Research), Hector Garcia Rodicio investigated whether or not requiring students to answer a question made a difference. I’m referring to just the physical act of selecting a check box or radio button. In the treatment group, students were required to answer a question before getting feedback. In the control group, students received all of the same information, but they were not required to perform the physical act of selecting an answer.

Does having to answer make a difference?

Apparently it did. Richard Mayer (University of California, Santa Barbara) explains why. When students have to answer questions, they actively select relevant segments of the material, mentally organize them, and integrate them with prior knowledge (Campbell & Mayer, 2009).

The action is not insignificant. Look at the results.

A table showing mean scores and standard deviations on questions that recalled prior knowledge, supported retention and aided transfer

Note the difference an interactive question makes on retention and transfer. M is the mean score. SD is the standard deviation – a measurement of variation in the scores. Given the standard deviation, we can conclude the difference of four points is significant.

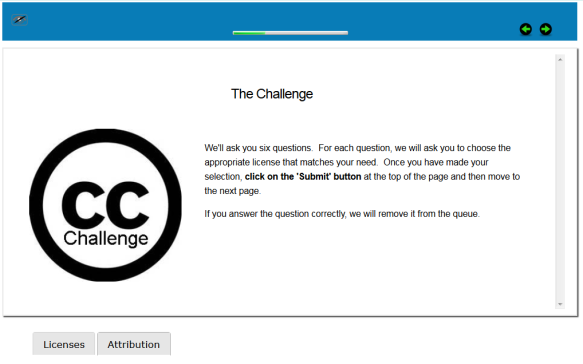

A LodeStar interaction that follows a presentation on Creative Commons licensing. Six questions check the learner’s understanding and ability to match the license to the requirement.

About the Meta-analysis

As mentioned, there are significant pitfalls to education research. However, a particular type of analysis might provide us with more direction – the meta-analysis. The meta-analysis combines studies and typically includes many more students – many more samples – than the single study. But meta-analyses are not without their own issues. Because the meta-analysis is so common in educational research, let’s explore it for a moment.

To start, the Merriam-Webster definition of a meta-analysis is this:

A quantitative statistical analysis that is applied to separate but similar experiments of different and usually independent researchers and that involves pooling the data and using the pooled data to test the effectiveness of the results

A health study meta-analysis might involve dozens of studies involving thousands of individuals. The significance of a treatment is reported as an effect size. An effect size is the magnitude of an effect of an independent variable on a dependent variable in an experiment. Twenty studies, for example, might measure the effect of room noise on reading and test scores. Let’s hypothesize that lower room noise might lead to improved reading comprehension, which leads to better student performance on a quiz. If you pooled all of the studies together, could you conclude that lower room noise really makes a difference? What is the magnitude of this difference? In other words, does the meta-analysis show an overall significant effect size?

Will Thalheimer and Samantha Cook have done a great job of simplifying the concept of an effect size. Because it is so prevalent in research and this blog entry is about research that can inform an instructor’s decision making, I will summarize it in simple terms.

The recipe for effect size goes something like this. You calculate the mean of the treatment condition and subtract from it the mean of the control group. In our example, we are looking at the performance scores of students who read in a quiet room versus a noisy room. We calculate the mean test score of students who read in a quiet room. We then calculate the mean test score of the students who read in a noisy room. We subtract one from the other. That gives us the numerator of a fraction. We then divide that number by a pooled standard deviation. We won’t know if a difference in means is meaningful unless we know something about variation. That’s what standard deviation tells us. Is a 10 point difference large or small? Thalheimer and Cook show us how a pooled standard deviation is calculated. In the end, if we have a standard way of expressing the magnitude of a difference – effect size – then we can analyze a group of studies even though individually they have different scores, ranges, means, and average departure from the mean.
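The recipe can be sketched in a few lines of Python. This is only an illustration: the quiz scores are invented, the function names are mine, and the pooled standard deviation shown is one common form (Thalheimer and Cook’s paper may define it slightly differently).

```python
from math import sqrt
from statistics import mean, variance


def pooled_standard_deviation(group_a, group_b):
    """One common pooled SD: weight each sample variance by its degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return sqrt(pooled_variance)


def effect_size(treatment, control):
    """Difference in means divided by the pooled standard deviation."""
    return (mean(treatment) - mean(control)) / pooled_standard_deviation(
        treatment, control
    )


# Hypothetical quiz scores, invented for illustration.
# In both scenarios the quiet room beats the noisy room by exactly 10 points.
quiet_tight = [82, 75, 90, 68, 85, 80]
noisy_tight = [score - 10 for score in quiet_tight]

quiet_wide = [100, 60, 95, 55, 90, 80]
noisy_wide = [score - 10 for score in quiet_wide]

d_tight = effect_size(quiet_tight, noisy_tight)
d_wide = effect_size(quiet_wide, noisy_wide)
print(f"10-point gap, tightly clustered scores: d = {d_tight:.2f}")
print(f"10-point gap, widely spread scores:     d = {d_wide:.2f}")
```

The same 10 point gap yields a much smaller effect size when the scores are widely spread, which is why the raw difference in means is not enough on its own.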

As mentioned, meta-analyses draw their own criticisms. Two issues are cited at meta-analysis.com, a proponent of meta-analyses. First, experiments that don’t show significant results are tucked away in file drawers collecting dust. That introduces a bias in the published research. It is called, logically, publication bias. Second, meta-analyses may combine apples and oranges. The following link explains the shortcomings in more detail.

https://www.meta-analysis.com/downloads/criticismsofmeta-analysis.pdf

Despite the criticisms, meta-analyses can provide convincing support for a treatment and results that are generalizable beyond the context of any particular study.
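To make the idea of pooling concrete, here is a minimal sketch of one standard approach, the fixed-effect (inverse-variance) weighted average. It is an assumption on my part that this is a fair stand-in; the meta-analyses discussed in this post may use other models, such as random-effects. The three studies and their numbers are invented.

```python
from math import sqrt


def pooled_effect(effect_sizes, variances):
    """Fixed-effect pooling: weight each study by the inverse of its variance."""
    weights = [1 / v for v in variances]
    weighted_sum = sum(w * d for w, d in zip(weights, effect_sizes))
    estimate = weighted_sum / sum(weights)
    standard_error = sqrt(1 / sum(weights))
    return estimate, standard_error


# Three hypothetical studies, invented for illustration.
# A smaller variance (usually a larger sample) earns a study more weight.
study_effect_sizes = [0.2, 0.5, 0.8]
study_variances = [0.04, 0.01, 0.04]

estimate, standard_error = pooled_effect(study_effect_sizes, study_variances)
lower = estimate - 1.96 * standard_error
upper = estimate + 1.96 * standard_error
print(f"pooled effect size: {estimate:.2f}")
print(f"approximate 95% interval: {lower:.2f} to {upper:.2f}")
```

Because precise studies count for more, the pooled estimate is steadier than any single study. It also inherits whatever bias sits in the set of studies that made it into print.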

Meta-analysis can also give us insight into instructional strategies that make a difference. In a paper titled Comparative effectiveness of instructional design features in simulation-based education: Systematic review and meta-analysis, available through the National Center for Biotechnology Information, the authors analyzed 289 studies that involved more than 18,000 trainees. The following instructional strategies were found to be relevant in simulation-based education: range of difficulty, repetitive practice, distributed practice, cognitive interactivity, multiple learning strategies, individualized learning, mastery learning, feedback, longer time, and clinical variation. Note the inclusion of practice, feedback and cognitive interactivity. Engaging students with content in a way that makes them think is effective!

These themes come up again and again in the research. Create activities that require students to apply what they have learned, make decisions or choices or perform some sort of action, get feedback and apply that feedback in future activities until mastery has been achieved. Vary the difficulty; make them think; make them practice; provide feedback and support mastery. It is still difficult to determine whether it’s worth the effort to construct such activities – but at least we are on the right track.

Activities that require students to perform and receive feedback can be created fairly efficiently. Higher order activities take more time. What does the research suggest about the effectiveness of higher order activities? One such activity is the interactive case study. Let’s look at the case study in some depth.

In a paper titled Effectiveness of case-based teaching of physiology for nursing students, published in the Journal of Taibah University Medical Sciences, the authors reported that:

The performance in tests was statistically significantly better after didactic lectures (mean, 17.53) than after case-based teaching (mean, 16.47) (two-tailed p = 0.003). However, 65–72% of students found that case-based teaching improved their knowledge about the topic better than lectures.

| Teaching method | Mean | SD | SEM | p |
| --- | --- | --- | --- | --- |
| Didactic | 17.53 | 3.58 | 0.38 | 0.003 |
| Case-based | 16.47 | 3.69 | 0.39 | |

The first part doesn’t sound very supportive. Students performed worse in the treatment that used the case-based teaching method. This underscores one of the challenges of measuring the effectiveness of a treatment like case studies, problem-based learning, decision-making scenarios and other higher order activities. If I simply taught to the test, students might perform better on the test than if they engaged in a case or some other ‘indirect’ activity. But what is the effect on satisfaction or long-term retention or transfer of knowledge to the work setting? The research may exist and may answer that question, but the quest for that insight is long and arduous.

The author of the above study conceded that several studies contradicted his findings. In fact, there are several research studies that support the use of case studies in both online and face-to-face settings. The following study concluded that case studies were effective whether created by the instructor or a third party: Case Study Teaching Method Improves Student Performance and Perceptions of Learning Gains (Kevin M. Bonney, 2015).

The impact of the case study method was significant. It produced a two grade increase.

The author wrote:

Although many instructors have produced case studies for use in their own classrooms, the production of novel case studies is time-consuming and requires skills that not all instructors have perfected. It is therefore important to determine whether case studies published by instructors who are unaffiliated with a particular course can be used effectively and obviate the need for each instructor to develop new case studies for their own courses.

This is significant. Case studies were found to be effective, whether created by the instructor of the course or by an instructor unaffiliated with the course. This supports the use of activities gleaned from content repositories. Case studies, however, are not equally available in all disciplines. In the sciences, instructors can find cases at the National Center for Case Study Teaching in Science. The most difficult and time-consuming challenge related to case studies is in their creation – just getting it down on paper.

At the National Center for Case Study Teaching in Science, some of the work has been done for you. A case on climate change, for example, provides background information on the meaning of climate change and how we know that it is occurring. The case study places the student in the role of an intern to a US senator. The job of the intern is to help the senator understand the science behind climate change and the impact of climate change on the planet. The student is engaged in a number of questions that require some analysis and charting.

The results of Professor Bonney’s research are taken verbatim from the author.

To evaluate the effectiveness of the case study teaching method at promoting learning, student performance on examination questions related to material covered by case studies was compared with performance on questions that covered material addressed through classroom discussions and textbook reading. The latter questions served as control items; assessment items for each case study were compared with control items that were of similar format, difficulty, and point value. Each of the four case studies resulted in an increase in examination performance compared with control questions that was statistically significant, with an average difference of 18%.

In the following study, Effectiveness of integrating case studies in online and face-to-face instruction of pathophysiology: a comparative study (http://advan.physiology.org/content/ajpadvan/37/2/201.full.pdf), we learn the following:

- Students who enjoyed the case studies performed better.

- Students liked case studies because they could apply what they learned.

- The reasons why students liked case studies had nothing to do with whether they were in a face-to-face or online class.

- Students who expected to earn better grades as a result of the case did actually earn better grades.

Efficiency

Concerning to me is the amount of time that it takes to generate the interactive case study. Because of this concern, we are investigating and piloting the use of templates – but not at the expense of student performance and satisfaction.

At our university, we recently developed two versions of an interactive case study to promote the use of a standardized classification system to document, classify, and communicate health-related issues such as Latent Tuberculosis Bacterial Infection. In a future post, I’ll write about the two versions and their effect on student performance and student satisfaction. One version came from a template and involved more student reading. The other version sequenced audio with the presentation of content and made more use of graphics. The premise is that the templated version can be created more quickly and could be generated by an instructor rather than an instructional technologist. We’re looking at whether that ease and speed came at the price of student performance and satisfaction.

If we are made confident by research that interactive case studies improve both student performance and satisfaction, and if case studies can be generated effectively and efficiently through a templated approach, then we can improve our return on investment.

We could also further efficiency by adopting cases from case libraries. In our standardized classification system example, 18 cases are available at the Omaha System – a vendor site:

http://www.omahasystem.org/casestudies.html

Related to science case studies, I have already mentioned the National Center for Case Study Teaching in Science – but there are other resources that might uncover case studies in other disciplines. Examples include the learning object repositories like Merlot (www.Merlot.org) and OER Commons (www.oercommons.org).

Again, the repositories supply the content. Couple the content with an eLearning authoring tool like Captivate, Storyline, LodeStar or a similar tool to make it interactive, and you might be able to produce an effective instructional component efficiently.

Conclusion

I set out to find research that contradicted my belief that learning interactions are useful and represent a good return on investment. I found that research. One can find examples that show discouraging results – but these are the exceptions. I found much more research that underscores the effectiveness of learning interactions, whether they be simple question items or sophisticated case studies. Now the focus should shift to producing these learning interactions efficiently.

My personal belief is that in higher ed we are at an important junction. We can concede this sort of development to the book publishers – or we can figure out ways to encourage instructors to build learning interactions and add value to their courses – for the benefit of online students.