Mobile Learning means much more than easy access to responsive educational applications from a smartphone or tablet. It is an amazing confluence of technologies that represents a new era in technology-assisted instruction. Researchers have a name for the new capabilities that technologies bring us. They call them affordances. I once hated the word. But now I embrace it. Recent advances in technology afford designers new opportunities to engage students.

New technologies bring new capabilities and help us redefine what is possible. When we had our shoulder to the wheel, working with computer-based training, floppy disks and stick figures, we looked up and saw the approach of interactive videodisc players, and imagined the possibilities. We worked with videodiscs for a time and then saw the virtue of CD-ROMs. We gave up full-screen, full-motion video for the ease of use of the CD-ROM and bought our first single-speed burners for $5,000. The CD-ROM gave way to the internet and the web application. Flash-based applications on the web gave way to HTML5. And now, the desktop is making room for the mobile app and the mobile browser experience.

We always lose something, but we gain something more important in return. New technology affords us new capabilities and new opportunities.

Organization

To make the best use of these capabilities, mobile learning demands that we think about old ideas in new ways. To start with a simple example, our current projects may have forward and back buttons that chunk the content into bite-sized pieces. We recognize that chunking can be useful to learners. But mobile users are in the habit of swiping up and down and sideways. Content is laid out for them in one long flow or in slides. Chunks on the screen are the result of how aggressively users swipe their fingers. That challenges us to think about organizing content in a new way.

Responsiveness

Mobile apps, whether run in a browser or natively on the mobile device’s operating system, must conform to all sorts of device shapes and sizes. That is called the form factor. The iPhone alone comes in multiple sizes ranging from 4 to 5½ inches. There are smartphones, phablets, mini-tablets and large tablets. There are wearables and optical displays. An application may be run on anything from a multiscreen desktop configuration to the smallest smartphone. An application may be viewed in portrait mode (vertical) or landscape mode (horizontal). The ability of a single application to conform to all of these display configurations is called responsiveness. Responsively designed applications automatically size and scale the views, pick readable font sizes, lay out components appropriately and provide for easy navigation.
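The idea of one application adapting to many screens can be sketched in a few lines. The example below is only an illustration: the breakpoints, font sizes and the `Layout` and `choose_layout` names are assumptions made for this post, not values taken from any particular tool. In a browser, the same decision is usually expressed with CSS rather than code like this.

```python
from dataclasses import dataclass

# Hypothetical breakpoints in CSS pixels; real projects tune these per design.
PHONE_MAX_WIDTH = 600
TABLET_MAX_WIDTH = 1024


@dataclass(frozen=True)
class Layout:
    columns: int        # how many content columns sit side by side
    base_font_px: int   # body text size
    navigation: str     # "swipe" for touch-first screens, "sidebar" for wide ones


def choose_layout(width_px: int, height_px: int) -> Layout:
    """Pick a layout from the viewport size and orientation alone."""
    landscape = width_px > height_px
    if width_px <= PHONE_MAX_WIDTH:
        return Layout(columns=1, base_font_px=16, navigation="swipe")
    if width_px <= TABLET_MAX_WIDTH:
        # A tablet held sideways has room for a second column.
        return Layout(columns=2 if landscape else 1, base_font_px=17, navigation="swipe")
    return Layout(columns=3, base_font_px=18, navigation="sidebar")
```

Calling `choose_layout(375, 667)` describes a phone held upright and yields a single swipeable column, while `choose_layout(1920, 1080)` yields the three-column desktop arrangement.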

Responsive Application Created with LodeStar Learning FlowPageMaker

Designing Mobile Learning Experiences

But the challenge of mobile is not just in screen sizes and navigation. It is in designing applications that are pedagogically appropriate. When we moved from computer-based training to videodisc we considered the power of full-motion video and the ability of the learner to make decisions and indicate those decisions by touching the screen and causing the program to branch. When we moved to CD-ROM we made use of roughly 650 megabytes of data, which seemed massive at the time and afforded us embedded encyclopedias and glossaries and other information and media at our fingertips. When we moved to the web, suddenly WebQuests harnessed the full power of the internet and sent learners on inquiry-based expeditions for answers.

But what now? What are the opportunities that mobile devices give us – in exchange for extremely small screen sizes, slower processors and slower connectivity?

Part of the answer lies in student access to resources when they are on a bus or on lunch break – spaces in their busy lives. The more interesting answer is access to resources and guidance from environments where learning can happen: city streets, nature trails, museums, historical and geographical points of interest – in short, from outside of the classroom and the home office.

This is what mobile learning (M-Learning) is all about. M-Learning requires much more from applications than being responsive. They should keep working while students are disconnected from the internet. They should support a link back to the mother ship, the institutional learning management system, once students are reconnected. They should report on all forms of student activity: not just quiz scores, but what students have read or accomplished, or what a trained observer has noted about the student’s performance.
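The disconnected-then-reconnected requirement amounts to a queue. Here is a minimal sketch, assuming a hypothetical `send` function that returns `True` when the institution’s system has accepted a record. The `OfflineReporter` class is invented for illustration, and a real app would also save the queue to device storage so that nothing is lost when the app closes.

```python
from collections import deque
from typing import Callable, Deque, Dict


class OfflineReporter:
    """Holds activity records while offline and forwards them once a connection returns."""

    def __init__(self, send: Callable[[Dict], bool]) -> None:
        # `send` is a hypothetical transport; it returns True when a record was accepted.
        self._send = send
        self._pending: Deque[Dict] = deque()

    def record(self, activity: Dict) -> None:
        """Remember an activity, whether or not the device is online."""
        self._pending.append(activity)

    def flush(self) -> int:
        """Deliver queued records in order, stopping at the first failure."""
        delivered = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # still offline: keep the record for the next attempt
            self._pending.popleft()
            delivered += 1
        return delivered

    def pending_count(self) -> int:
        return len(self._pending)
```

The app would call `record` whenever the student does something worth reporting and call `flush` whenever the device reports that it is back online.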

Responsiveness is an important start, but this added ability to report remotely to a learning management system is made possible by one of several closely related technologies. You may have heard these terms or acronyms: Tin Can, xAPI, IMS Caliper and CMI5.

To really appreciate what these standards contribute to the full meaning of mobility, we need to take a deeper dive into them. Bear with me. If you haven’t heard of these terms, please don’t be disconcerted. You are in good company. They represent a tremendous new capability that goes hand in hand with mobile devices, and it is best explained by the Tin Can telephone metaphor. We’re only on the leading edge of the M-Learning tsunami.

Tin Can

Tin Can was the working title for a new set of specifications that will eventually change the kinds of information that instructors can collect on student performance. To explain, let’s start with the basic learning management system. In the system, a student takes a quiz. The score gets reported to the grade book. The quiz may have been generated inside the learning management system. The student most likely logged into the system to complete the quiz.

But quizzes are just one form of assessment, and no learning management system has the tools to generate the full range of assessments and activities that are possible. Not Blackboard. Not Moodle. Not D2L. Hence, these systems support the import or the integration of activities generated by third-party authoring tools like Captivate, Raptivity, Storyline, LodeStar and dozens and dozens of others. With third-party tools, instructors can broaden the range of student engagement.

Learning management systems support tool integration through standards like Learning Tools Interoperability (LTI), IMS content packages and a set of specifications called SCORM. SCORM has been the reigning standard since the dawn of the new millennium. SCORM represents a standardized way of packaging learning content, reporting performance, and sequencing instruction. SCORM is therefore a grouping of specifications. Imagine packages of content that instructors can share (Sharable Content Object) and that follow standards that make them playable in all of the major learning management systems (Reference Model).

But SCORM has its limitations. The Tin Can API is a newer specification that remedies these limitations. A SCORM based application finds its connection (an API object) in a parent window of the application. That’s limiting. That means that the application has to be launched from within the learning management system. Tin Can enabled applications can be launched from any environment and can communicate remotely to a learner record store. Imagine two tin cans linked by a string. One tin can may be housed in a mobile application, and the other tin can in a learner record store or integrated with a learning management system. The string is the internet.
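To see why the parent-window requirement is limiting, here is a rough model of the lookup. In a real browser this search is written in JavaScript against actual window objects. The `Window` class and the `find_scorm_api` function below are stand-ins invented for this sketch, and the lookup is simplified (for example, it ignores the window that opened the content).

```python
from typing import Optional


class Window:
    """Stand-in for a browser window; `api` models the API object an LMS exposes."""

    def __init__(self, parent: Optional["Window"] = None, api: Optional[object] = None) -> None:
        self.parent = parent
        self.api = api


def find_scorm_api(window: Window, max_hops: int = 10) -> Optional[object]:
    """Climb the chain of parent windows until one of them exposes an API object."""
    current: Optional[Window] = window
    hops = 0
    while current is not None and hops <= max_hops:
        if current.api is not None:
            return current.api
        current = current.parent
        hops += 1
    return None  # no LMS above us, so there is nowhere to report


# Content launched inside an LMS finds the API two frames up.
lms_window = Window(api="LMS API object")
content_window = Window(parent=Window(parent=lms_window))

# Content opened on its own, for instance from a phone's home screen, finds nothing.
standalone_window = Window()
```

With these stand-ins, `find_scorm_api(content_window)` returns the LMS’s API object and `find_scorm_api(standalone_window)` returns `None`. That empty result is the limitation: with no LMS window above it, the application has no connection to report through.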

SCORM has a defined and limited data set. An application can report on user performance per assessment item or overall performance. It can report on number of tries, time spent, responses to questions and dozens of other things, but it is ultimately limited to a finite list of data fields. (Only one data field, cmi.suspend_data, allowed arbitrary data, and SCORM 1.2 capped it at 4,096 characters.)

Tin Can isn’t limited in the same way. Tin Can communicates a statement composed of an actor, a verb and an object, much like a simple sentence. The actor is the learner. The verb is an action. And the object is the thing the action was performed on. ‘Jill Smith read Ulysses’ is a simple example. Imagine the learner using an eBook reader that communicates a student’s reading activity back to the school’s learner record store (housed in an LMS). Tin Can is M-Learning’s bedfellow. The mobile device gives students freedom of movement. Tin Can frees students from the learning management system. Any environment can become a learning environment. Learning and a record of that learning can happen anywhere.
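Written out as data, the Jill Smith example might look like the sketch below. The `actor`, `verb` and `object` keys follow the xAPI statement format. The email address, the verb identifier and the activity identifier are placeholders invented for this illustration, and `build_statement` is a hypothetical helper rather than part of any library.

```python
import json
from typing import Dict


def build_statement(name: str, email: str, verb_id: str, verb_label: str,
                    activity_id: str, activity_name: str) -> Dict:
    """Assemble a statement: who did it, what they did, and what they did it to."""
    return {
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {"id": verb_id, "display": {"en-US": verb_label}},
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
    }


# Placeholder identifiers; a real deployment would use its own agreed-upon IRIs.
statement = build_statement(
    name="Jill Smith",
    email="jill.smith@example.com",
    verb_id="http://example.com/verbs/read",
    verb_label="read",
    activity_id="http://example.com/books/ulysses",
    activity_name="Ulysses",
)
print(json.dumps(statement, indent=2))
```

Because the verb and the object are identified by open-ended identifiers rather than by a fixed list of fields, an application can describe activities that the authors of the specification never anticipated.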

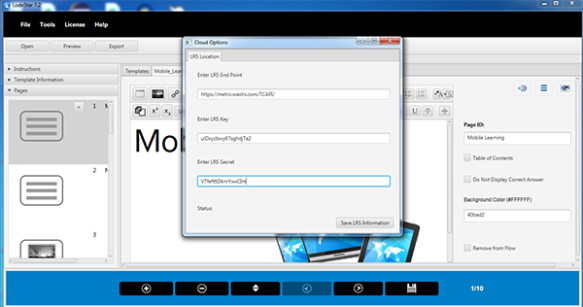

LodeStar Learning (LodeStar 7.2): the ability to configure a Learner Record Store (LRS) service and export to a Tin Can API enabled learning object

The next acronym, xAPI, is just the formal name for Tin Can. Tin Can was a working title. When I was at Allen Interactions working on ZebraZapps our team provided early comment related to this evolving specification – which became xAPI. The eXperience API is a cool term for a cool concept, but Tin Can has stuck as a helpful metaphor.

The openness of Tin Can, however, presents its own challenge. If one application reports on student reading performance in one way, and another application reports on a similar activity but in a different way, it is hard to aggregate the data and analyze it effectively. It’s hard to compare apples and oranges.

IMS Caliper attempts to solve this problem. IMS Global is the collaborative body that brought us standards for a variety of things, including learning content packages and quiz items. IMS Caliper is a set of standards that support the analysis of data. They define a common language for labeling learning data and measuring performance.
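The apples-and-oranges problem is easy to make concrete. In the sketch below, two imaginary applications report reading activity with different verbs, and a hand-built mapping table is needed before the records can be counted together. This is not the Caliper format. The verb identifiers and the `VERB_ALIASES` table are invented purely to show the kind of reconciliation that a shared vocabulary is meant to make unnecessary.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical mapping from each tool's own verb identifier to one shared label.
VERB_ALIASES = {
    "http://example.com/app-a/verbs/read": "read",
    "http://example.com/app-b/verbs/finished-reading": "read",
    "http://example.com/app-b/verbs/viewed-page": "viewed",
}


def count_by_shared_verb(statements: Iterable[Dict]) -> Counter:
    """Tally statements under a common label; anything unrecognized is flagged."""
    counts: Counter = Counter()
    for statement in statements:
        verb_id = statement["verb"]["id"]
        counts[VERB_ALIASES.get(verb_id, "unmapped")] += 1
    return counts


sample = [
    {"verb": {"id": "http://example.com/app-a/verbs/read"}},
    {"verb": {"id": "http://example.com/app-b/verbs/finished-reading"}},
    {"verb": {"id": "http://example.com/app-c/verbs/skimmed"}},
]
totals = count_by_shared_verb(sample)
```

Here `totals["read"]` is 2 and `totals["unmapped"]` is 1. Every new application means another row in the table, which is the maintenance burden a common language for learning data is designed to remove.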

Which leads us to the last standard: CMI5. CMI5 bridges Tin Can with SCORM. Applications still benefit from the grade book and reporting infrastructure built around SCORM, but are free to connect remotely outside of the confines of the LMS, once again supporting M-Learning.

Had I written this entry a year ago, I would have found it difficult to try out various learner record stores. Today, they abound. The following link lists tools and providers: http://tincanapi.com/adopters/

The following two LRS providers give you an inexpensive service in order to test out this technology for yourself.

Rustici SCORM Cloud

Saltbox Wax LRS

So what?

Now that we’re free to roam around the world, what do we do with that? Mobile applications, even browser-based mobile applications, use GPS, cell towers and Wi-Fi to locate our phones geographically. We can construct location-aware learning. We can guide students on independent field trips. They can collect information and complete assessments of their learning. All of that can be shipped back to the institution through the learner record store. Mobile devices have accelerometers and gyroscopes that help the phone detect orientation (e.g. horizontal and vertical) and the rate of rotation around the x, y and z axes. With that we can create applications that assess the coordination of a learner in completing a task that requires manual dexterity. Devices have cameras and microphones, both of which can be used to support rich field experiences.
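Location-aware learning usually comes down to one question: is the student close enough to a point of interest? A minimal sketch using the haversine formula for distance over the earth’s surface follows. The 50 metre radius, the coordinates in the usage note and the function names are assumptions chosen for illustration.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000  # mean radius of the earth in metres


def distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def is_at_stop(learner: Tuple[float, float], stop: Tuple[float, float],
               radius_m: float = 50.0) -> bool:
    """True when the learner's reported position is within radius_m of the stop."""
    return distance_m(learner[0], learner[1], stop[0], stop[1]) <= radius_m
```

For a made-up field trip stop at `(45.0, -93.0)`, a student reporting `(45.0001, -93.0)` is about 11 metres away and counts as arrived, while a student at `(45.01, -93.0)` is more than a kilometre away and does not. Phone positions can be off by tens of metres, so a forgiving radius is a sensible starting point.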

The smart pedagogy for M-Learning is one that recognizes these affordances and uses them – rather than shrinking a desktop experience into a smaller form factor.

An Example

Aside from our work at LodeStar Learning and at the university, my most recent encounter with this technology came from a serendipitous meeting with a local community leader who introduced me to Pivot The World.

Pivot The World (http://www.pivottheworld.com) is an example of a good starting point. It is a start-up company interested in working with universities, museums, cities, towns and anyone interested in revealing the full richness of a location in terms of history and cultural significance. It combines the freedom of movement of a mobile device with its ability to detect location, overlay imagery and geographical information, and match what its camera sees to a visual database to retrieve related information. The combination of camera, maps, imagery, audio, location, and other services engages learners in a new kind of experience.

The Pivot The World founders and developers started in Palestine, have since applied their technology to a tour of Harvard University, and are currently working with a volunteer group of history buffs to create a Pivot Stillwater experience in our own hometown. At the north end of town, where there are now condominiums, a simple swipe of the finger can reveal the old Stillwater Territorial Prison, elements of which are preserved in the design of the new site.

If a university or museum wished to keep a record of student or visitor experiences with the application, then an integration with Tin Can (xAPI) would add that dimension. As users engage with the content, statements of their experience could be sent to a Learner Record Store.
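Sending a statement is an ordinary web request. The sketch below prepares one with Python’s standard library but does not send it. The endpoint address, the credentials and the `build_lrs_request` helper are placeholders. The `statements` resource and the `X-Experience-API-Version` header come from the xAPI specification, and many LRS services accept HTTP Basic credentials like those shown here. The version value is an assumption and should match whatever a given LRS supports.

```python
import base64
import json
import urllib.request
from typing import Dict


def build_lrs_request(endpoint: str, key: str, secret: str,
                      statement: Dict) -> urllib.request.Request:
    """Prepare (but do not send) an HTTP POST that delivers one statement to an LRS."""
    credentials = base64.b64encode(f"{key}:{secret}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/statements",
        data=json.dumps(statement).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumed version string; use the one your LRS documents.
            "X-Experience-API-Version": "1.0.3",
            "Authorization": f"Basic {credentials}",
        },
    )


# Placeholder endpoint, credentials and statement, for illustration only.
request = build_lrs_request(
    endpoint="https://lrs.example.com/xapi/",
    key="key",
    secret="secret",
    statement={
        "actor": {"name": "A Visitor", "mbox": "mailto:visitor@example.com"},
        "verb": {"id": "http://example.com/verbs/visited",
                 "display": {"en-US": "visited"}},
        "object": {"id": "http://example.com/places/territorial-prison"},
    },
)
# To actually send it: urllib.request.urlopen(request)
```

Nothing in the request depends on where the application was launched. That is the point of the tin can and string: the phone only needs the address of the other can.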

Conclusion

LodeStar Learning’s mission is to make these technologies and capabilities accessible to instructors. We have done that by adding to and improving our templates. We have incorporated the ability to export any learning object with Tin Can capability. Now instructors can choose between SCORM 1.2, SCORM 1.3, SCORM CLOUD, SimpleZip (for Schoology and other sites) and, most recently, TinCan 1.0.

We have improved Activity Mobile Maker and added ARMaker (for geographically located content) and FlowPageMaker for a new style of mobile design.

We’ve already gone global. Now we’re going mobile. We’re embracing M-Learning and all of its amazing affordances.