Robert N. Bilyk

President, LodeStar Learning Corporation

Introduction

With artificial intelligence, new tools, and new standards, a sea change is improving our ability to create simulations efficiently. I’ll take a moment to reprise my summary of the benefits and types of simulations and then dive into what we can now do efficiently that we could not do before.

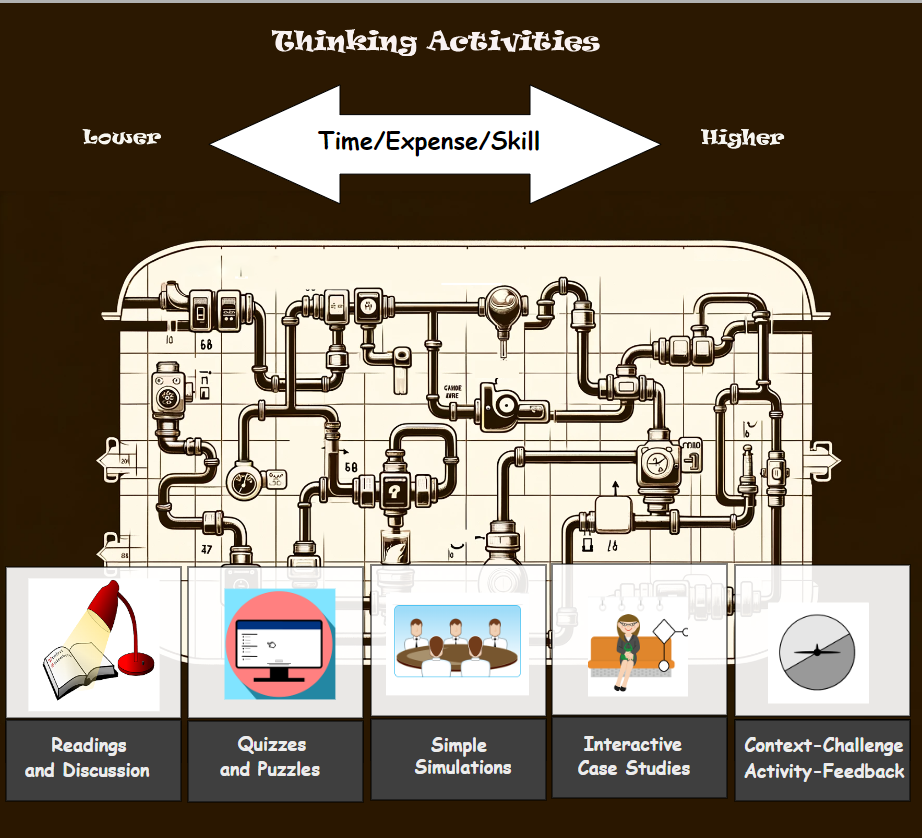

Why simulations

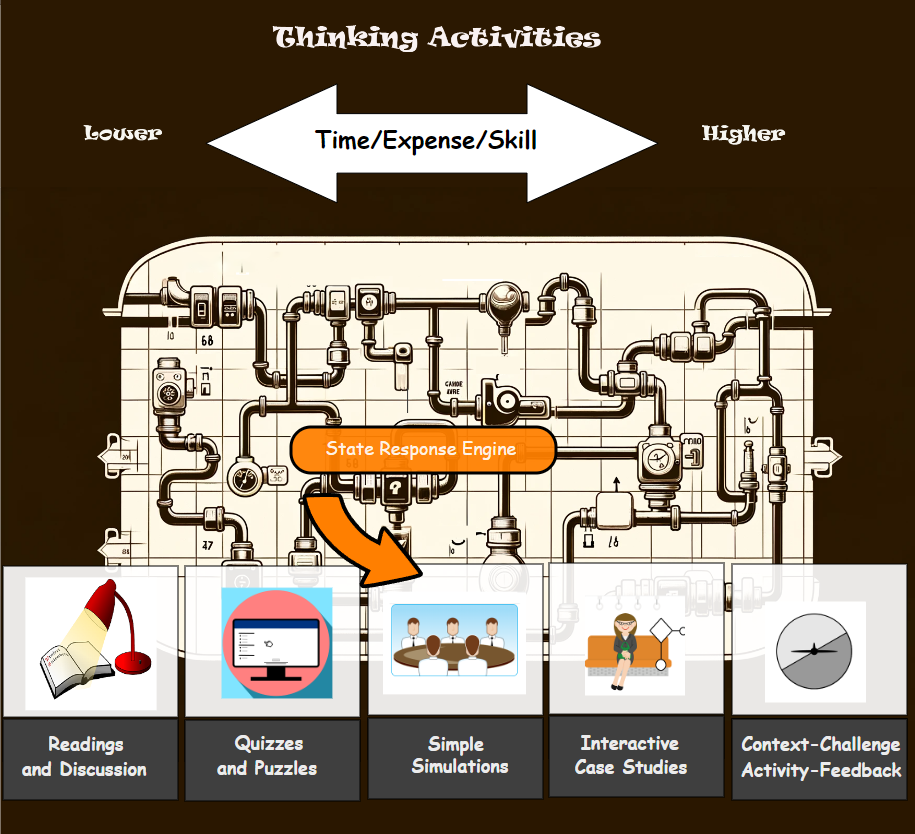

Simulations help learners draw upon their knowledge to make decisions in a ‘real-world’ setting. They stimulate recall of recent learning and past knowledge. They demonstrate how knowledge can be applied. They can simplify situations to support learning or they can tax learners with the complexity of challenging environments.

The term simulation can apply to a broad range of instructional activities. It can mean role-playing, a virtual lab, a decision-making scenario, a game, a diagnostic exercise, an immersive reality environment, and more. A simulation can range from a simple demonstration of how things work, like an electrical circuit, to a complex problem that invokes the learner’s critical thinking and analytical skills.

I’ll limit this discussion to decision-making scenarios, which can include simple simulations (or ShortSims) and highly complex interactive case studies.

Behavior mindset shift

AI, new tools, and new standards dramatically lower the cost of building simulations. But it takes more than technology to turn the tide. Christopher Lind (host of Future-Focused [1]) and Michael Vaughn (CEO of The Regis Company) captured the issue in their recent conversation about simulations.

Michael Vaughn has been working in this space for years. In his conversation with Christopher Lind, he notes that:

“The first thing we realized when we put instructional designers into it [simulation] the reaction is always the same, they loved it because it was fresh, it was new, it was a new way of thinking about building things, and all that stuff was really exciting and then when they had to sit down and actually start building a simulation it was almost the deer in the headlights.”

He and Christopher agree that a lot of design has really been about information architecture and not the design of learning experiences. A simulation is a learning experience. They acknowledge that for years building simulations seemed beyond the capability and budget of learning and development professionals.

But that is no longer true.

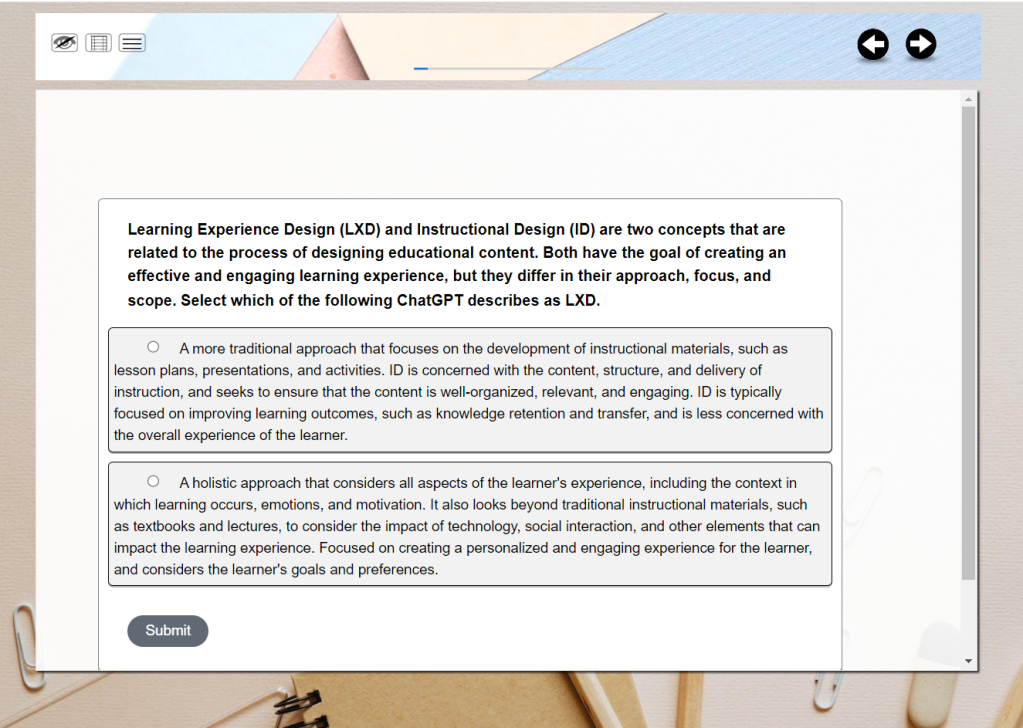

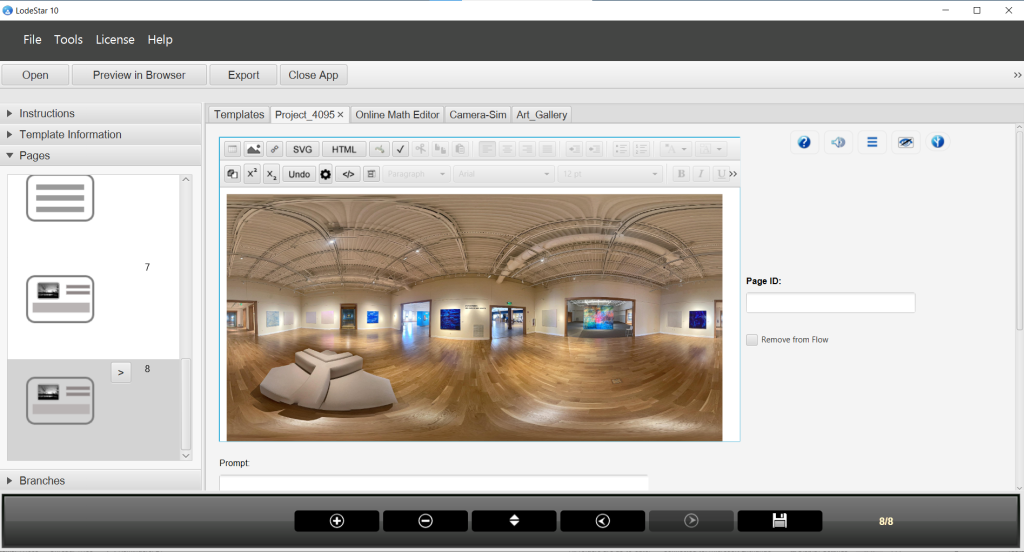

A simulation project

For my courses at Metropolitan State University in Learner Experience Design [3], I have been contemplating a simulation that helps students apply what they’ve learned from my presentations, the texts, and the examples. So, I’ve been building a prototype. I’ll describe the prototype, what skills I needed, and how new tools and standards have helped me.

Let’s begin with the purpose of the simulation.

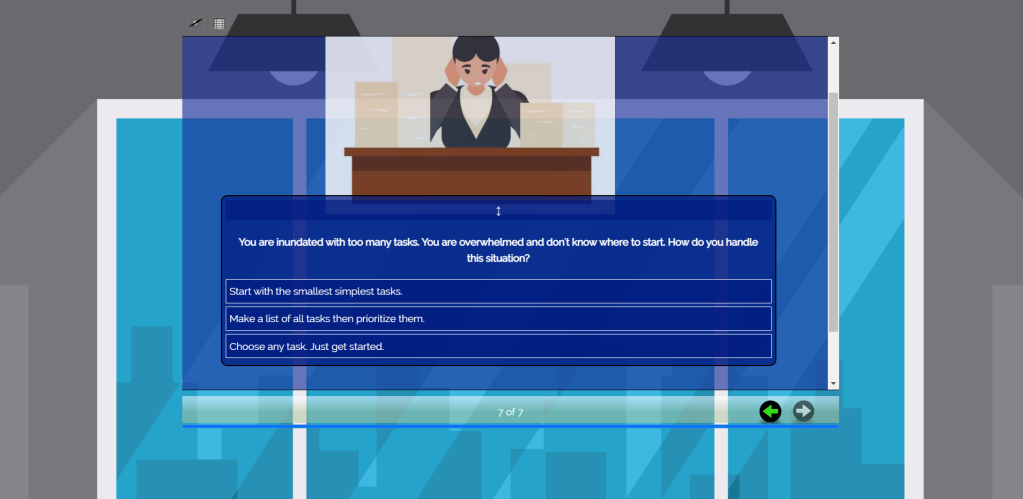

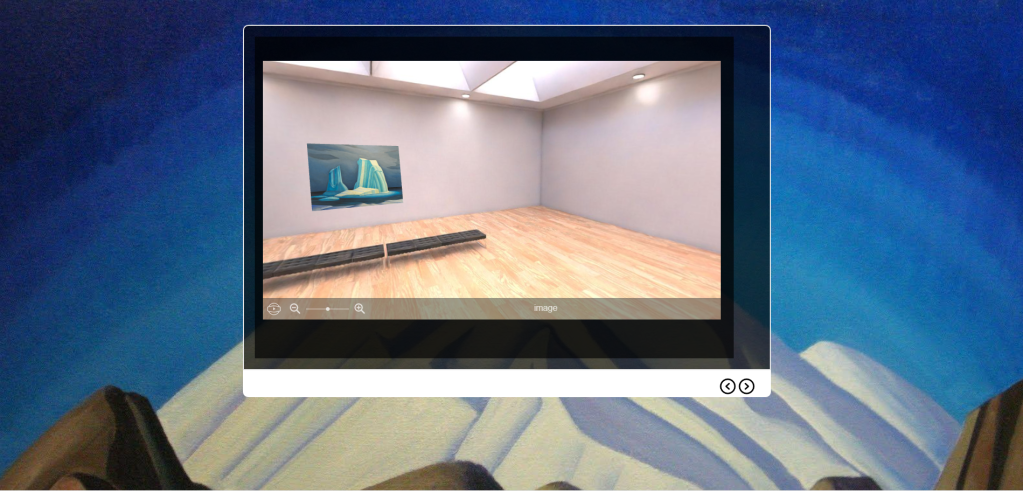

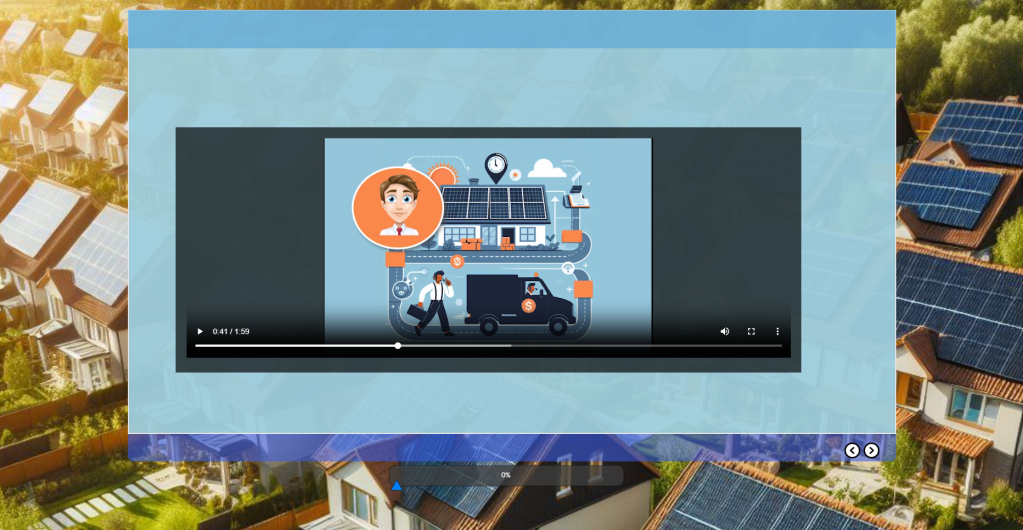

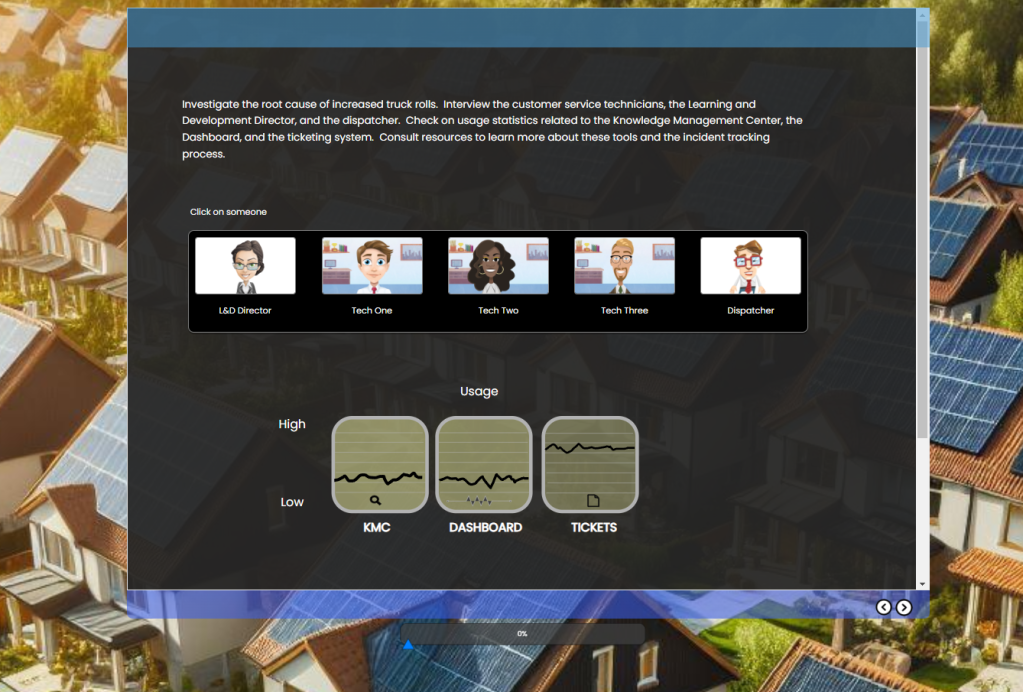

In my simulation I challenge students to develop training that helps a company solve a problem. As Paul Matthews [2] writes, sometimes learning experience designers need to play the role of a performance consultant and dig for the root cause of a performance issue. In my simulation, you are asked to develop training for a residential solar panel installer. The fictitious company installs and services solar panels. The first tier of technical support for customers is the customer service technician. If the tech can’t solve the problem over the phone with basic problem-solving strategies and tools, then a truck and field technician are dispatched to the customer site. The company’s goal is to reduce what it calls truck rolls and, in doing so, save money.

The simulation randomly selects one scenario from a set of scenarios, each with a different root problem. Learners must interview technicians, the L&D director, and the dispatcher. They must look at field notes and the tool metrics to decide on the learning outcomes that will make a difference. Finally, they pick outcomes from a list. Each outcome on the list is a strong or weak match depending on the randomly selected scenario.

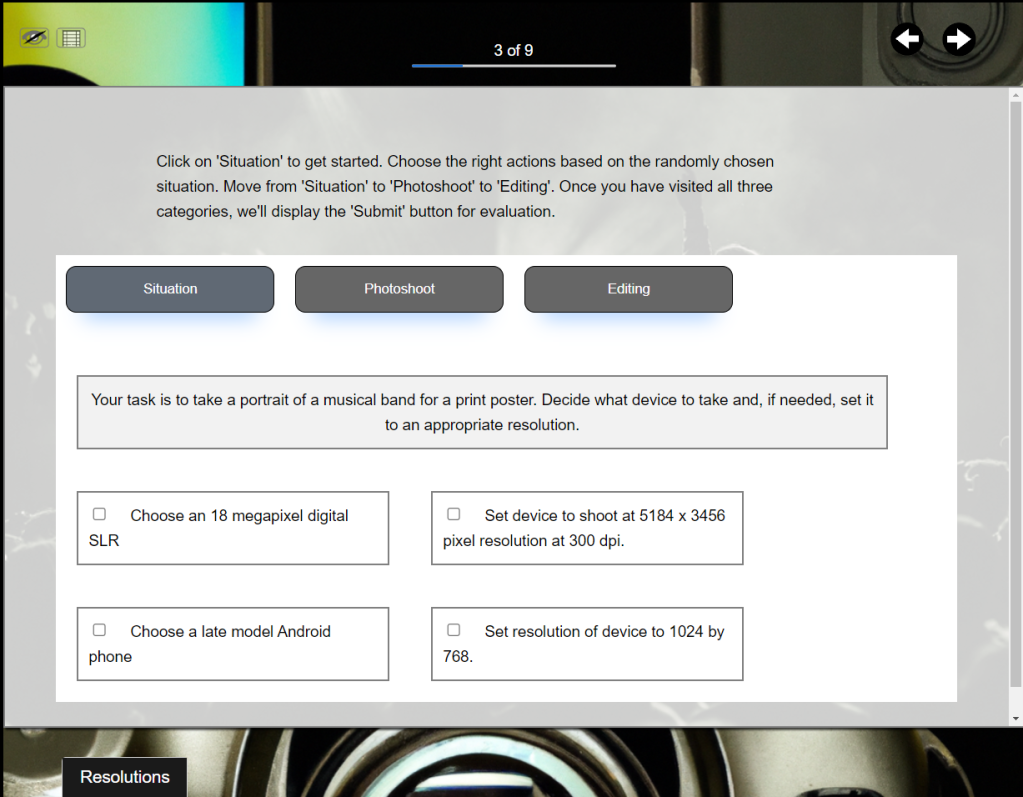

Let’s review some of the actions that are critical to an interesting simulation.

Defining a Challenge

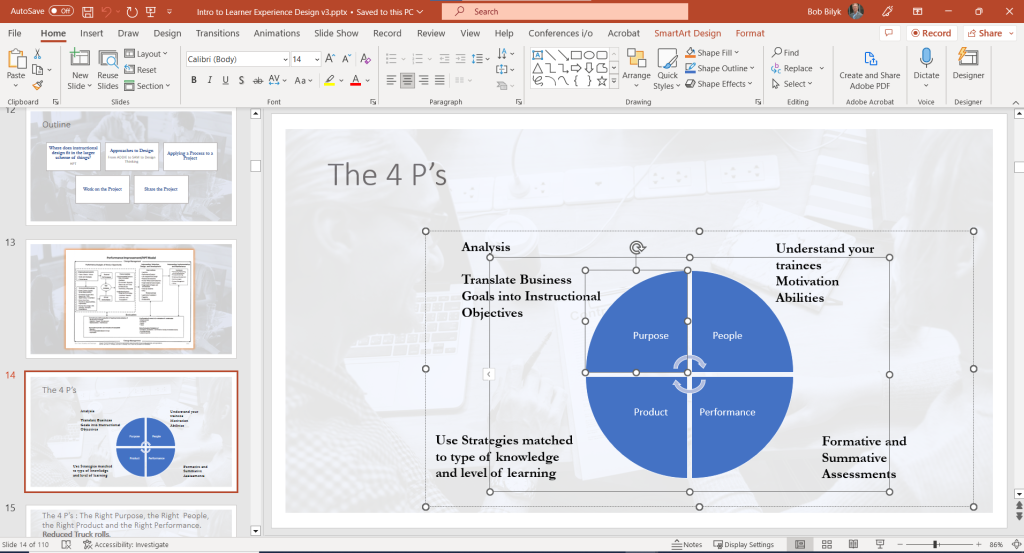

So, the first mindset shift is to think in terms of a problem and a challenge to present to the learner. That’s a little different than a presentation on an ADDIE Analysis or a presentation on Design Thinking Empathy. We’re not developing a presentation that competes with a hundred YouTube videos. We’re developing an experience.

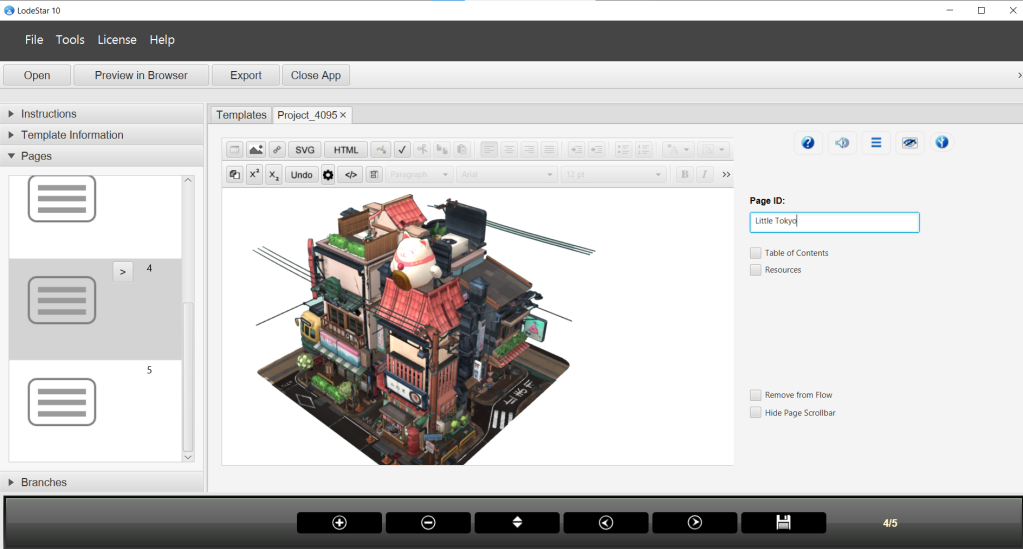

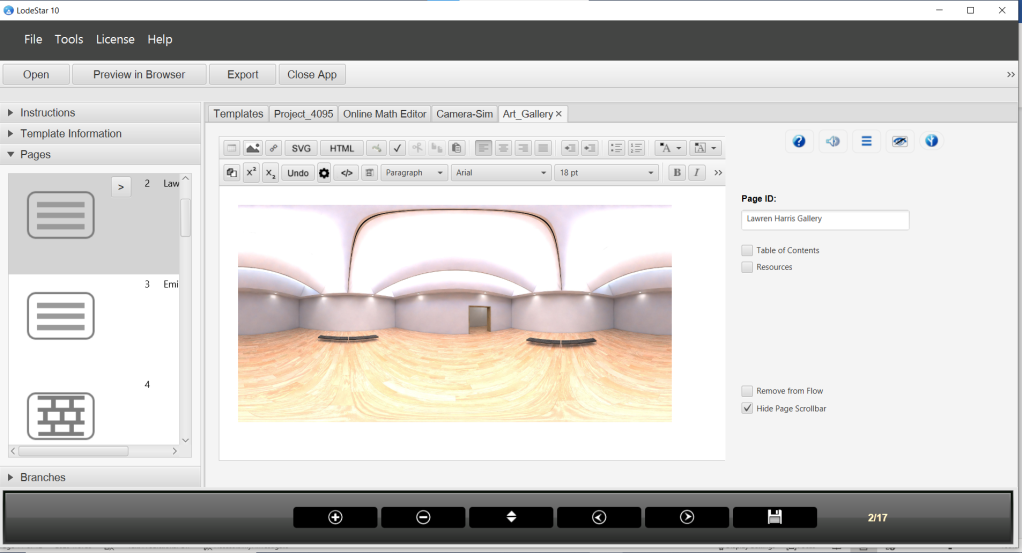

Creating Context

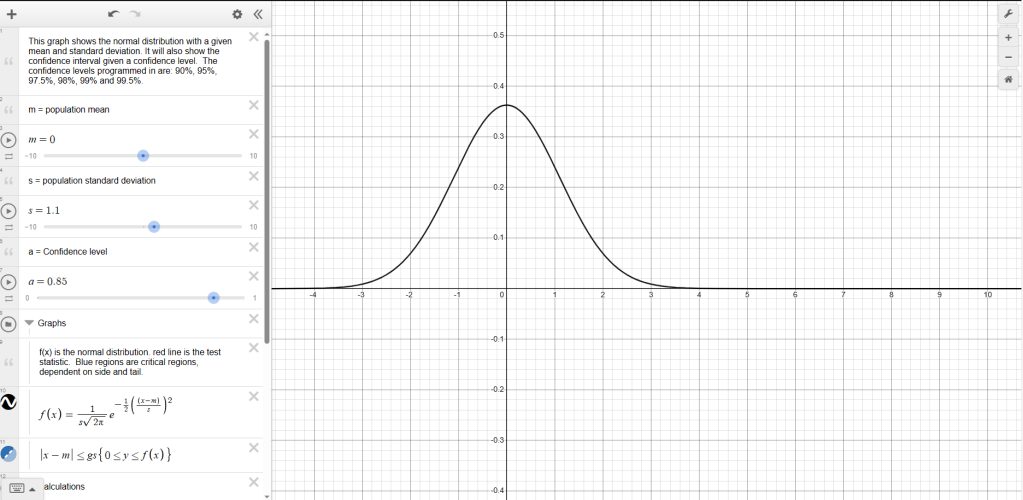

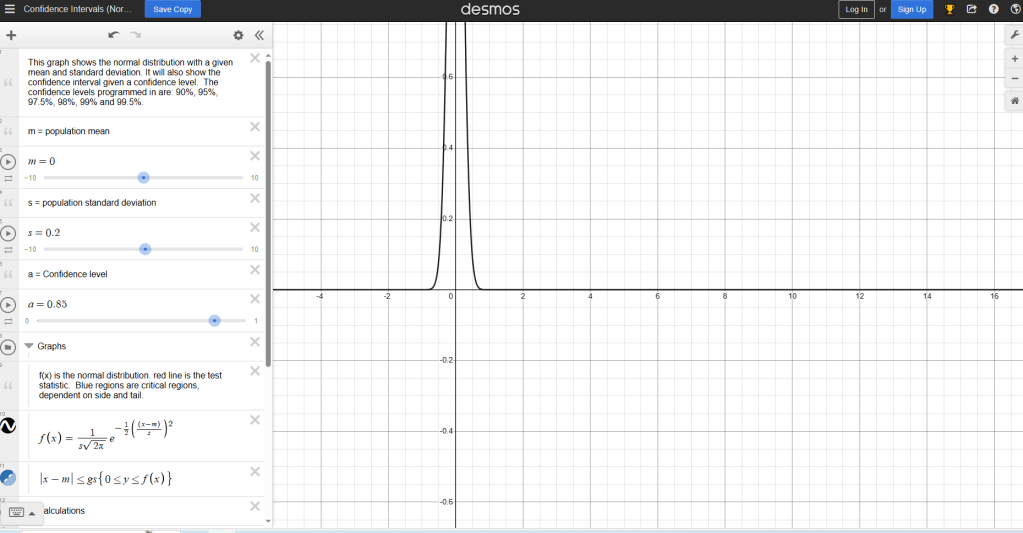

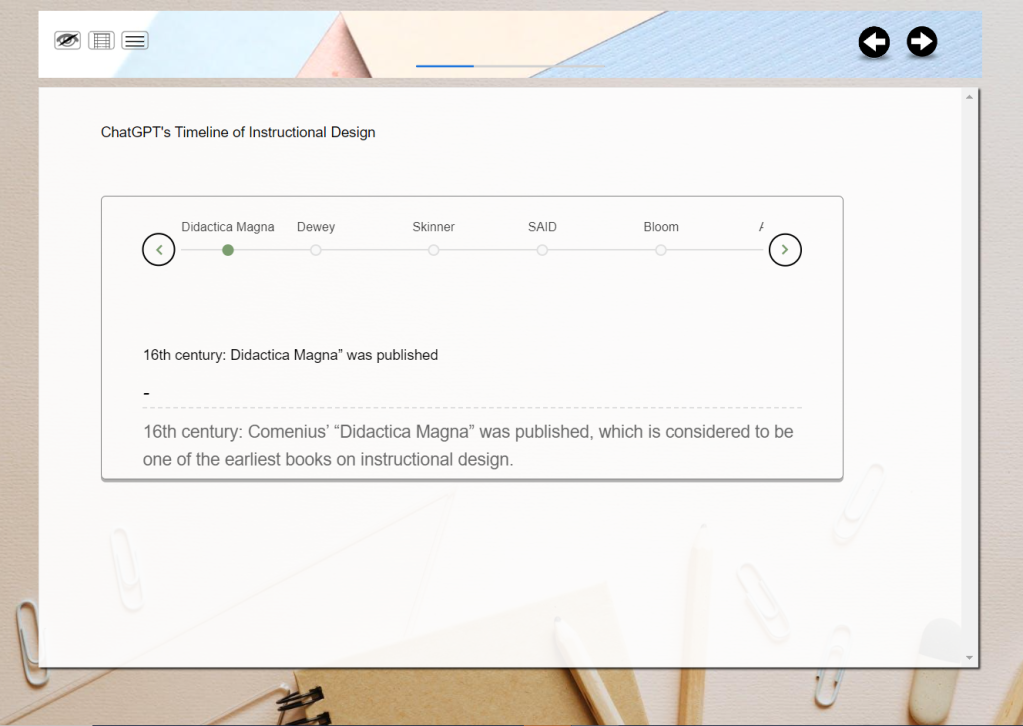

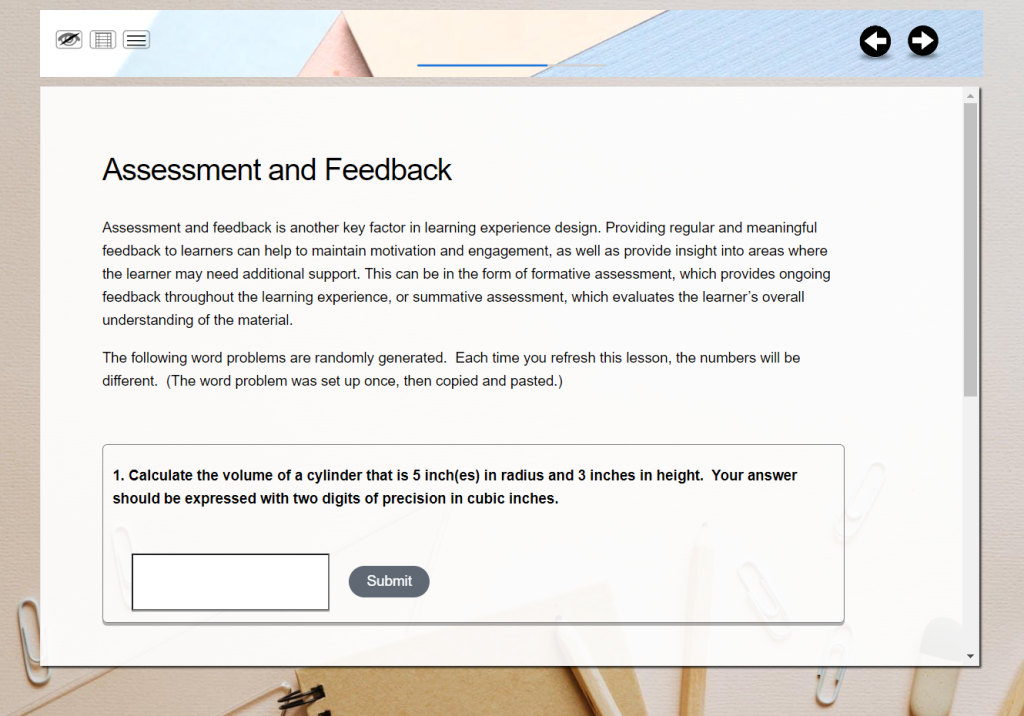

It’s been more than a year since AI was democratized by ChatGPT, DALL-E, and Bard. Now there are dozens of tools that allow us to generate text and images, convert text to speech, synchronize animations with sound, and more.

For my prototype, Bing Image Creator generated images related to solar panel installation. I tried to choose images that were both illustrations and stylistically consistent. That proved to be very challenging but the tools improve each week and, at this stage, I’m only developing a prototype.

I used ChatGPT to help generate common problems that homeowners experience with their solar panels. I didn’t use the ChatGPT content but it gave me ideas to dig into. The problems can range from decreased energy production to complete system failures. I gathered information from ChatGPT, solar panel installers, and my own experiences.

I asked Bing Image Creator (powered by DALL-E) to generate images of a customer service timeline and specific pieces of equipment like the inverter – a device that converts the current produced by a solar panel to one that will work in the home.

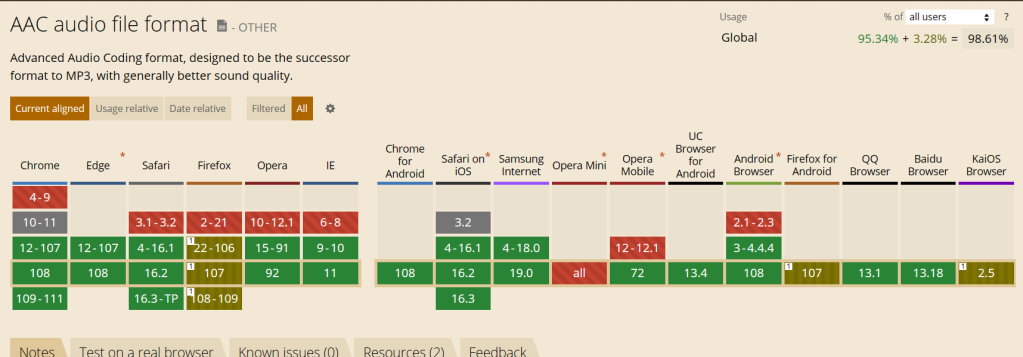

I assembled these images in my video editor and combined them with a script that was fed into a text-to-speech synthesizer. I used Amazon Polly at no cost, but there are dozens of inexpensive synthesizers available. They use AI to produce natural-sounding speech. The inexpensive ones aren’t perfect, but for prototyping or for projects on a shoestring budget, they may be acceptable.

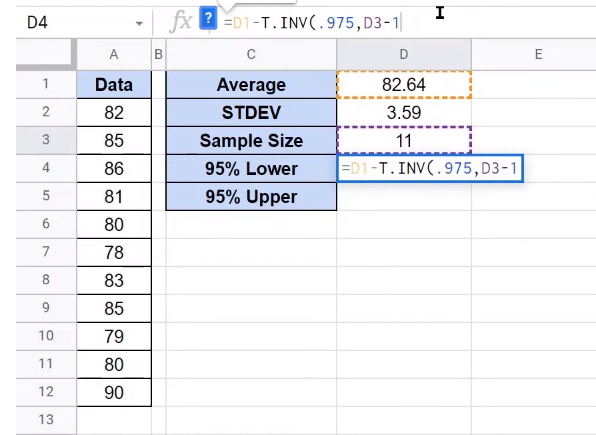

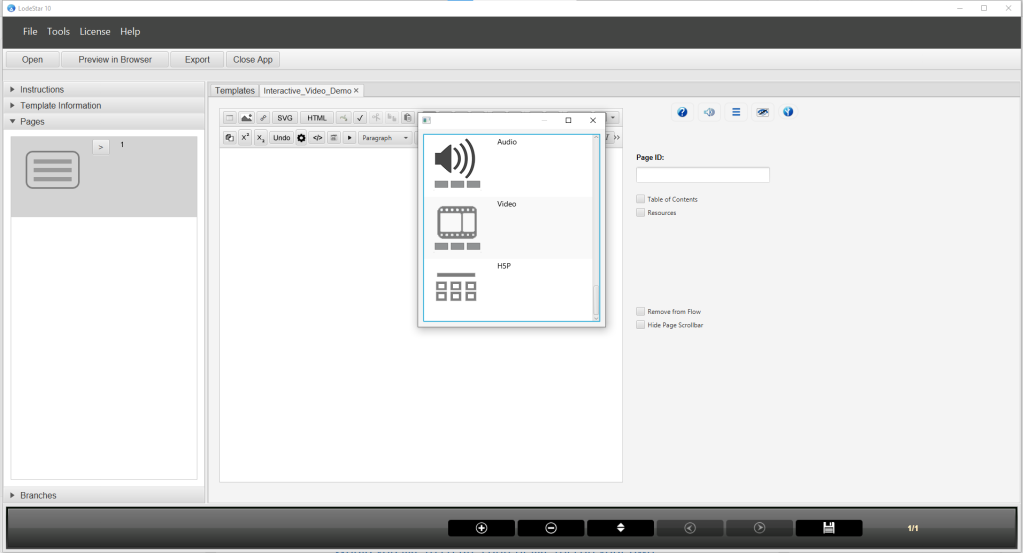

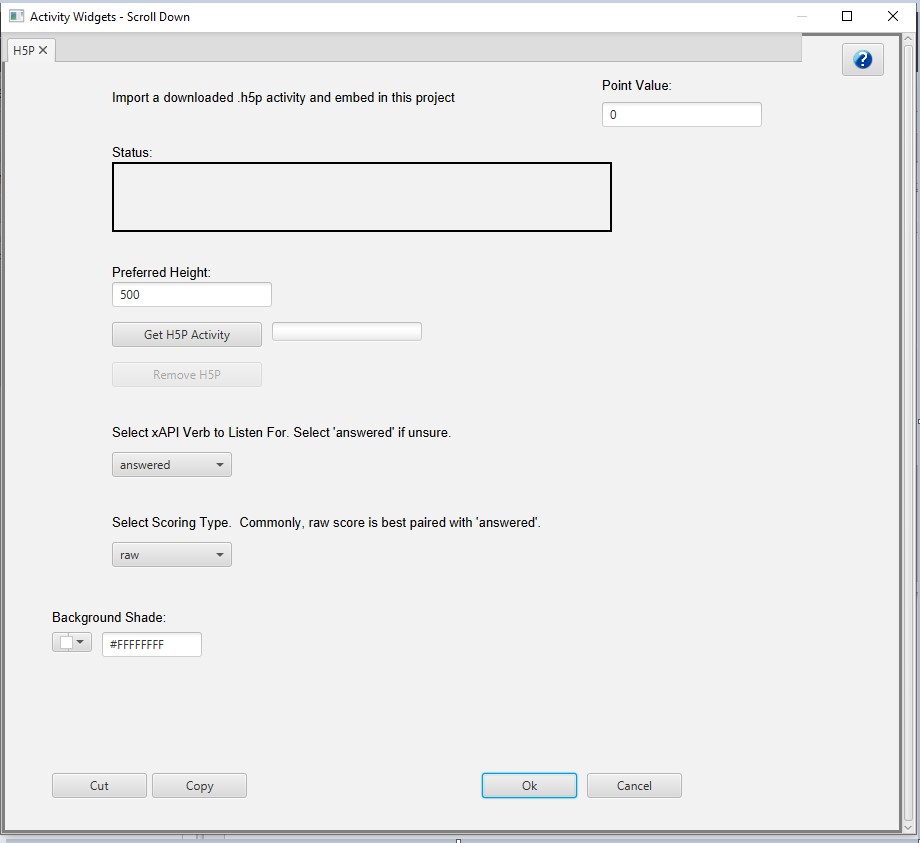

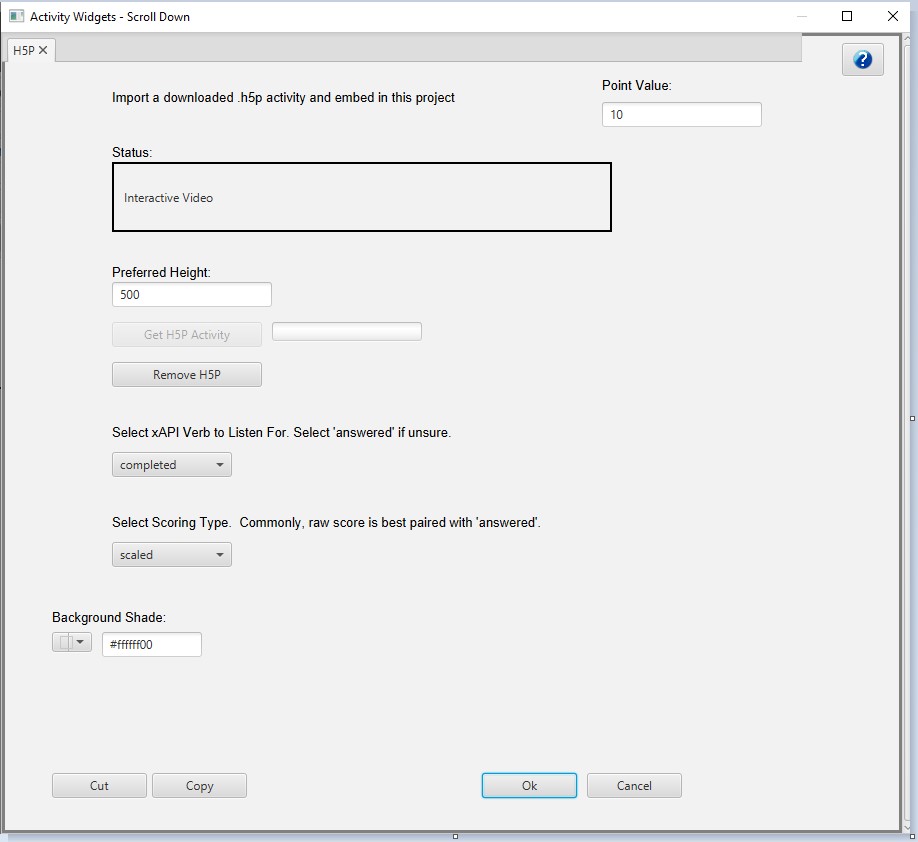

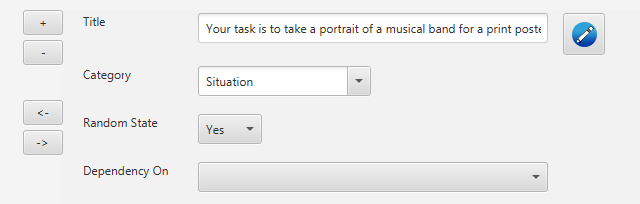

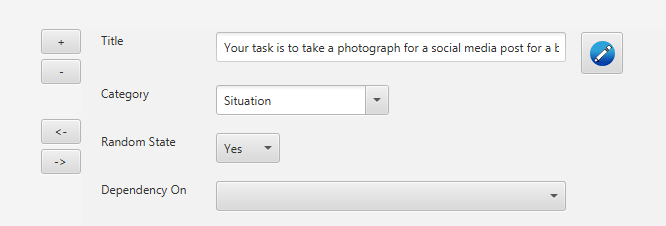

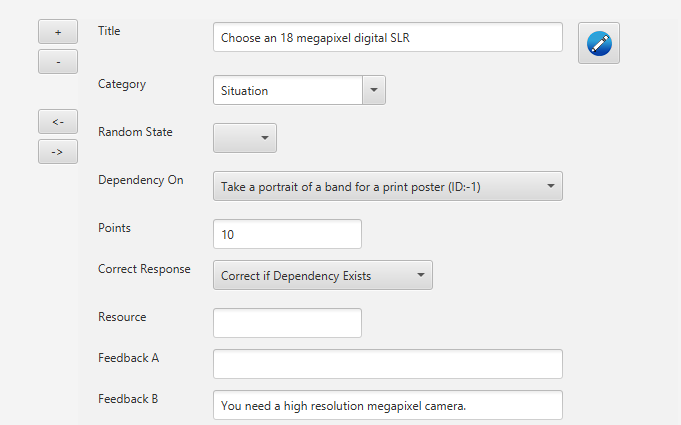

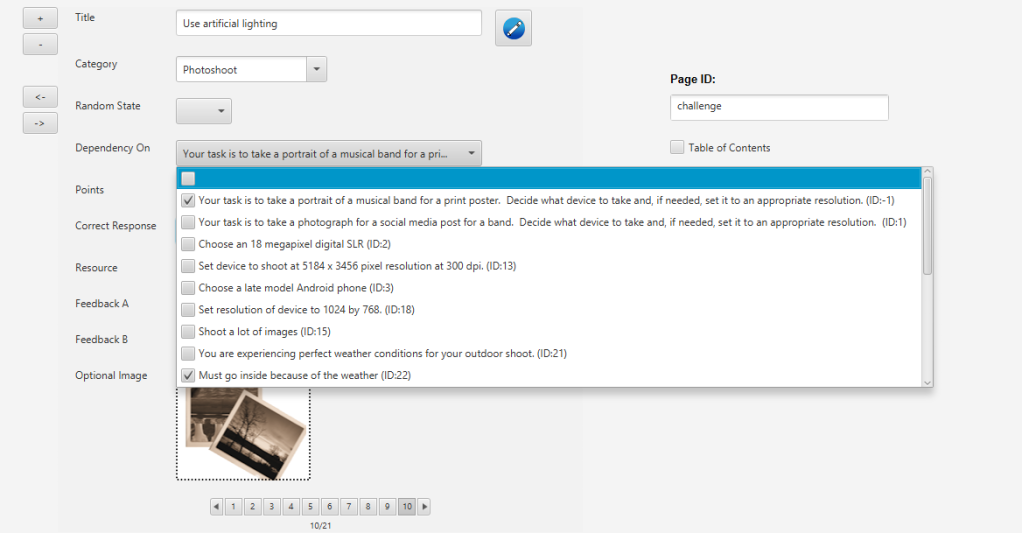

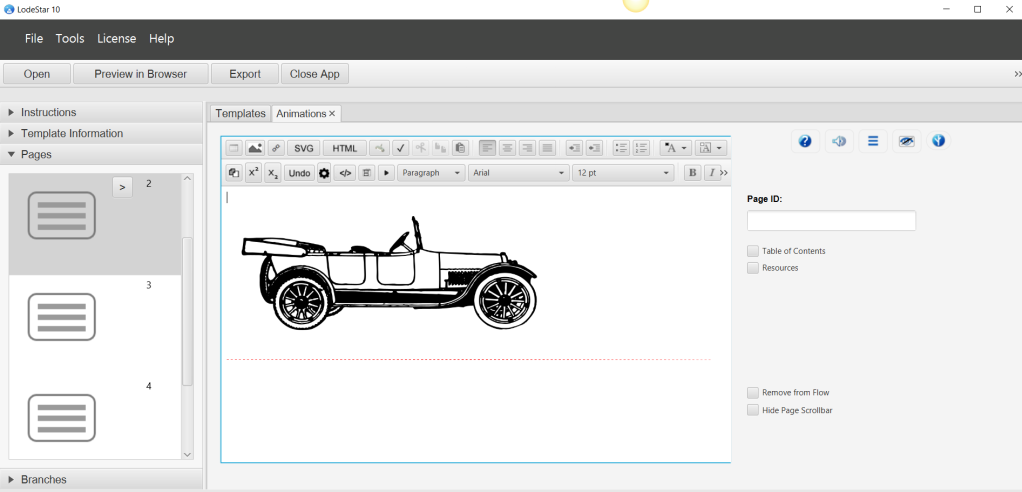

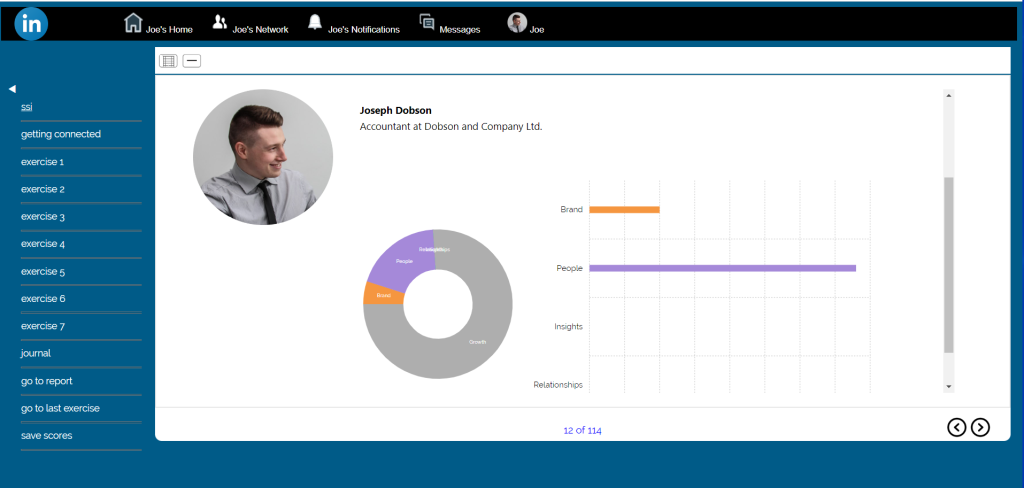

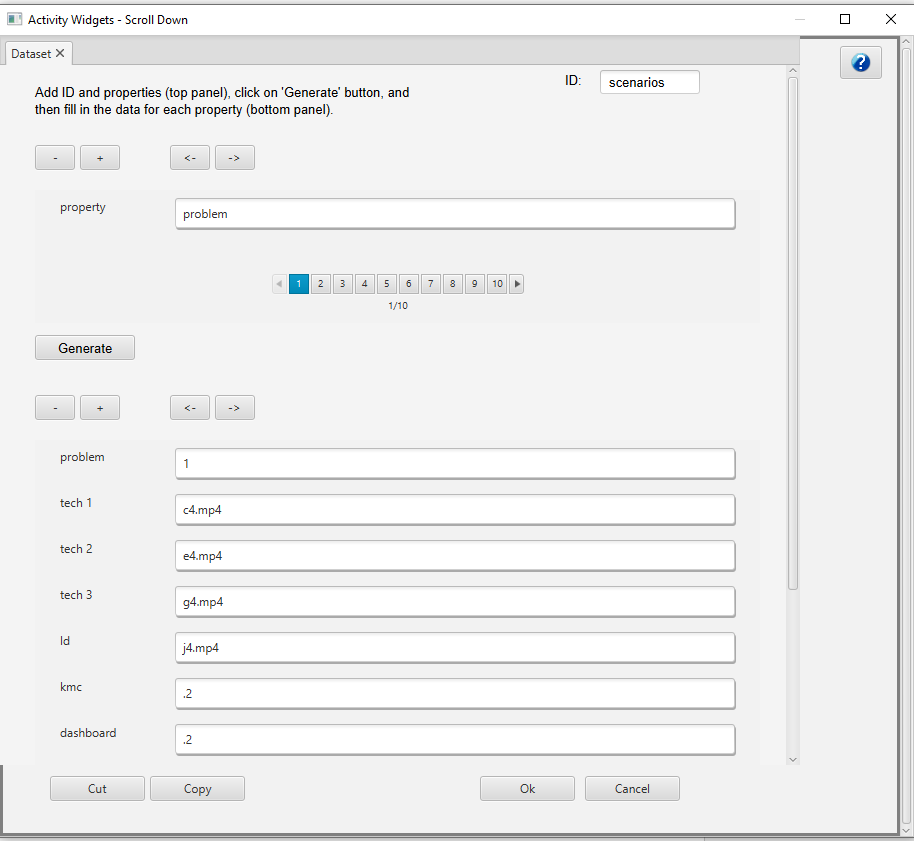

Creating randomly generated scenarios with datasets

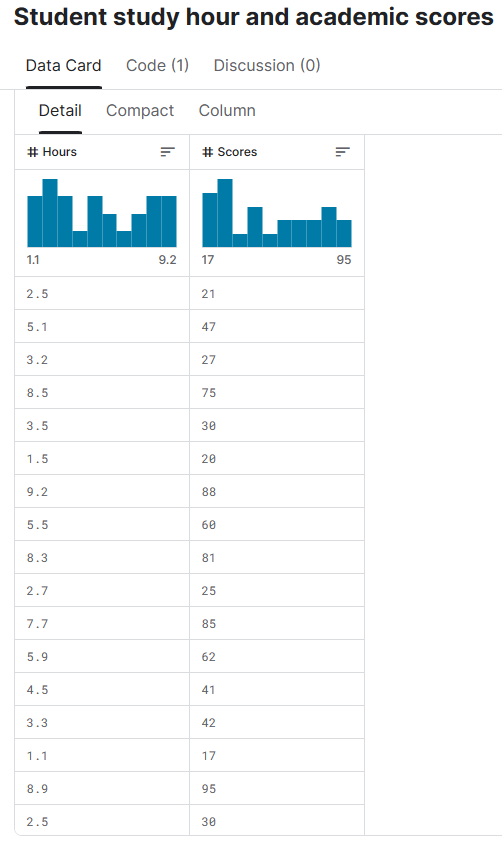

I used the LodeStar Dataset Widget to generate data. I first defined the properties or data fields (upper part), clicked the ‘Generate’ button to create the dataset and then filled in the values. In the screenshot below, I picked problem 1 and then identified the media that represents what each of the employees will say. Three of the fields also control the level of tool usage that is displayed in the scenario screen.

Scripting Randomization

In my case, LodeStar randomly pulls from the dataset so that these characters say something different each time, providing clues to the root problem. The level of usage of the tools (KMC, Dashboard, and Ticketing system) is also drawn from the dataset.

This is accomplished with a single line:

var scenario = getDataSet("scenarios", "-1");

The -1 causes the engine to randomly select from a group of scenarios. Each subsequent call will return a new dataset from the remaining unused datasets.

LodeStar makes it easy, but the same thing can be accomplished with a script that essentially does these things:

- Describes the scenario data in a machine-readable format

- Adds each scenario to a collection

- Generates an unused random number and uses that to pull a scenario from the collection
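The three steps above can be sketched in plain JavaScript. This is only an illustration of the general technique, not LodeStar's implementation: the field names, media file names, and usage levels are hypothetical stand-ins for whatever the dataset actually holds, and `createScenarioPicker` is a name invented for this example.

```javascript
// Step 1: describe each scenario as machine-readable data.
// All field names and values here are hypothetical placeholders.
const scenarios = [
  { id: 1, technicianMedia: "tech-1.mp4", dispatcherMedia: "dispatch-1.mp4",
    kmcUsage: "low", dashboardUsage: "high", ticketingUsage: "high" },
  { id: 2, technicianMedia: "tech-2.mp4", dispatcherMedia: "dispatch-2.mp4",
    kmcUsage: "high", dashboardUsage: "low", ticketingUsage: "high" },
  { id: 3, technicianMedia: "tech-3.mp4", dispatcherMedia: "dispatch-3.mp4",
    kmcUsage: "high", dashboardUsage: "high", ticketingUsage: "low" },
];

// Steps 2 and 3: keep the scenarios in a collection and hand out
// each one at most once, in random order.
function createScenarioPicker(collection, random = Math.random) {
  const unused = [...collection]; // copy so the source data is untouched
  return function next() {
    if (unused.length === 0) return null; // every scenario has been used
    const index = Math.floor(random() * unused.length);
    return unused.splice(index, 1)[0]; // remove and return the pick
  };
}

const nextScenario = createScenarioPicker(scenarios);
const scenario = nextScenario();
console.log("Selected scenario", scenario.id);
```

Removing each pick from the pool of unused scenarios is what guarantees that a returning learner does not see the same scenario twice until all of them have been drawn.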

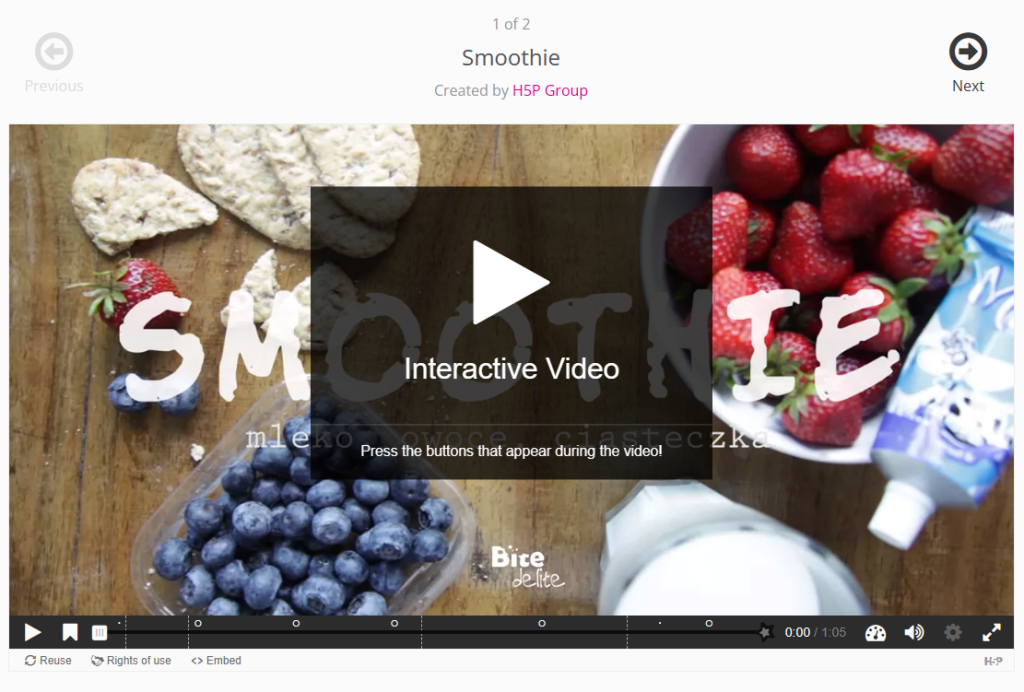

Using Voice Synthesis and Character Puppets

I used voice synthesis and animations to represent the technicians, dispatcher, and Learning and Development director.

I had a little fun with the next step. I added character puppets.

Character puppets are built on rigging, a skeletal structure made of bones and joints. The puppet creator skins the structure with artwork. The more rigging a character has, the more complex and subtle the movements it is capable of.

The puppet artists create the skeletal structure, the joints and the artwork for these puppets as well as the visemes. A viseme defines the formation of the face and mouth while a person is speaking.

Here is where AI comes into play again. AI supports the analysis of the audio file and chooses matching visemes. In short, lip synching. In the past, there were several hokey tools that attempted lip synching. In the 90s when I lip synched characters to recorded speech, I needed to painstakingly morph visemes in a complex tool like 3D Studio and its predecessor. Today, the technology has improved and the effect is appearing less and less mechanical. In the coming years, along with the use of avatars, this technique will be more and more refined.

Displaying randomly generated elements

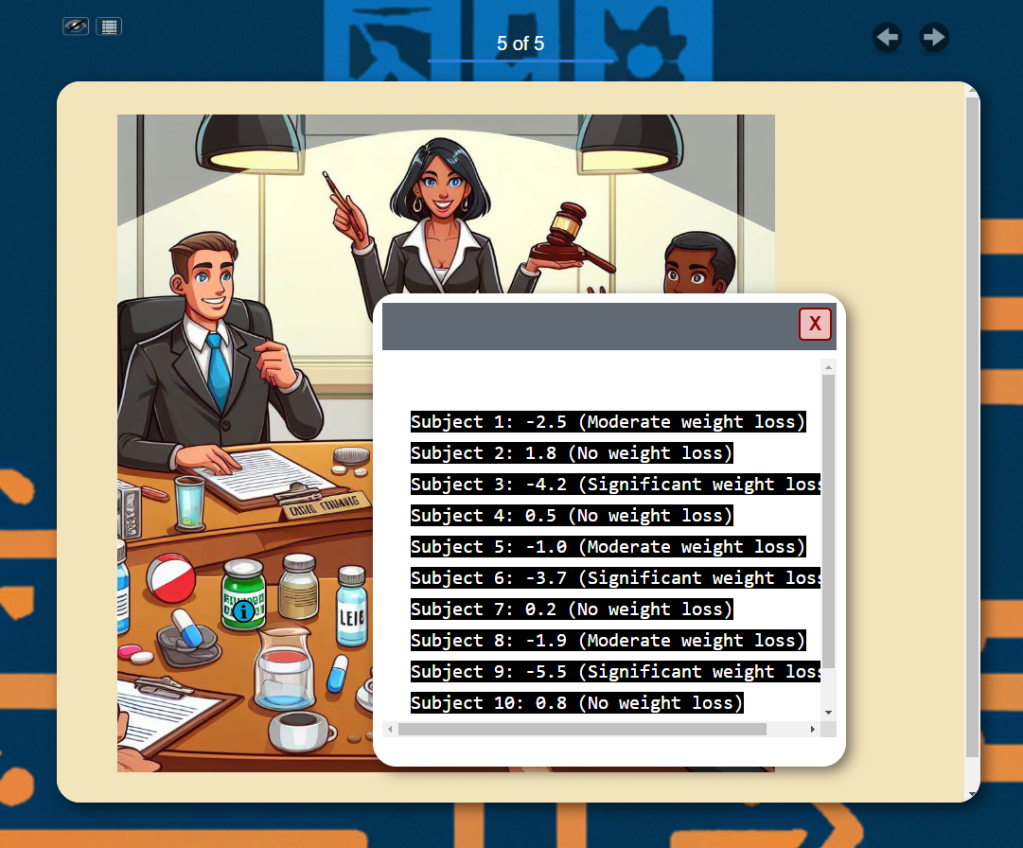

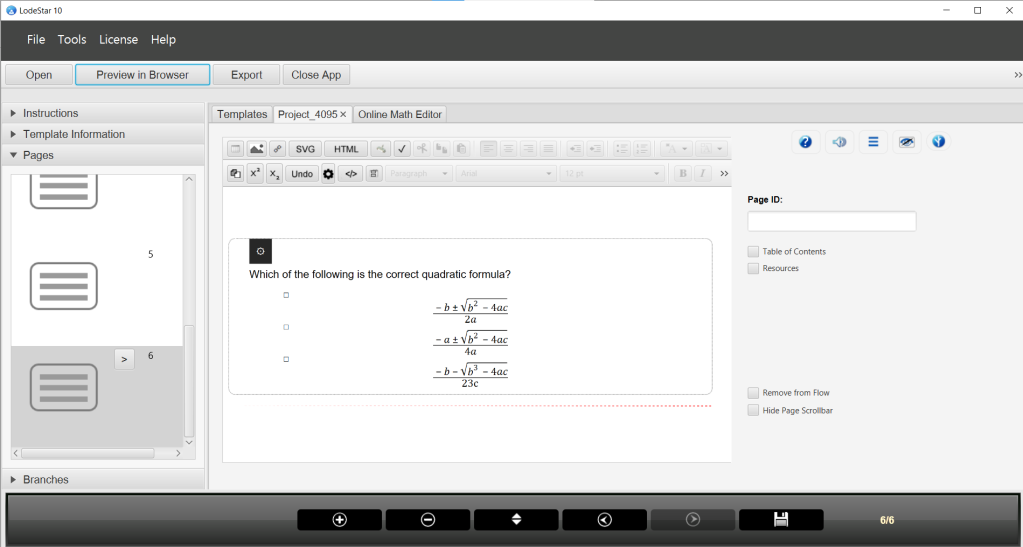

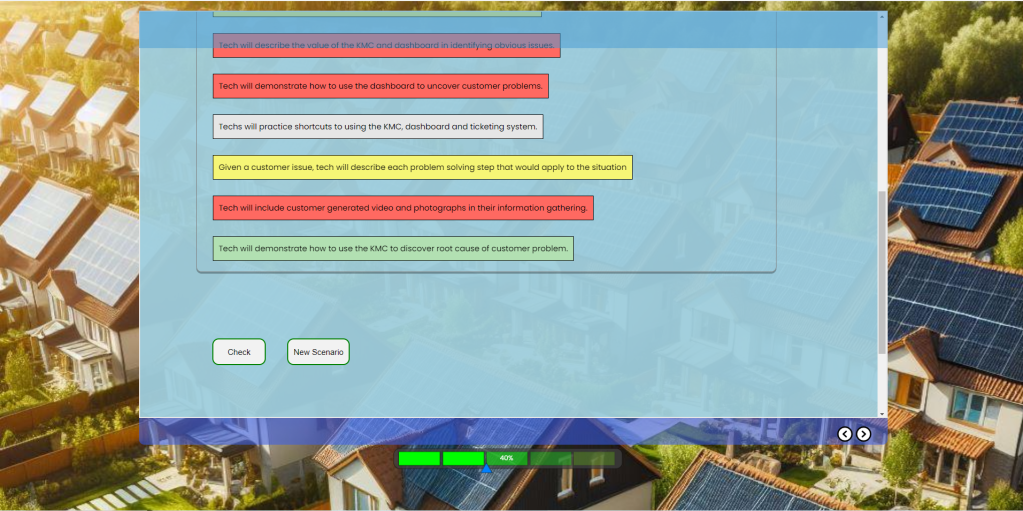

I displayed a list of learning outcomes in random order. I will challenge learners to choose the top five outcomes that address the underlying training problem out of a list of 20. Their answers are evaluated based on the current scenario and the outcomes that are listed in the dataset. An outcome might be correct in one scenario and completely wrong in another. Just like real life. Also, as in real life, some of the information provided by the employees might be misleading. One technician may be performing correctly while the others may lack the knowledge, opportunity, or motivation to perform correctly.
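One common way to present a list in random order is a Fisher-Yates shuffle. The sketch below is generic JavaScript, not LodeStar Script, and the outcome labels are placeholders rather than the outcomes used in the prototype.

```javascript
// Return a shuffled copy of the list (Fisher-Yates), leaving the original intact.
function shuffle(items, random = Math.random) {
  const copy = [...items];
  for (let i = copy.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1)); // random index in 0..i
    [copy[i], copy[j]] = [copy[j], copy[i]];  // swap the two positions
  }
  return copy;
}

// Placeholder labels standing in for the 20 learning outcomes.
const outcomes = Array.from({ length: 20 }, (_, n) => `Outcome ${n + 1}`);
const displayedOutcomes = shuffle(outcomes);
console.log(displayedOutcomes.slice(0, 5));
```

Shuffling a copy keeps the original order available, which is convenient when the same list must be matched against the dataset later.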

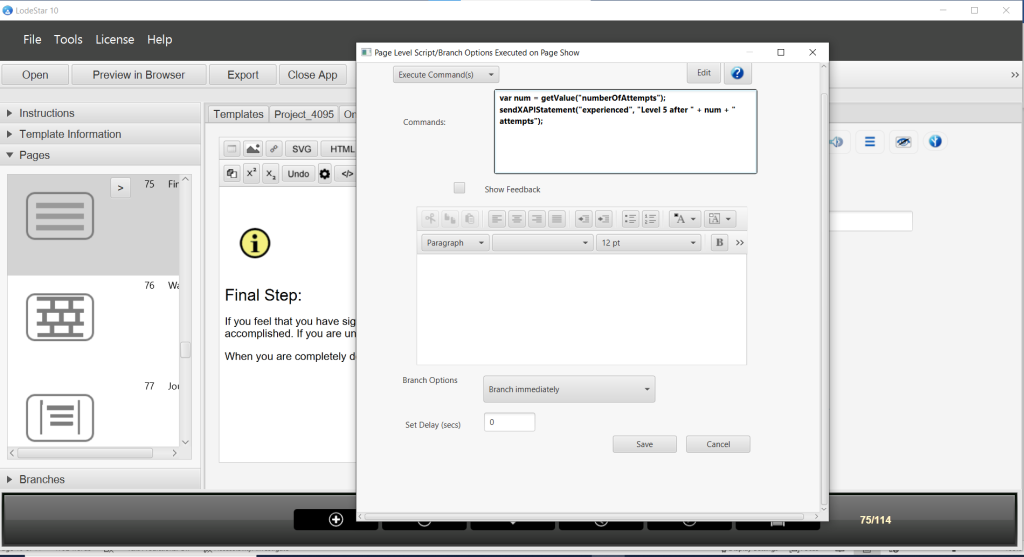

Coding for greater control

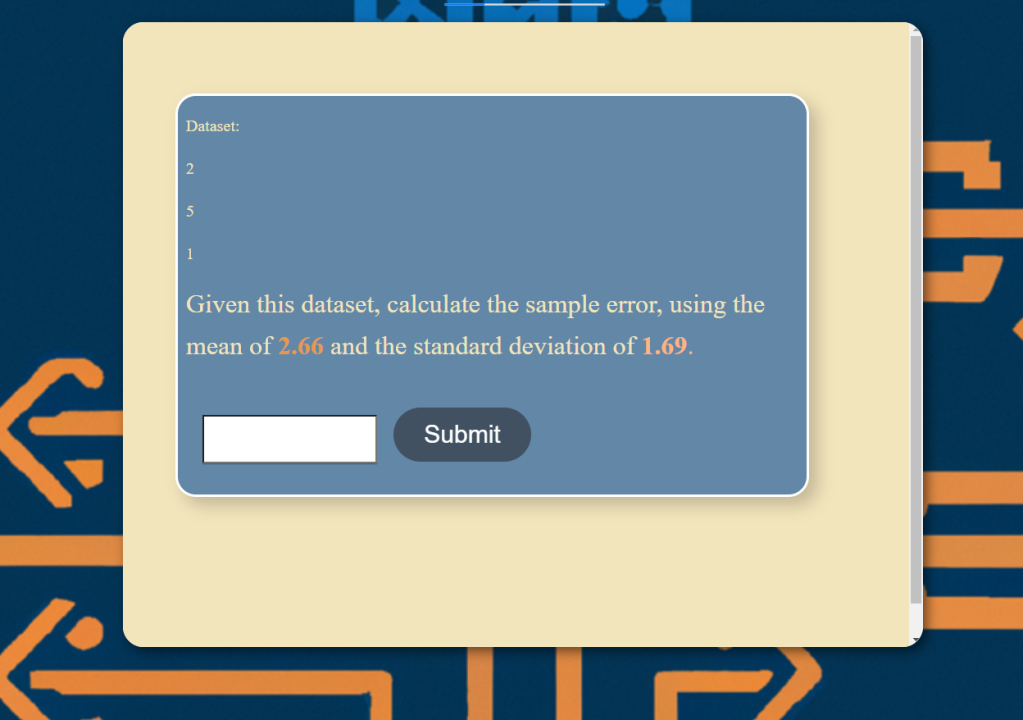

I added code (simple script) to evaluate the learner’s answers based on the scenario rather than answers that are hard-coded to be right or wrong.

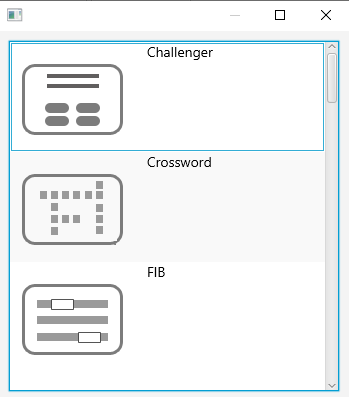

LodeStar provides a simple scripting language that makes it fairly easy to evaluate the learner’s answers. For designers who wish to avoid scripting altogether, LodeStar offers a Gate page type and branching options. The branch can direct learners to a batch of questions and answer options that are aligned to the scenario.

The use of simple scripting enables the designer to create one engine that will evaluate learner choices based on the scenario. The use of branching enables the designer to create sets of questions and match those sets to each scenario.

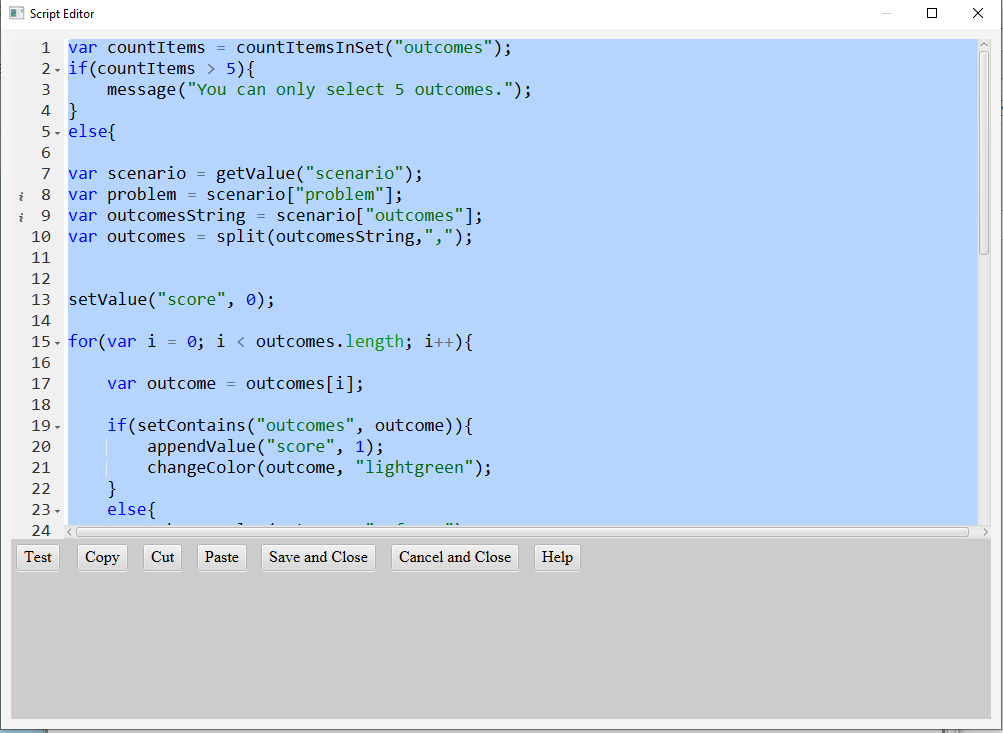

In the example of simple scripting, the code would do the following:

- Ensure that the learner only selected five outcomes.

- Retrieve the dataset and parse out the right learning outcomes for that dataset.

- Loop through the outcomes and check if each outcome is contained in the set of the learner’s choices. If yes, add to the score and change the displayed outcome to green.
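As a rough illustration of these steps in plain JavaScript (not LodeStar Script), the function below checks the number of selections, compares them with the correct outcomes for the current scenario, and returns the matches so that a display layer could turn them green. The function name, the return shape, and the single-letter outcome labels are assumptions made for this sketch.

```javascript
// Evaluate the learner's choices against the outcomes that are correct
// for the current scenario. Names and return shape are hypothetical.
function evaluateChoices(chosen, correctOutcomes, required = 5) {
  // Step 1: the learner must select exactly the required number of outcomes.
  if (chosen.length !== required) {
    return { valid: false, score: 0, matched: [] };
  }
  // Steps 2 and 3: loop through the correct outcomes for this scenario
  // and record each one that appears among the learner's choices.
  const chosenSet = new Set(chosen);
  const matched = [];
  for (const outcome of correctOutcomes) {
    if (chosenSet.has(outcome)) {
      matched.push(outcome); // a display layer could color these green
    }
  }
  return { valid: true, score: matched.length, matched };
}

// Placeholder labels: in the prototype these would come from the dataset.
const correctForScenario = ["A", "B", "C", "D", "E"];
const learnerChoices = ["A", "B", "C", "X", "Y"];
const evaluation = evaluateChoices(learnerChoices, correctForScenario);
const percentage = (evaluation.score / correctForScenario.length) * 100;
console.log(evaluation.matched, percentage);
```

Because the correct outcomes are passed in rather than hard-coded, the same function serves every scenario.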

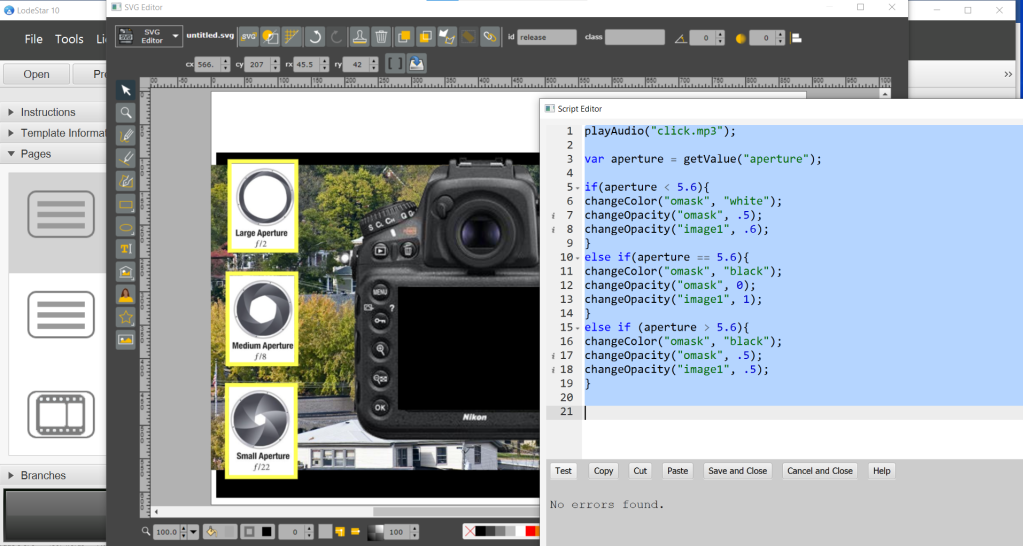

Here is an example of LodeStar Script accomplishing the above. LodeStar Script follows JavaScript in the way that it declares variables, evaluates conditional statements, and loops through an array. But LodeStar Script is not JavaScript. It features high level functions that make it very easy to perform tasks, change properties, animate, play sound and video, and fifty other things.

Trainers and instructors can start with simple branching and slowly build on their scripting ability to increase their power to generate dynamic simulations that change every time the learner returns to them.

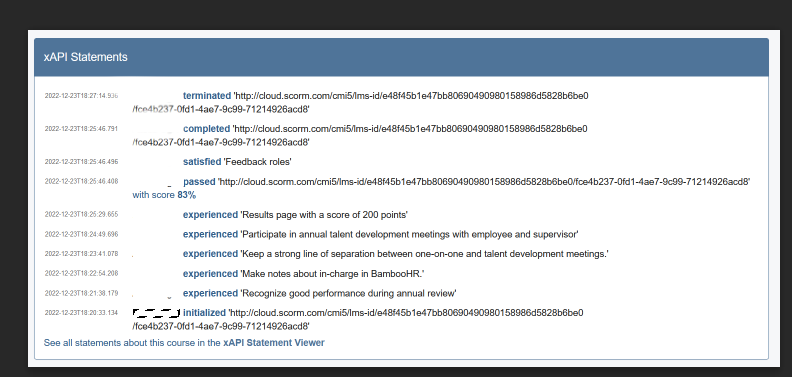

LodeStar Script also supports the generation of xAPI statements, as do the branching options.

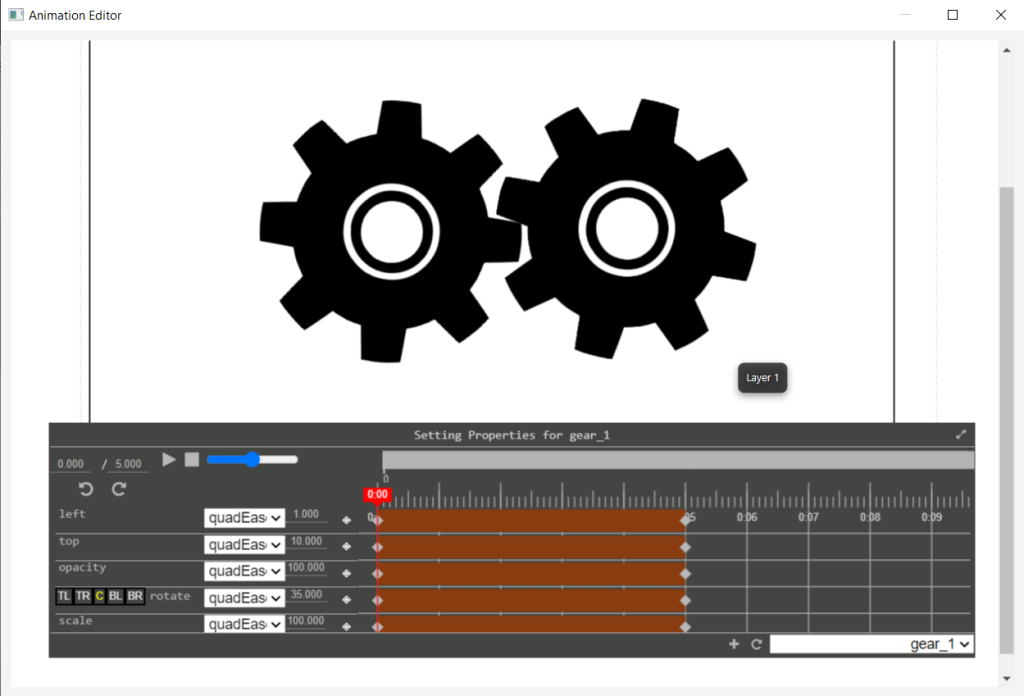

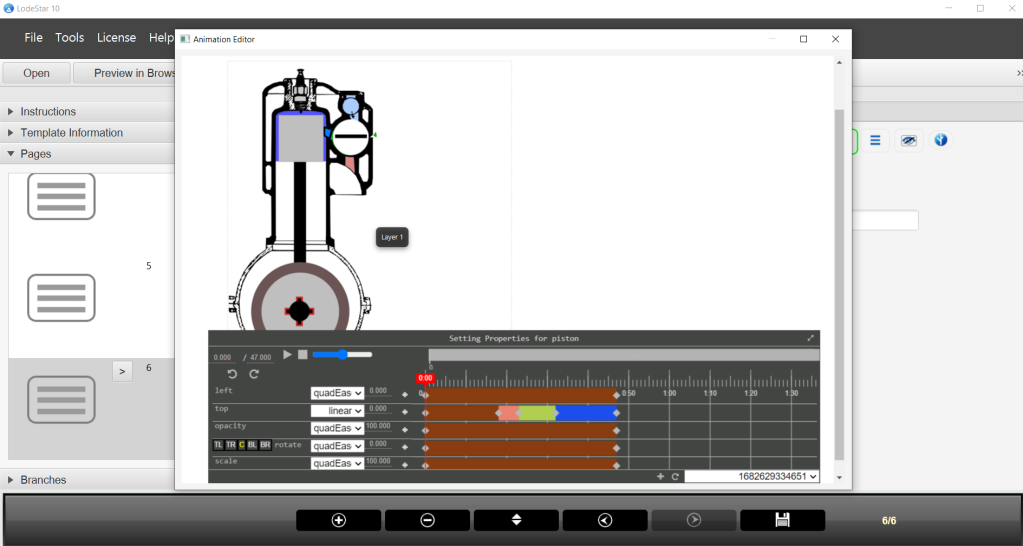

Controlling animations programmatically

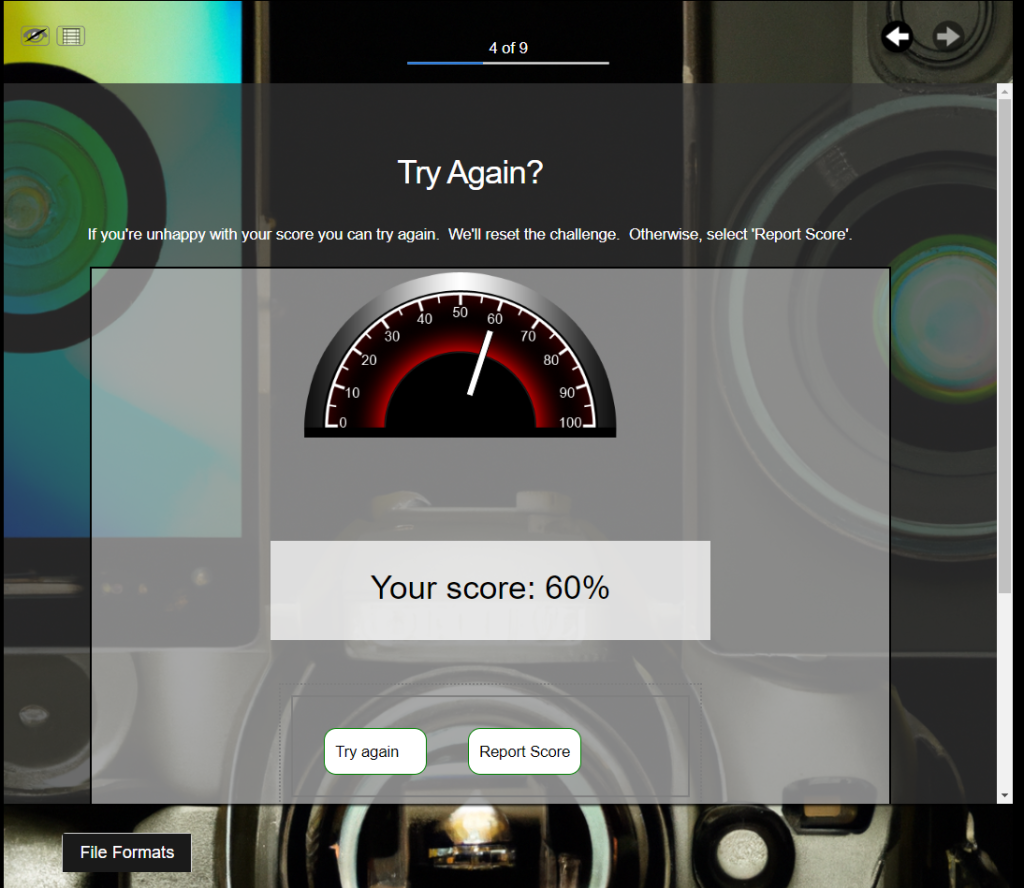

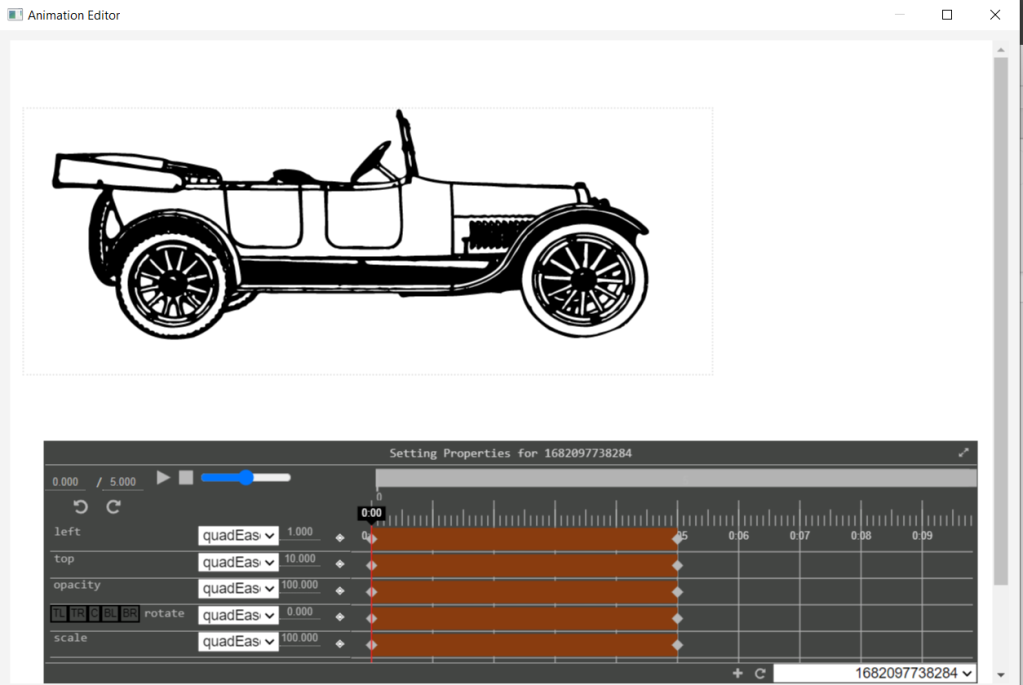

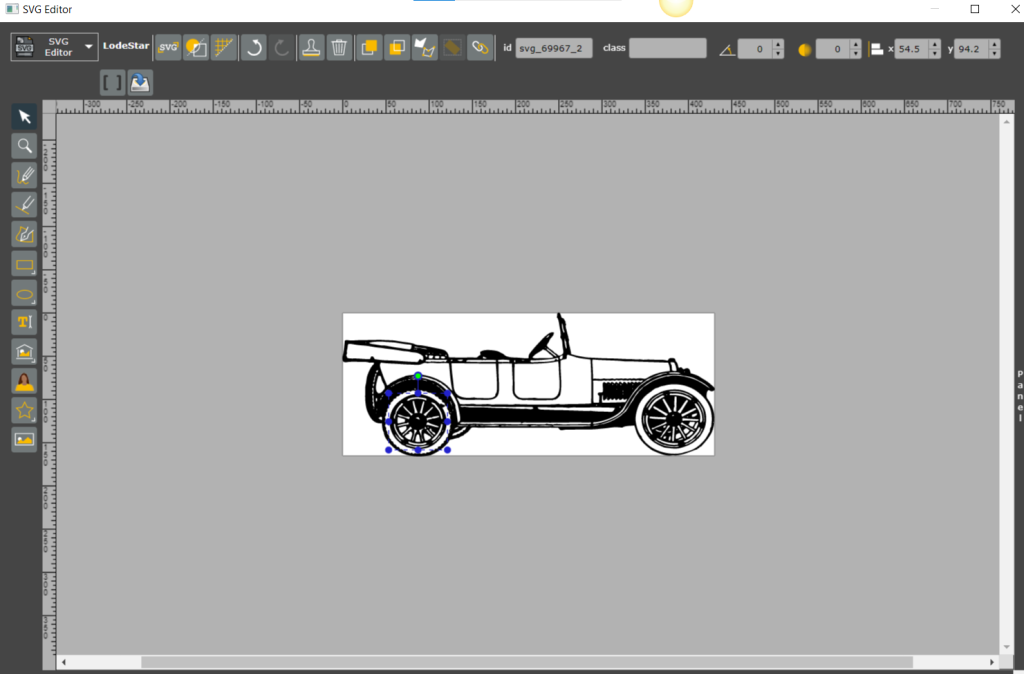

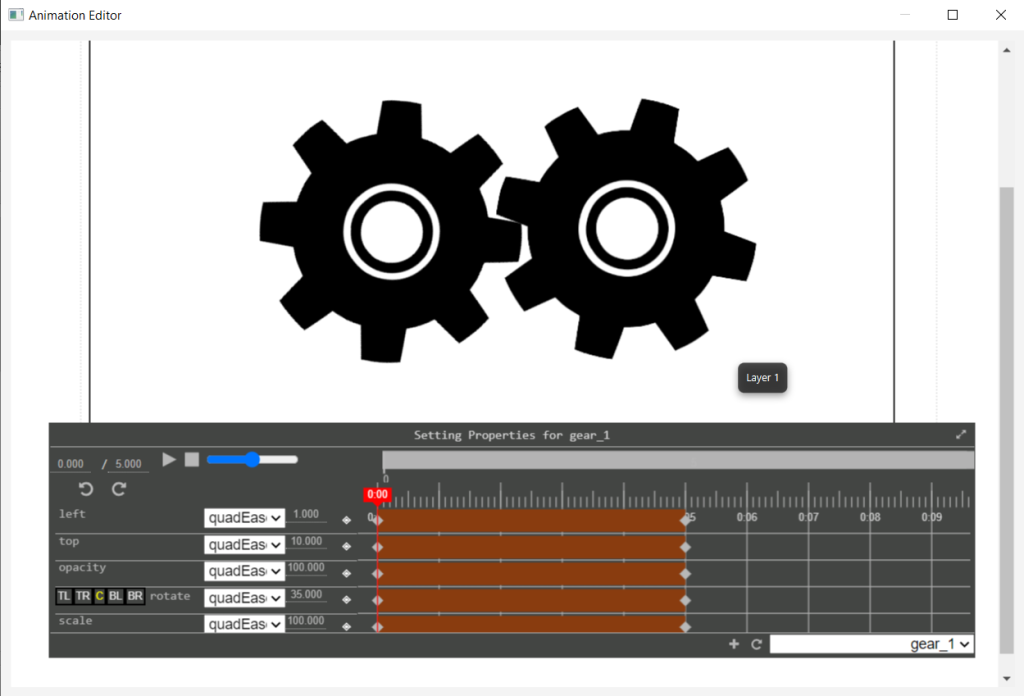

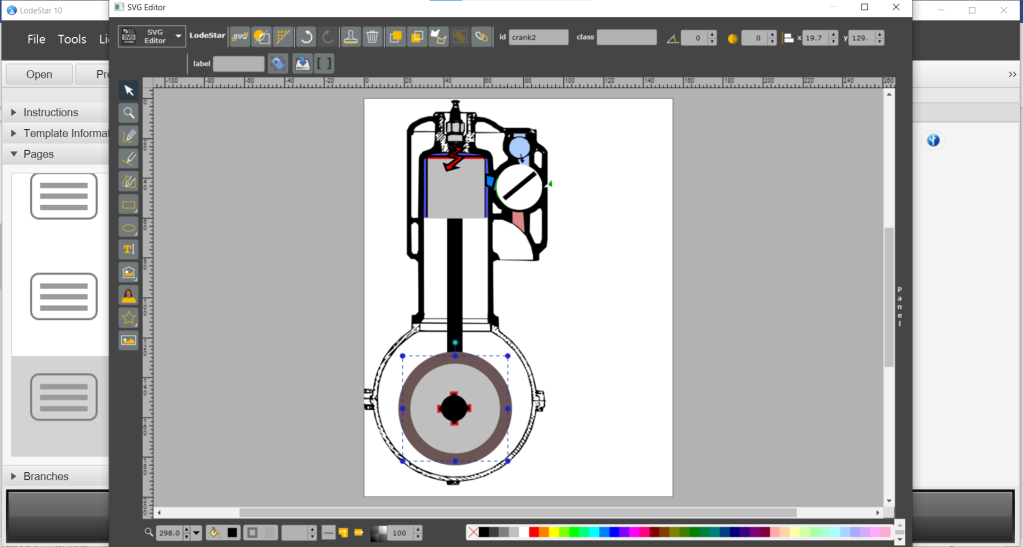

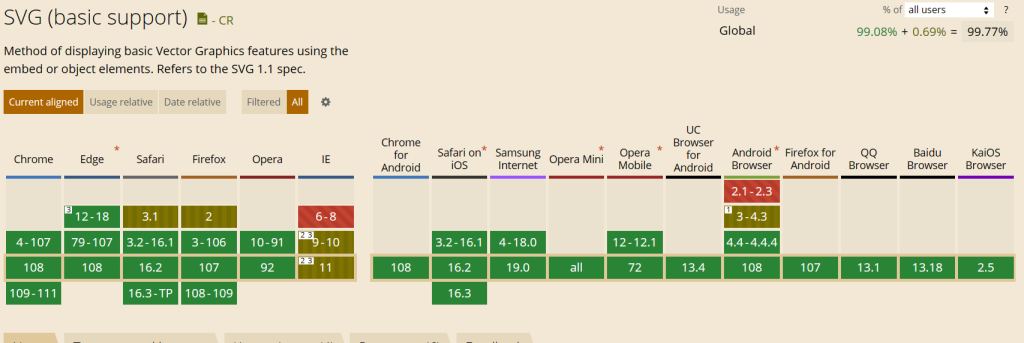

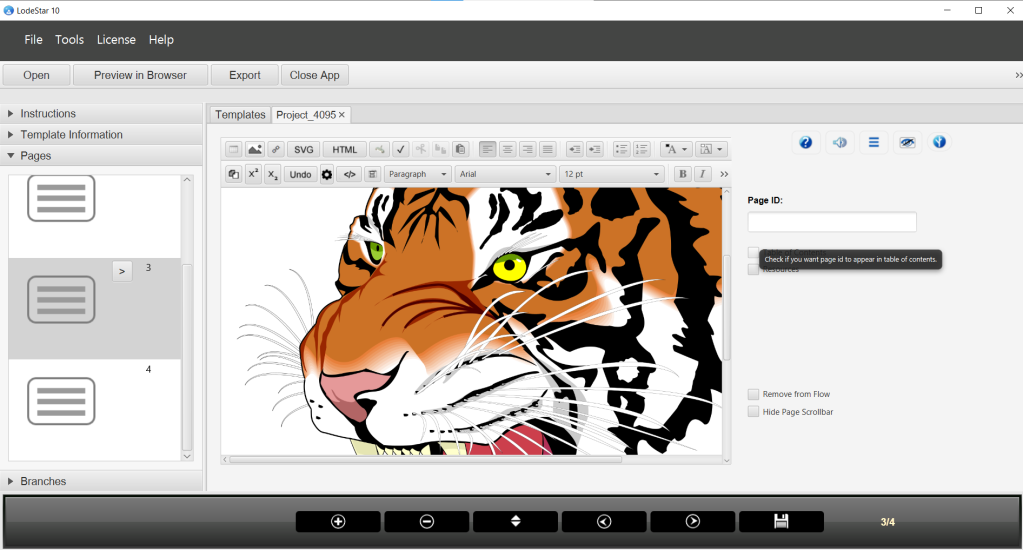

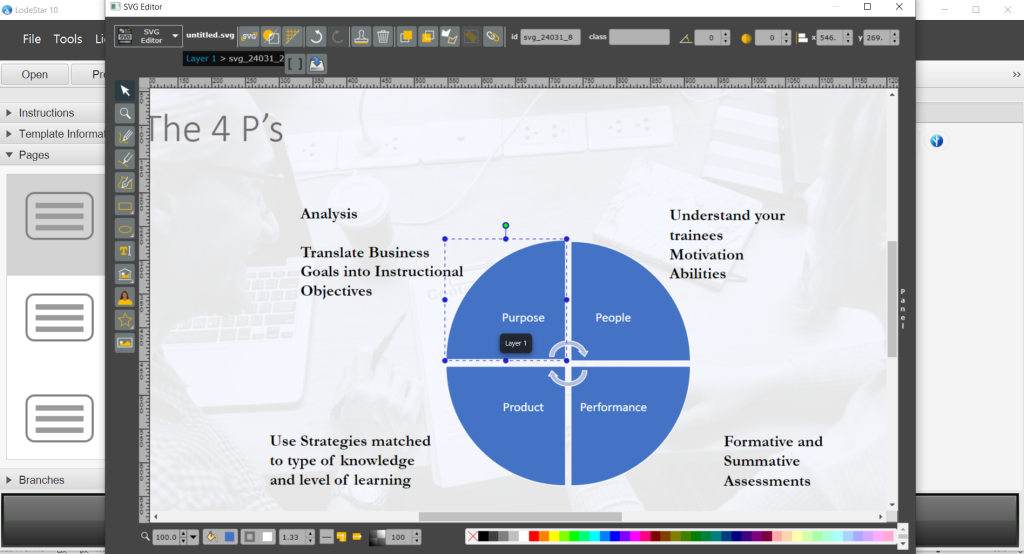

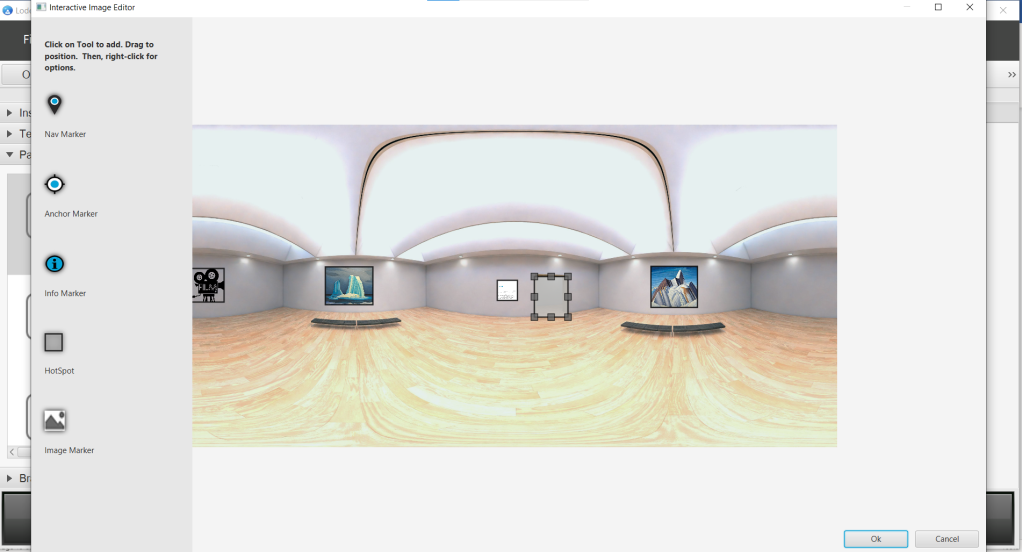

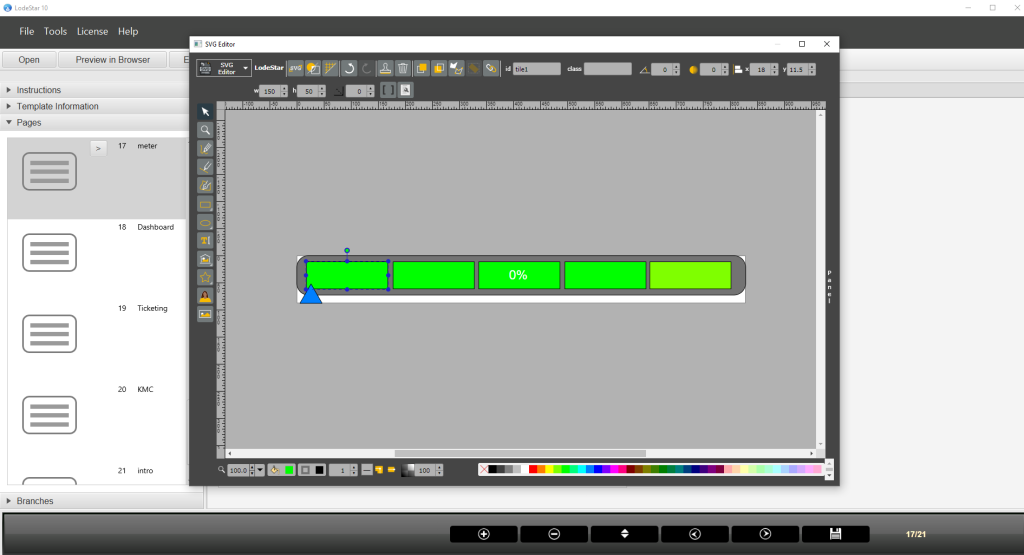

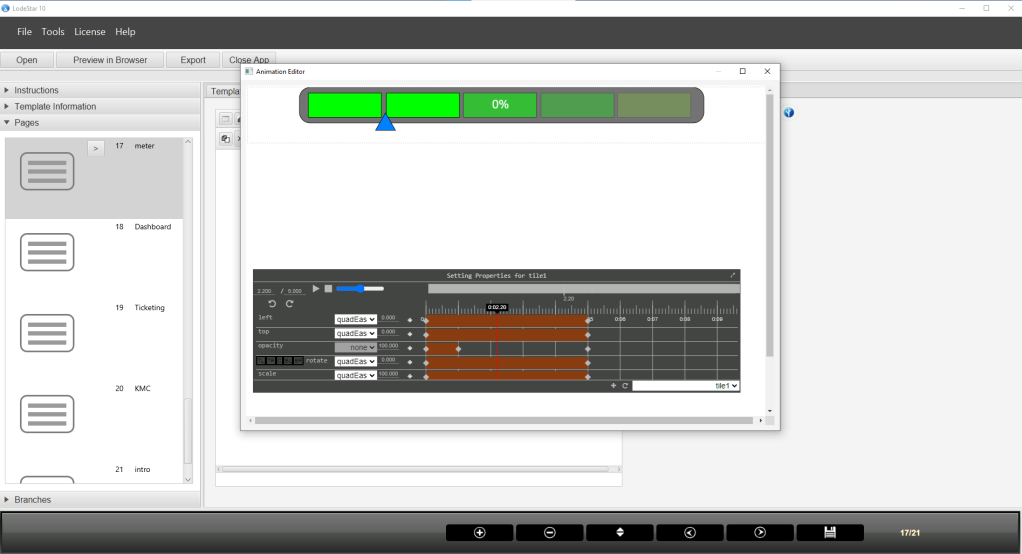

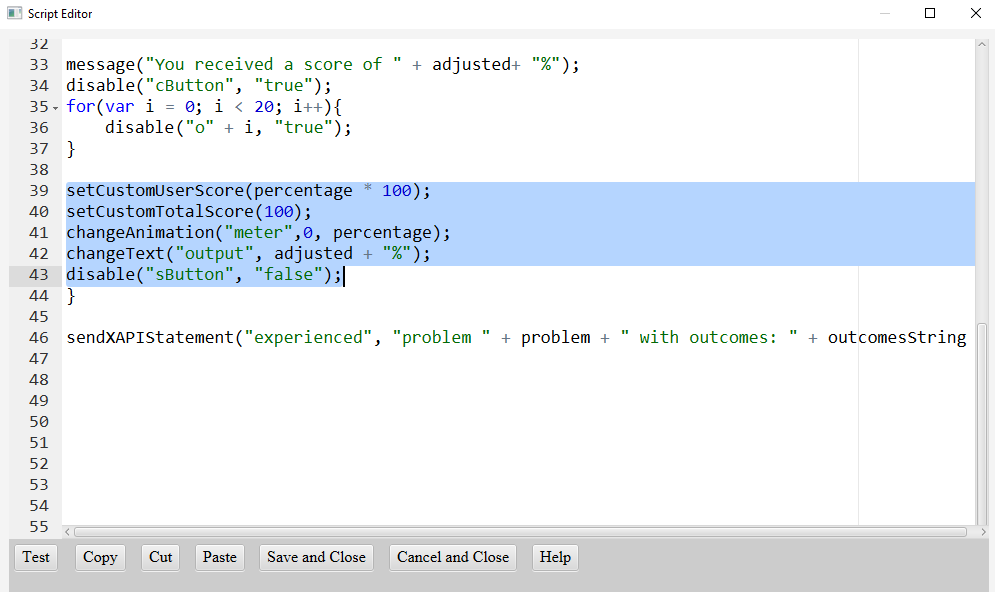

In my simulation, I wanted a visual indicator of how the learner was performing. I created a meter with the SVG editor, an animation timeline with the animation editor, and then controlled the animation with some script. I also programmatically controlled the usage graphs for each of the tools – again, based on the dataset.

Simulations are often supported with animation that can be programmatically controlled. The following screens show how I did this in LodeStar.

First, I created the meter with five bars in the SVG editor. I also added a triangle indicator.

Then I opened the animation editor and changed the timeline for each bar. I made the first bar fully opaque at 1 second, the second bar at 2 seconds and so on.

If a learner performed at 60 percent, the first three bars would be fully opaque. The triangle indicator would appear just right of center.
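The arithmetic behind the meter can be sketched as follows. This is plain JavaScript written for illustration, assuming five bars that each represent 20 percent and a timeline in which one more bar becomes fully opaque each second; `meterState` is a hypothetical helper, not a LodeStar function.

```javascript
// Map a percentage score to the number of fully opaque bars and to the
// matching position on a timeline where bar n is opaque at n seconds.
function meterState(percentage, barCount = 5) {
  const clamped = Math.min(100, Math.max(0, percentage)); // keep within 0..100
  const percentPerBar = 100 / barCount;                   // 20 percent per bar
  return {
    opaqueBars: Math.floor(clamped / percentPerBar),
    playheadSeconds: clamped / percentPerBar,
  };
}

const state = meterState(60);
console.log(state.opaqueBars, state.playheadSeconds); // 3 bars, 3 seconds
```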

Now, I needed to control the animation playhead. Once I evaluated the learner’s (user’s) score, I did the following:

- Set the system score for the user.

- Set the total score for the user.

- Move the animation playhead to the 60 percent mark with the following function: changeAnimation("meter", 0, percentage)

- Change the text to show 60 percent.

- Disable the check button.

- Send an xAPI statement to the learning record store that states what outcomes the learner chose for the given dataset problem.
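For readers unfamiliar with xAPI, the sketch below shows roughly what such a statement could contain. It is plain JavaScript that only builds the statement object; it does not show how LodeStar composes or sends its statements. The learner details, the activity IRI under example.com, and the `buildOutcomeStatement` helper are all hypothetical.

```javascript
// Build an xAPI statement describing which outcomes the learner chose.
// Actor, activity IRI, and helper name are illustrative assumptions.
function buildOutcomeStatement({ learnerName, learnerEmail, scenarioId,
                                 chosenOutcomes, score, maxScore }) {
  return {
    actor: {
      objectType: "Agent",
      name: learnerName,
      mbox: `mailto:${learnerEmail}`,
    },
    verb: {
      id: "http://adlnet.gov/expapi/verbs/answered",
      display: { "en-US": "answered" },
    },
    object: {
      objectType: "Activity",
      // Hypothetical activity identifier for the scenario.
      id: `https://example.com/simulations/solar-support/scenarios/${scenarioId}`,
    },
    result: {
      response: chosenOutcomes.join("; "), // the outcomes the learner picked
      score: { raw: score, min: 0, max: maxScore, scaled: score / maxScore },
    },
  };
}

const statement = buildOutcomeStatement({
  learnerName: "Sample Learner",
  learnerEmail: "learner@example.com",
  scenarioId: 1,
  chosenOutcomes: ["A", "B", "C", "X", "Y"],
  score: 3,
  maxScore: 5,
});
console.log(JSON.stringify(statement, null, 2));
```

Recording the scenario in the activity identifier and the chosen outcomes in the response lets an instructor later see which choices learners made for each root problem.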

Conclusion

Simulations are an effective tool to engage your audience and promote learning. With artificial intelligence, we can research and write content, generate images, synthesize speech, and synchronize speech to lip movements. With advances in HTML standards baked into authoring tools like LodeStar, we can generate datasets and randomly draw from them. With advances in standards, we can create SVG graphics, animate them, and control the animations programmatically.

The biggest challenge is in finding authors who are willing to learn these techniques and use them to engage learners. Thinking back on Christopher Lind and Michael Vaughn’s comments, we can become more than information architects. We can become learning experience designers.

References

1. Future-Focused with Christopher Lind: https://christopherlind.substack.com/

2. Paul Matthews: https://paul-matthews.com/

3. Metropolitan State University, Learner Experience Design Graduate Certificate Program: https://www.metrostate.edu/academics/programs/learner-experience-design-graduate#about