Robert N. Bilyk

President, LodeStar Learning Corporation

Introduction

LodeStar Learning now supports the import and playback of H5P activities in its LodeStar eLearning authoring tool. This new feature is possible largely because of the ‘openness’ of H5P and its creators.

This article will unpack the importance of this announcement and tease out the instructional technologies that make it possible. I’ll talk about H5P, its openness and its current uses, and briefly describe the related technologies. Then I’ll dig into how you can use LodeStar and H5P together.

H5P: What is it?

In their introduction to H5P, the H5P Group (Joubel) acknowledge that

‘There are lots of barriers for creating, sharing, and reusing interactive content. There are copyright issues, technical issues, complex authoring tools and huge problems with compatibility between different types of content formats, authoring tools and publishing plattforms. H5P is breaking down these barriers.’ 1

We’ve heard altruistic rhetoric before from Ed Tech vendors but, in the case of H5P, their claims hold water. The H5P Group has truly broken down a lot of barriers and that’s evident in the open nature of their practices.

For those new to H5P, here is a quick background:

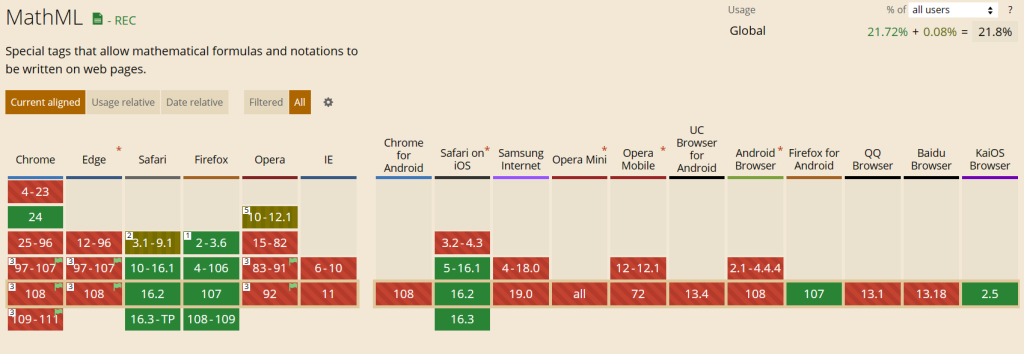

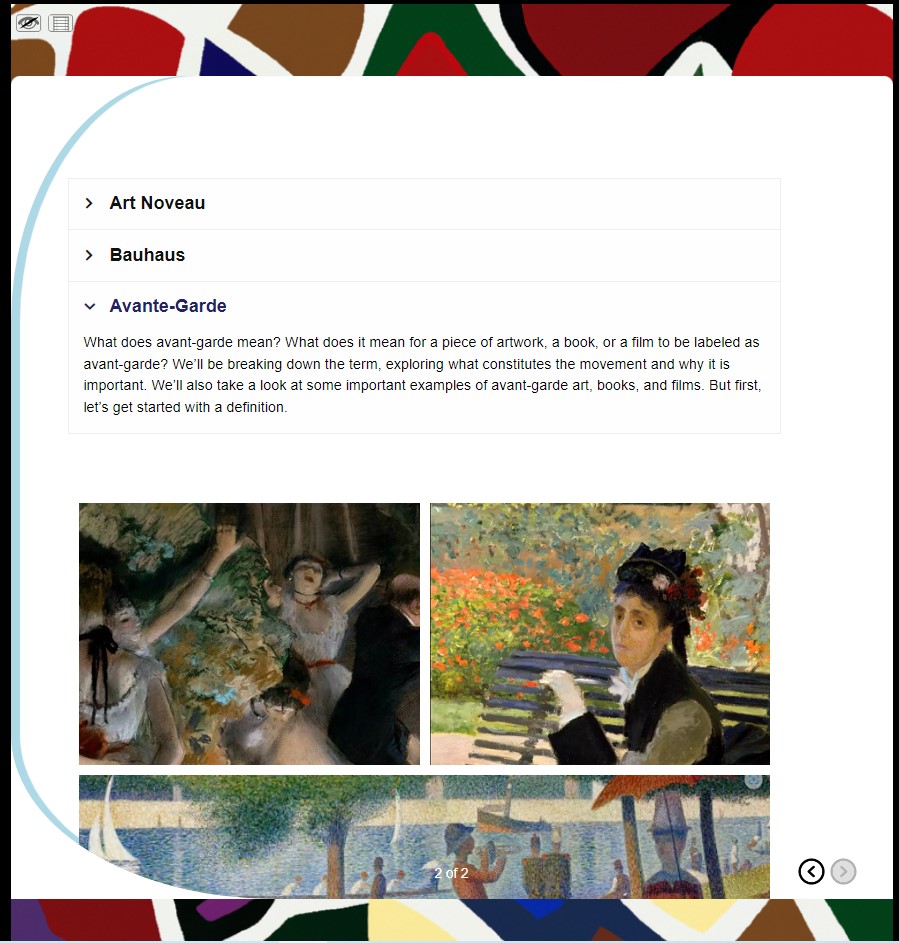

H5P is interactive HTML5 content presented as quizzes, timelines, accordions, videos, drag and drop, ordering, interactive videos and more: in total, more than fifty different activities.

H5P playback is supported by plugins for Drupal, Moodle, WordPress and other platforms. H5P content can be hosted on H5P.com, a paid software as a service (SaaS) offering, or, with some limitations, authored and hosted for free on H5P.org.

Many universities subscribe to H5P.com and support a Learning Tools Interoperability (LTI) connection to their learning management system (LMS). If you’re new to the concept of LTI, don’t worry. Your LMS administrator will be well versed in that technology. It is the primary way that learning management systems integrate publisher material and other add-ons.

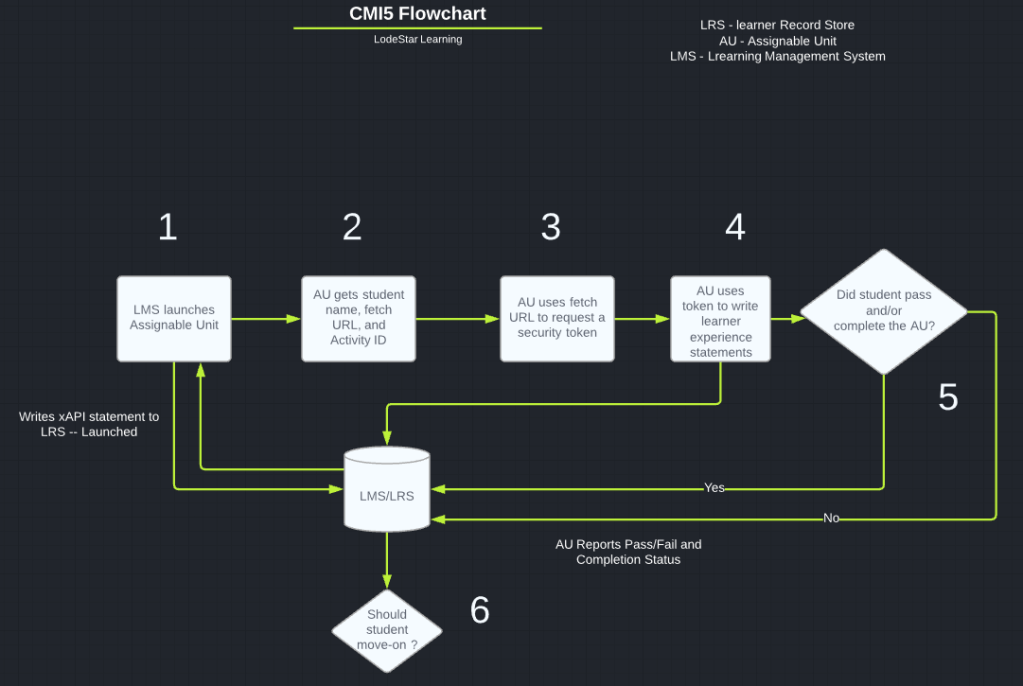

H5P captures and communicates learner performance through xAPI. (I’ll describe xAPI in more detail in the next section.) As of this writing, H5P.com doesn’t include a Learning Record Store (LRS) to capture the xAPI statements, but it passes those statements to platforms that may have their own LRS.

About the connecting technologies

If you’re new to the concept of xAPI, H5P is a great way to learn more. For more than twenty years, authoring systems like LodeStar, Storyline and Captivate have sent learner performance data to their host learning management systems through SCORM. SCORM is a set of specifications that allow authoring systems to a) package content so that it will run in a variety of LMSs, b) capture learner performance in predefined data categories and send that information to the LMS, and c) sequence content.

xAPI, on the other hand, is a far more versatile specification. First, the activity does not need to be housed in the learning management system. It can sit in a repository and communicate learner performance data from anywhere on the internet. Most importantly, xAPI can capture and send anything about the learner’s performance, not just what fits in predefined data categories. xAPI can record that the learner experienced, attempted, attended, answered, started, completed, passed, interacted with or created something, and more. And, of course, xAPI can capture and transmit details of how the learner performed.
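To make the idea concrete, here is a minimal Python sketch of a single xAPI statement (actor, verb, object and result) and of the HTTP request a platform might use to store it in an LRS. The verb IRIs under `http://adlnet.gov/expapi/verbs/`, the `statements` resource and the `X-Experience-API-Version` header come from the xAPI specification. The helper names, the learner address, the activity IRI, the LRS endpoint and the Basic-auth credentials are hypothetical placeholders, and this is not how H5P or LodeStar themselves transmit statements.

```python
import base64
import json
import urllib.request

# Verb IRIs from the ADL vocabulary, e.g. .../verbs/answered, .../verbs/completed
ADL_VERBS = "http://adlnet.gov/expapi/verbs/"


def make_statement(learner_email: str, verb: str, activity_id: str,
                   raw: float, max_score: float) -> dict:
    """Build a minimal xAPI statement: who did what, to what, with what result."""
    return {
        "actor": {"objectType": "Agent", "mbox": f"mailto:{learner_email}"},
        "verb": {"id": ADL_VERBS + verb, "display": {"en-US": verb}},
        "object": {"objectType": "Activity", "id": activity_id},
        "result": {
            # scaled is computed as raw / max here; xAPI allows -1 to 1
            "score": {"raw": raw, "min": 0, "max": max_score,
                      "scaled": raw / max_score},
        },
    }


def lrs_request(endpoint: str, user: str, password: str,
                statement: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST of one statement to an LRS."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/statements",
        data=json.dumps(statement).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-Experience-API-Version": "1.0.3",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


# Hypothetical learner, activity and LRS. Passing the request to
# urllib.request.urlopen() would actually send it.
statement = make_statement("learner@example.com", "answered",
                           "https://example.com/activities/question-3", 0, 1)
request = lrs_request("https://lrs.example.com/xapi", "key", "secret", statement)
```

Because the `result` object is open-ended JSON, the same statement shape can also carry fields such as `response`, `duration` and `extensions`, which is what frees xAPI from SCORM's fixed data categories.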

In short, H5P uses LTI to connect to learning management systems and xAPI to report learner performance.

The openness and modularity of H5P

Much of the code for the H5P activities sits on GitHub and can be viewed publicly. As a result, H5P is being built not only by a single entity but by the instructional community at large.

The H5P architecture is modular. I’ll illustrate the modularity by describing two different activities and their dependencies. The first is a simple accordion display of content. To play back that activity, one needs the core libraries plus the following modules:

- Accordion

- AdvancedText

- Fonts

Not counting the core, these libraries weigh in at under 1 MB.

A sorting exercise is a little more complex. It needs:

- Sort Paragraph

- Fonts

- JoubelUI (for pop-up boxes and other user interface elements)

- Transition

- Question

The two activities together weigh in at 1.6 MB.

When an author imports an H5P activity into LodeStar, LodeStar handles all of the dependencies automatically.

Besides the playback code, an H5P package also includes the code that supports editing the activity. As of this writing, LodeStar only supports the playback of H5P activities and therefore discards the editing code. Authors can easily make changes to their H5P activities in the H5P cloud and re-import them into LodeStar.

The H5P activity follows a specific, open format. The h5p.json file names the main library of code and lists all of its dependencies (code that the main library needs). A content folder holds media such as images, along with the content.json file that stores the data loaded into the activity. In the case of a set of questions, content.json holds each question stem and all of the answer options.

Together, these elements enable the community of developers to support H5P. The code behind the activities sits in publicly visible GitHub repositories, and the .h5p file format is simply a zip file that follows a specific folder structure and includes the h5p.json and content.json files described earlier.
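Because an .h5p file is an ordinary zip archive, its manifest and content can be read with standard tools. The Python sketch below opens a package, reads `h5p.json` and `content/content.json`, and lists the dependencies and the library folders stored at the top level of the archive. The field names `mainLibrary`, `preloadedDependencies`, `machineName`, `majorVersion` and `minorVersion` follow H5P's package format; the helper names, the `machineName-major.minor` folder naming it assumes, the demo package, its placeholder content and its version numbers are illustrative assumptions, not LodeStar's importer.

```python
import io
import json
import zipfile


def dependency_folder(dep: dict) -> str:
    """Folder name for a library, e.g. 'H5P.Accordion-1.0' (assumed convention)."""
    return f'{dep["machineName"]}-{dep["majorVersion"]}.{dep["minorVersion"]}'


def read_h5p_package(source) -> dict:
    """Summarize an .h5p package given a file path or a binary file object."""
    with zipfile.ZipFile(source) as package:
        manifest = json.loads(package.read("h5p.json"))
        content = json.loads(package.read("content/content.json"))
        # Library folders sit at the top level of the archive, next to content/.
        folders = {name.split("/", 1)[0] for name in package.namelist() if "/" in name}
    return {
        "main_library": manifest["mainLibrary"],
        "dependencies": [dependency_folder(d)
                         for d in manifest.get("preloadedDependencies", [])],
        "library_folders": sorted(folders - {"content"}),
        "content": content,
    }


# Build a tiny in-memory accordion package (placeholder versions and content).
deps = [{"machineName": "H5P.Accordion", "majorVersion": 1, "minorVersion": 0},
        {"machineName": "H5P.AdvancedText", "majorVersion": 1, "minorVersion": 1},
        {"machineName": "FontAwesome", "majorVersion": 4, "minorVersion": 5}]
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("h5p.json", json.dumps(
        {"title": "Demo", "mainLibrary": "H5P.Accordion", "preloadedDependencies": deps}))
    archive.writestr("content/content.json", json.dumps({"panels": []}))
    for d in deps:
        archive.writestr(dependency_folder(d) + "/library.json", json.dumps(d))
buffer.seek(0)

summary = read_h5p_package(buffer)
print(summary["dependencies"])
# → ['H5P.Accordion-1.0', 'H5P.AdvancedText-1.1', 'FontAwesome-4.5']
```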

Downloading an H5P activity

I’ve been at this game for a good number of years. So often I’ve listened to vendors describe the ‘openness’ of their systems or outline an ‘exit’ strategy should you choose to switch away from their platform, only to discover that content is, in effect, trapped.

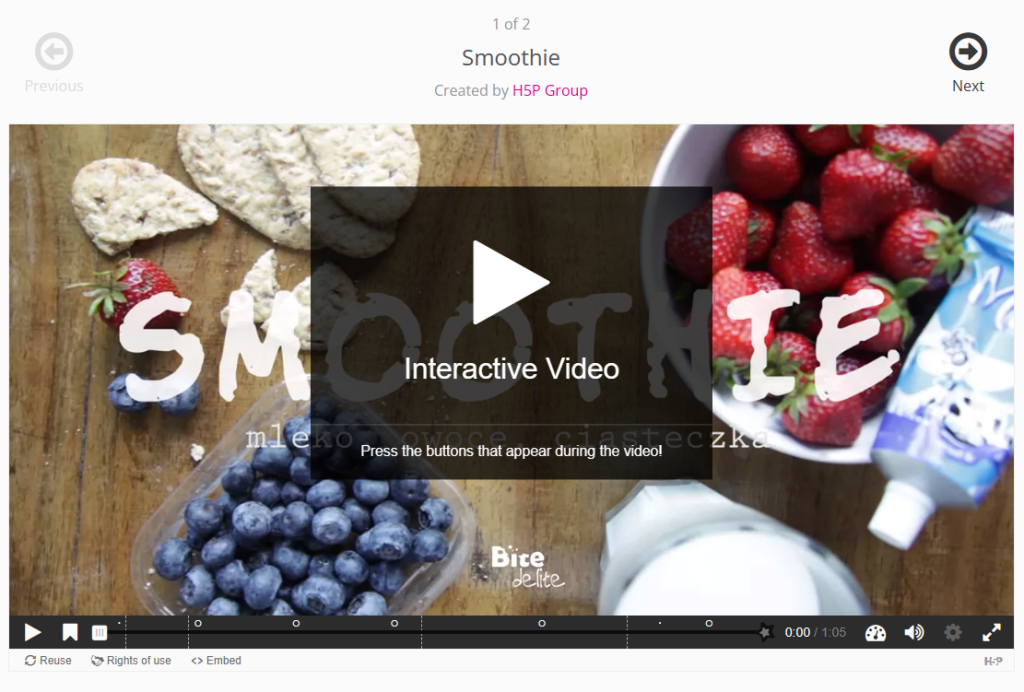

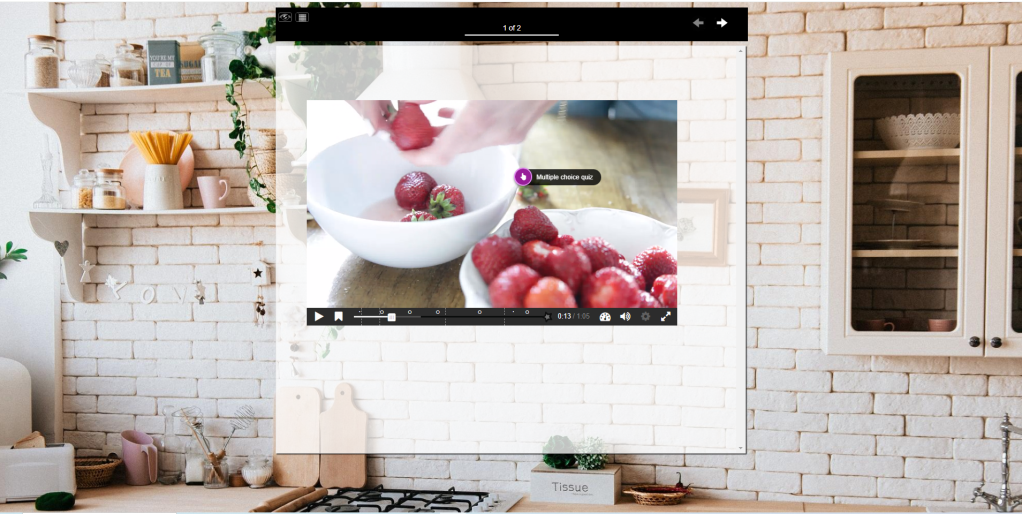

H5P.com and H5P.org offer a ‘Reuse’ button that sits just underneath the content player. In this example, using the Interactive Video activity on the H5P website, clicking the Reuse button opens a dialog that offers the option to ‘Download an .h5p file.’ Again, the .h5p format is simply a zip file that contains all of the code I need.

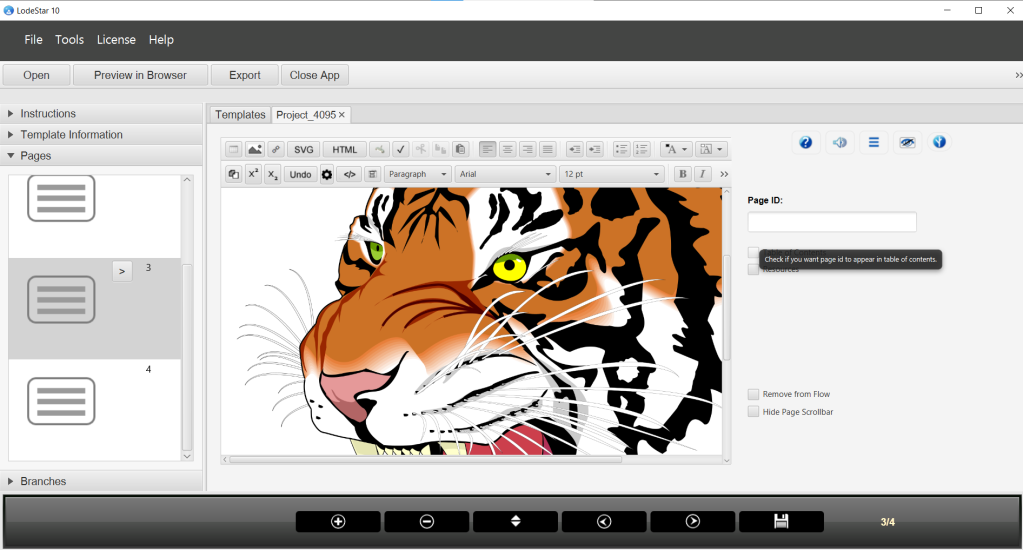

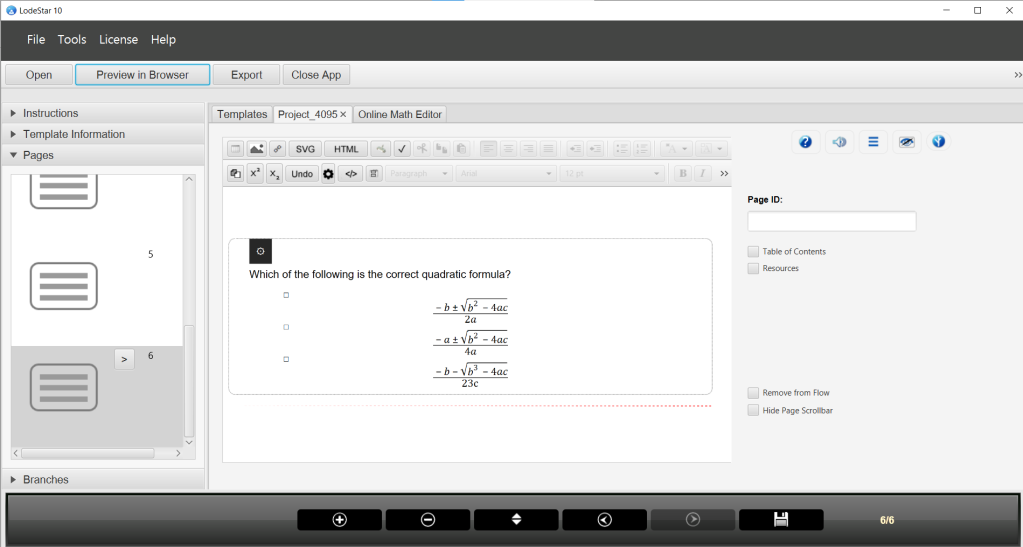

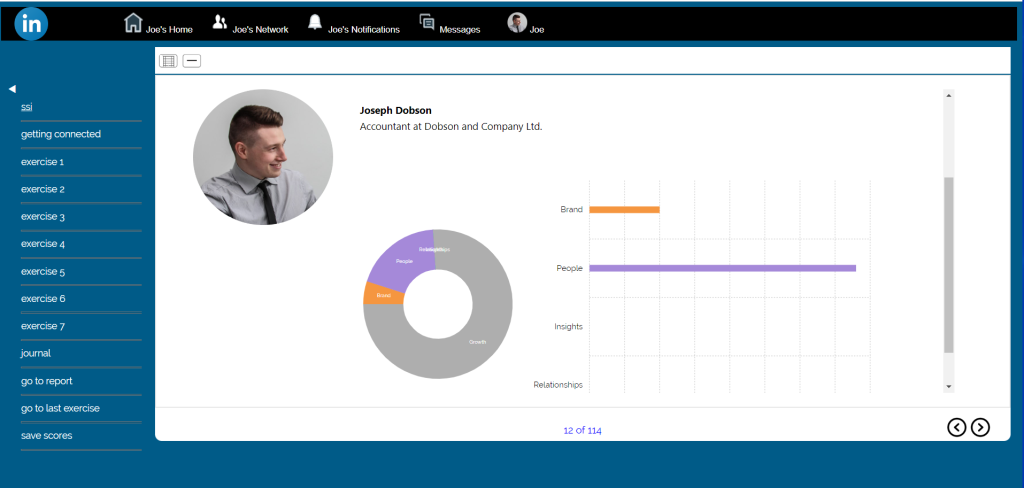

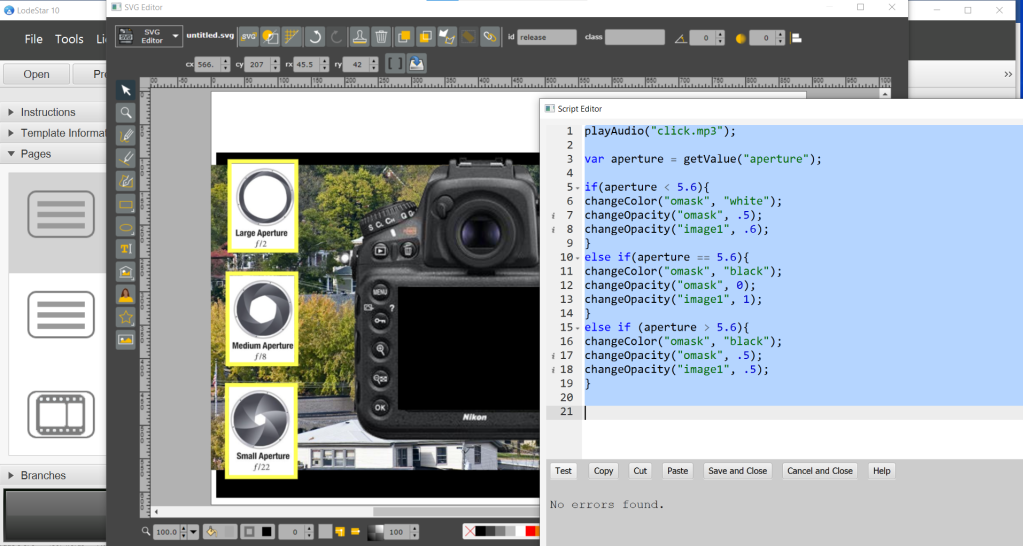

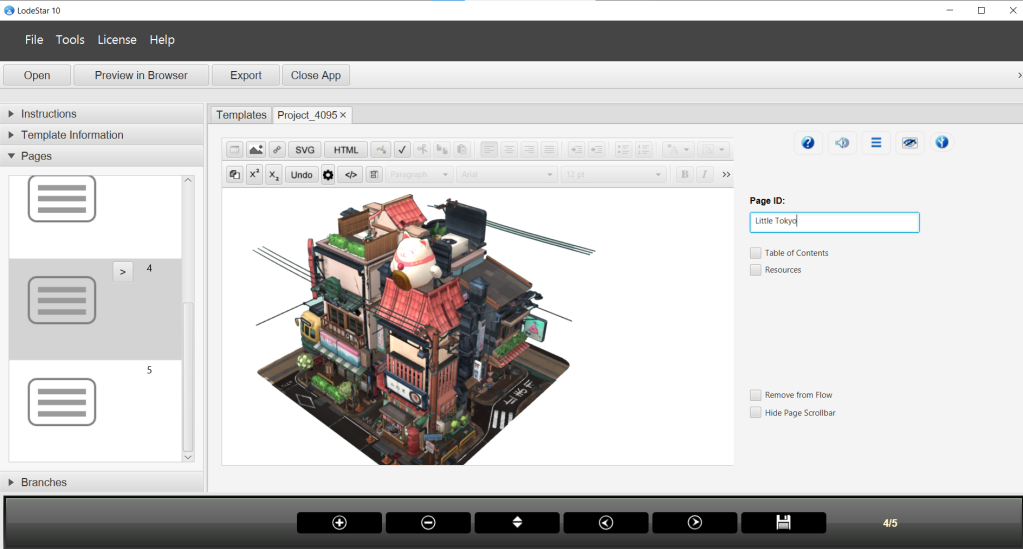

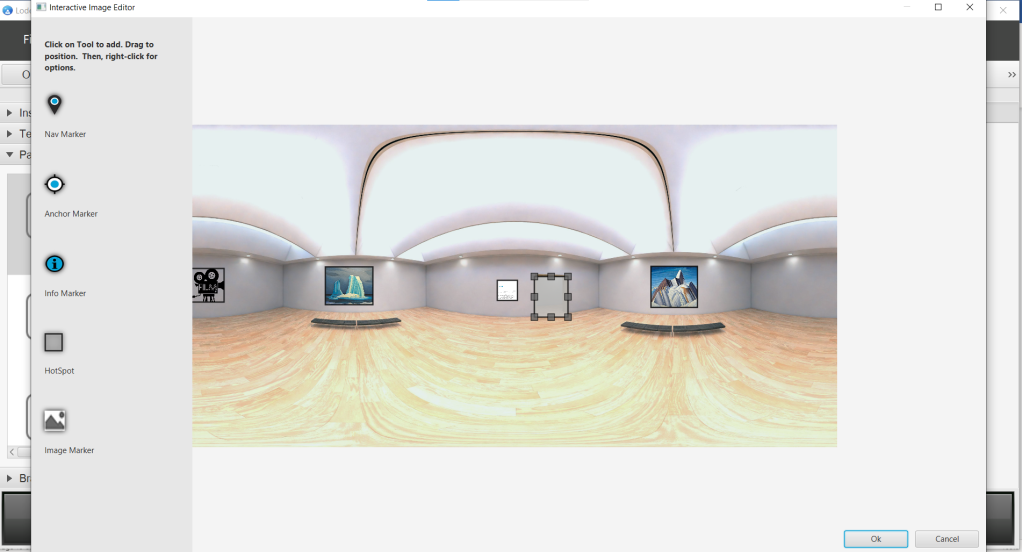

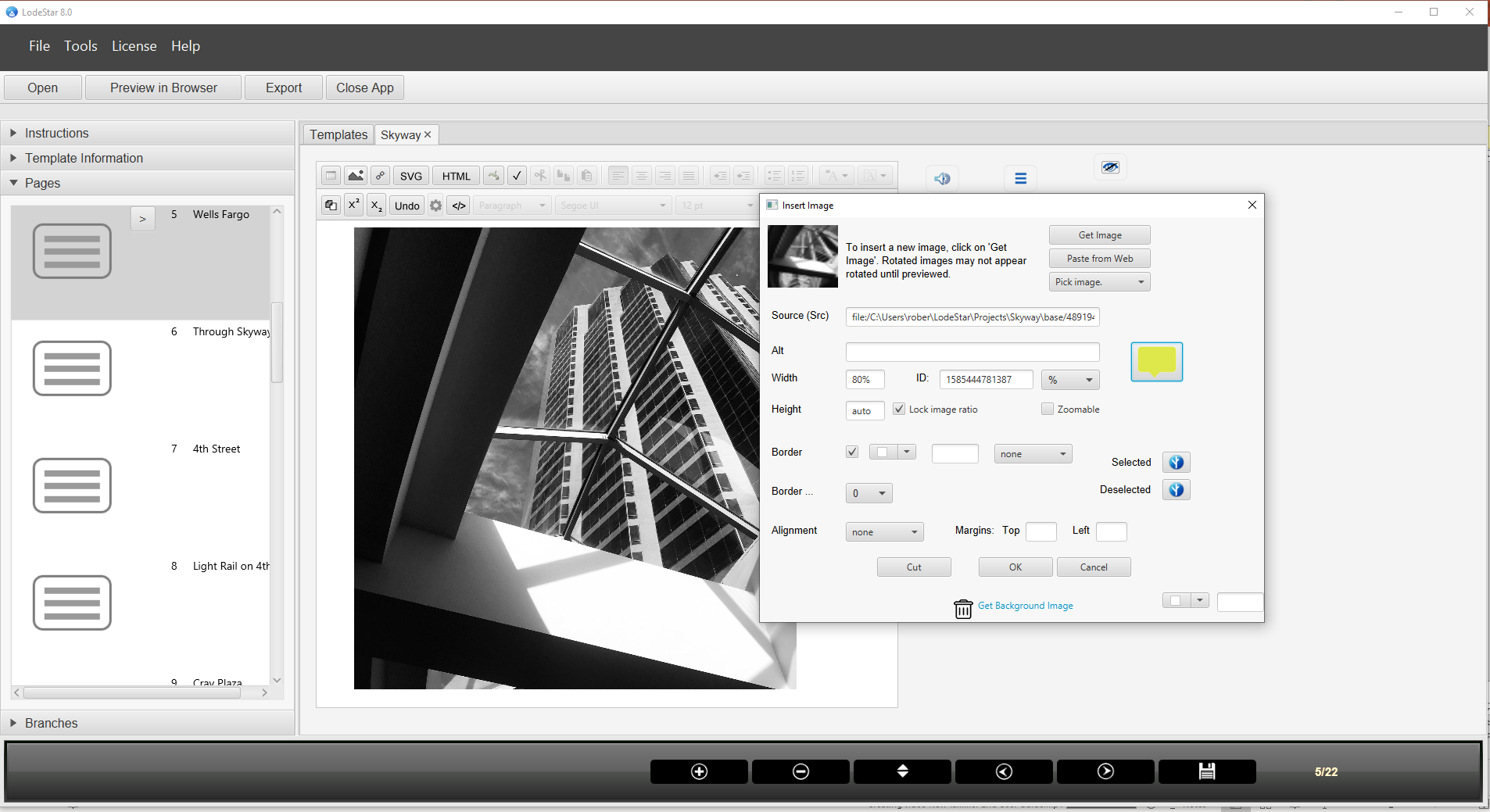

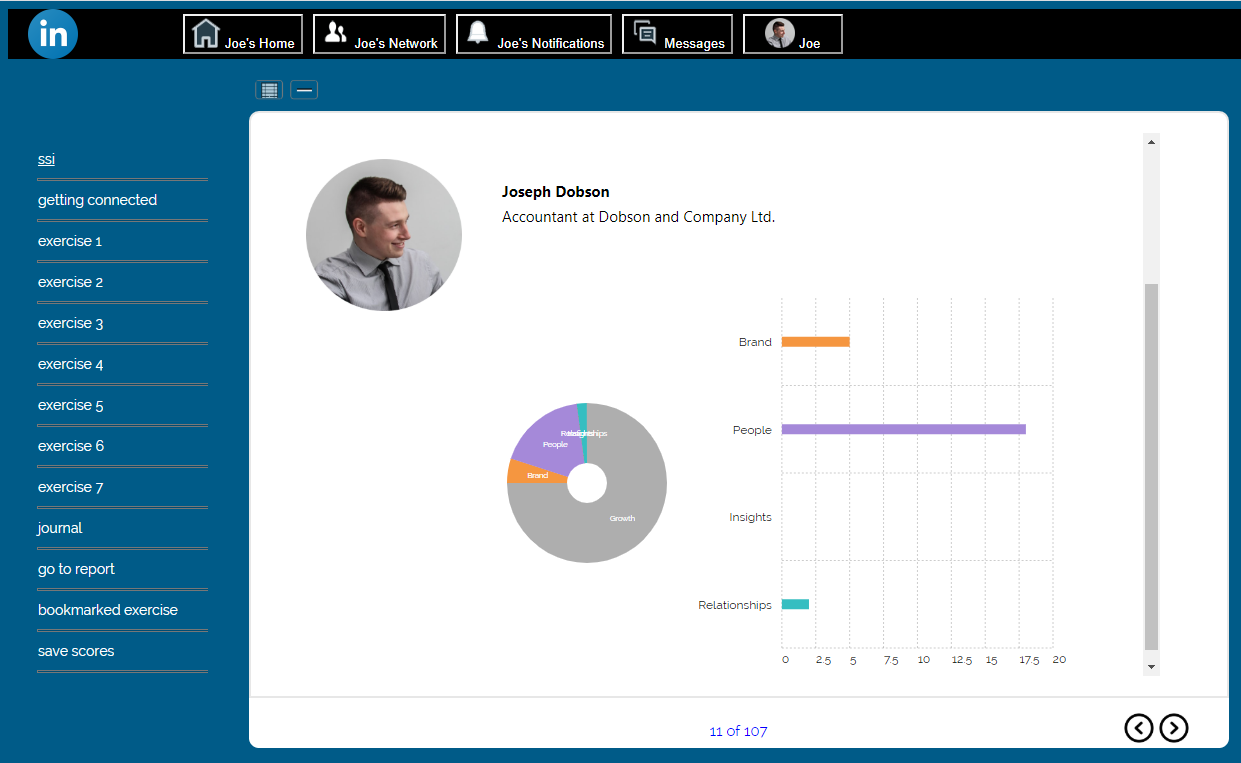

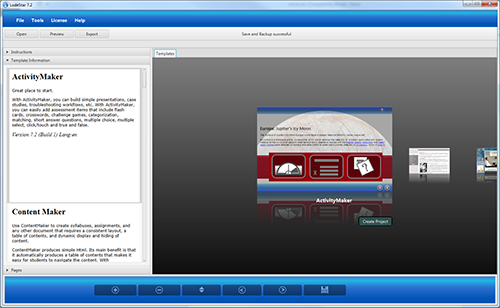

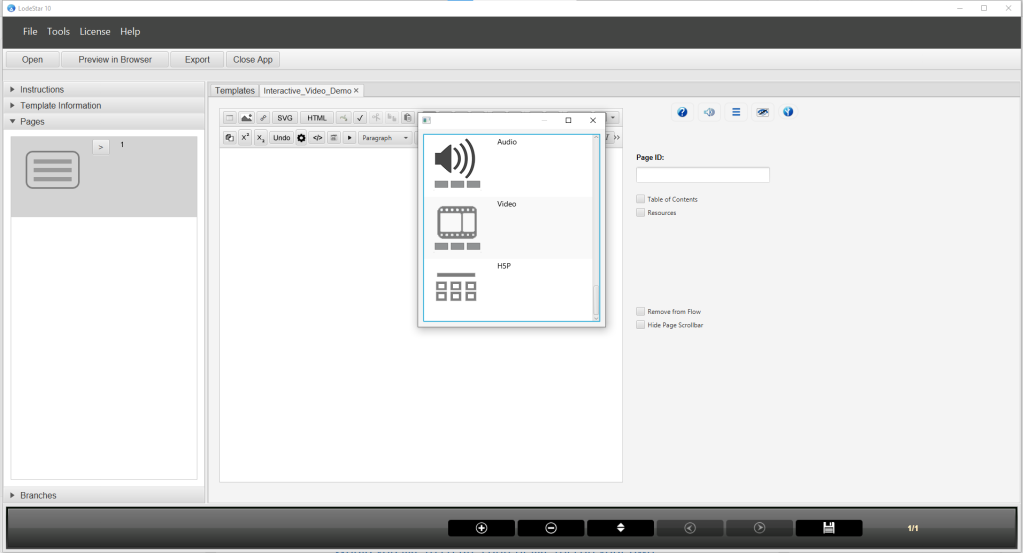

So let’s see what we can do with the Interactive Video Activity in the LodeStar Authoring tool. In LodeStar 10 Build 14 (or later), I selected the ActivityMaker template, created a new page and then clicked on the Widgets sprocket in the HTML editor.

Since LodeStar 10 Build 14, the list of widgets includes the H5P widget.

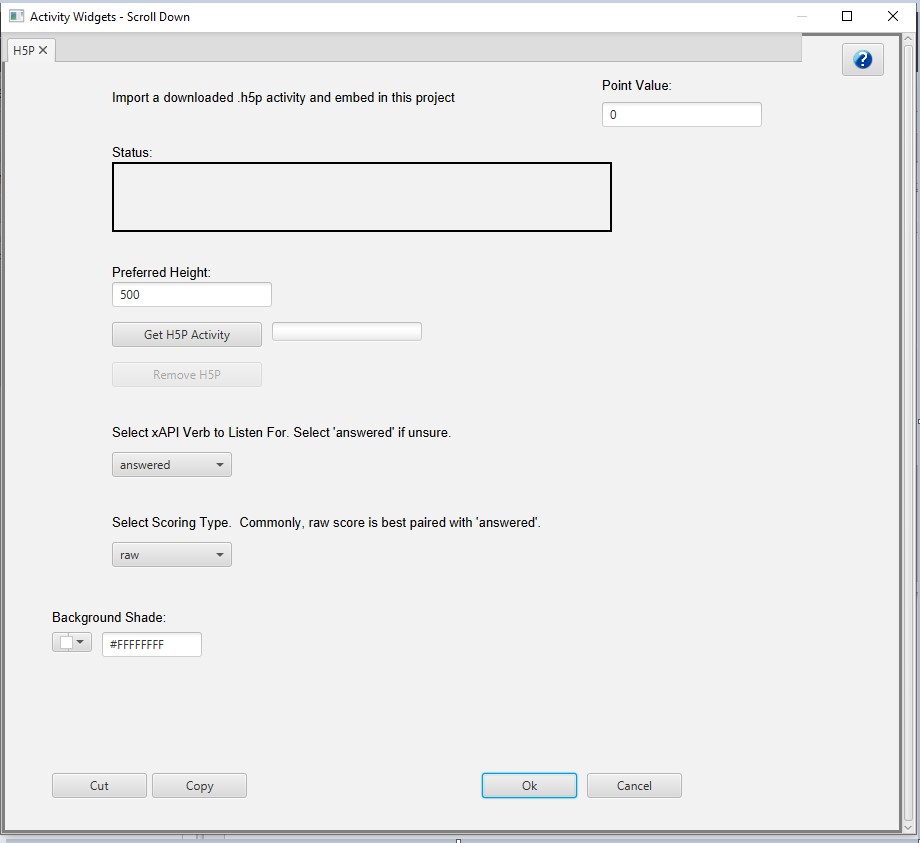

I select the widget and get this dialog:

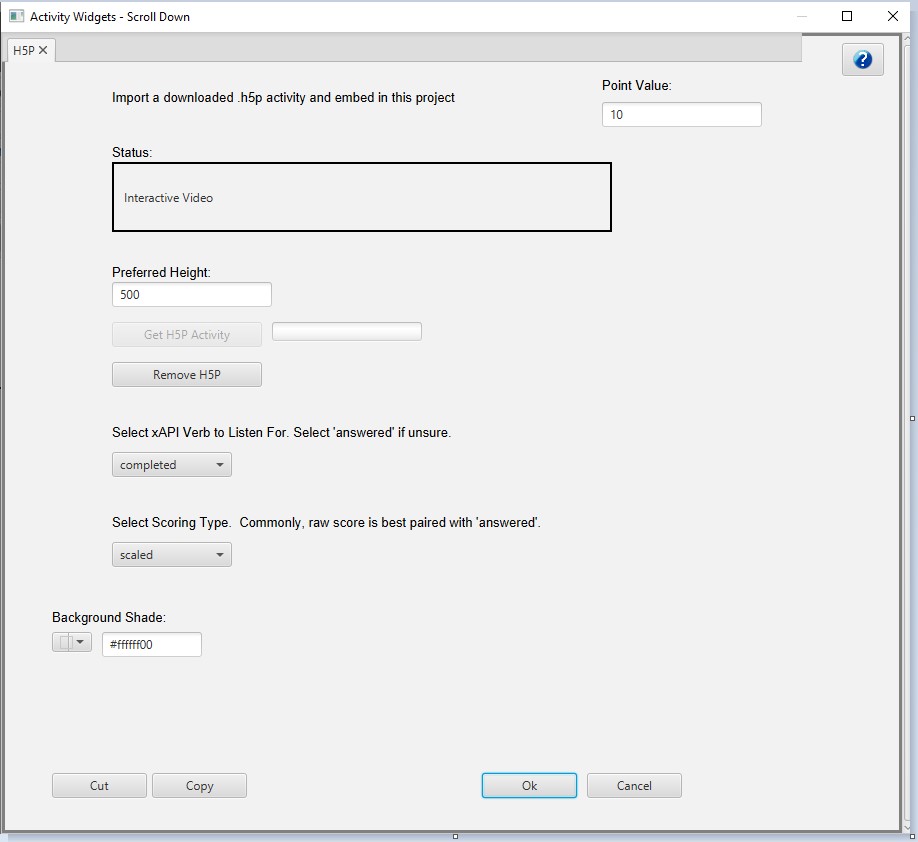

The H5P widget dialog enables me to import a downloaded .h5p activity. I click the ‘Get H5P Activity’ button and select the previously downloaded interactive video activity.

LodeStar then extracts the contents of the .h5p file and reads the list of dependencies in the h5p.json file. It then transfers all of the needed dependencies into a common library from which multiple H5P activities can draw. As a result, shared libraries are stored only once, and only the files that are actually needed are added to the LodeStar content package.
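The shared-library idea can be sketched as a graph walk: start from the dependencies listed in each activity's h5p.json, follow the `preloadedDependencies` of each library's library.json, skip `editorDependencies` (playback only), and merge the results so that a library used by several activities is stored once. This is a hypothetical Python illustration of the technique, not LodeStar's code; the two demo activities loosely mirror the accordion and sorting examples earlier in this article, and their machine names and version numbers are placeholders.

```python
from collections import deque


def dep(name: str, major: int, minor: int) -> dict:
    """Dependency entry in the shape used by h5p.json and library.json."""
    return {"machineName": name, "majorVersion": major, "minorVersion": minor}


def folder(d: dict) -> str:
    """Library folder name, e.g. 'H5P.JoubelUI-1.3'."""
    return f'{d["machineName"]}-{d["majorVersion"]}.{d["minorVersion"]}'


def runtime_libraries(manifest: dict, library_json: dict[str, dict]) -> set[str]:
    """Breadth-first walk of playback dependencies, starting from h5p.json."""
    needed: set[str] = set()
    queue = deque(folder(d) for d in manifest.get("preloadedDependencies", []))
    while queue:
        name = queue.popleft()
        if name in needed:
            continue
        needed.add(name)
        # Follow preloadedDependencies only; editorDependencies are ignored
        # because only playback code is kept.
        for d in library_json.get(name, {}).get("preloadedDependencies", []):
            queue.append(folder(d))
    return needed


def common_library(activities: list[tuple[dict, dict[str, dict]]]) -> set[str]:
    """Union of every activity's libraries; shared libraries appear once."""
    shared: set[str] = set()
    for manifest, library_json in activities:
        shared |= runtime_libraries(manifest, library_json)
    return shared


accordion = ({"preloadedDependencies": [dep("H5P.Accordion", 1, 0),
                                        dep("H5P.AdvancedText", 1, 1),
                                        dep("FontAwesome", 4, 5)]}, {})
sorting = ({"preloadedDependencies": [dep("H5P.SortParagraphs", 0, 11)]}, {
    "H5P.SortParagraphs-0.11": {
        "preloadedDependencies": [dep("H5P.Question", 1, 5), dep("H5P.JoubelUI", 1, 3)],
        "editorDependencies": [dep("H5PEditor.VerticalTabs", 1, 3)],
    },
    "H5P.JoubelUI-1.3": {
        "preloadedDependencies": [dep("H5P.Transition", 1, 0), dep("FontAwesome", 4, 5)],
    },
})

shared = common_library([accordion, sorting])
print(len(shared))  # → 7 (FontAwesome-4.5 is stored once)
```

A set keyed on folder name is what makes the de-duplication automatic: both activities need FontAwesome, but it enters the common library only once.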

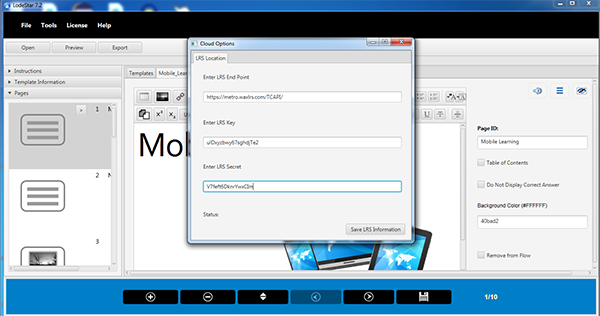

Once LodeStar has imported the .h5p activity, I set the following properties as needed:

- Point Value: 10

- Preferred Height: 500

- xAPI Verb: completed

- Scoring Type: scaled

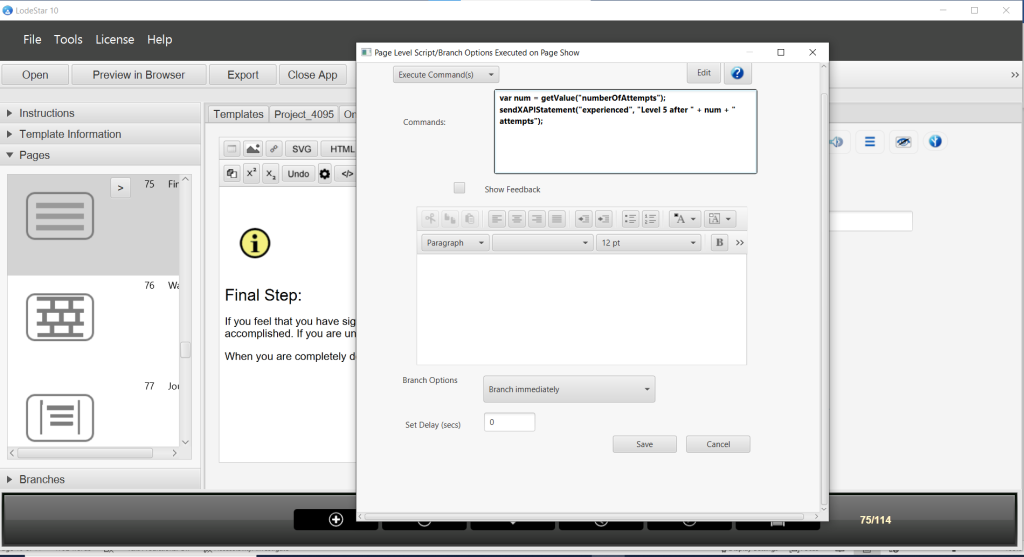

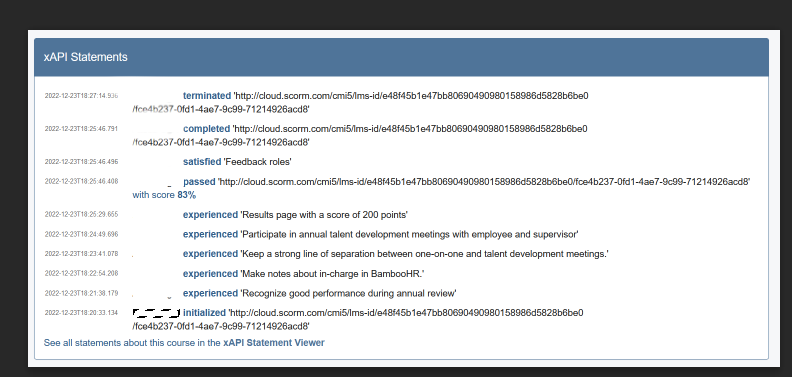

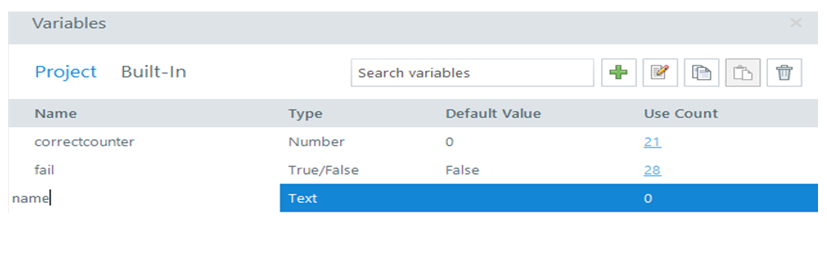

Selecting the correct xAPI verb and scoring type needs a little explanation. First, note that all xAPI statements generated by the H5P activity are captured and forwarded to the Learning Record Store.

In addition, for scoring purposes, LodeStar listens for xAPI events that use the verbs ‘answered’ and ‘completed’ and integrates that performance data into its own reporting mechanism.

For each of these verbs there are two scoring methods: raw (the raw score) or scaled (a proportion from 0 to 1). If raw is chosen, the raw score is added to the accumulated points. If scaled is chosen, the activity is awarded that proportion of its total points; for example, a scaled score of 0.8 on a 10-point activity earns 8 points.
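The scoring rules can be sketched in a few lines of Python. This is a hypothetical model, not LodeStar's implementation: `WidgetSettings` stands in for the Point Value, xAPI Verb and Scoring Type properties (the appendix appears to call the point value ‘Total Points’), verbs are matched on the last segment of the ADL verb IRI, and in scaled mode the sketch assumes the most recent matching statement wins, a detail the description above does not specify. The demo statements replay the appendix scenarios: five answered questions with question 3 wrong, followed by a Finished statement reporting 4 of 5.

```python
from dataclasses import dataclass


@dataclass
class WidgetSettings:
    """Hypothetical stand-in for the H5P widget properties in LodeStar."""
    point_value: float   # Point Value property
    xapi_verb: str       # "answered" or "completed"
    scoring_type: str    # "raw" or "scaled"


def verb_name(statement: dict) -> str:
    """Short verb name, e.g. 'answered' from .../expapi/verbs/answered."""
    return statement["verb"]["id"].rstrip("/").rsplit("/", 1)[-1]


def score_activity(statements: list[dict], settings: WidgetSettings) -> float:
    """Points earned from the statements whose verb matches the setting."""
    matching = [s for s in statements
                if verb_name(s) == settings.xapi_verb
                and "score" in s.get("result", {})]
    if settings.scoring_type == "raw":
        # Raw: each matching statement's raw score is added to the total.
        return sum(s["result"]["score"].get("raw", 0) for s in matching)
    if settings.scoring_type == "scaled":
        # Scaled: a 0..1 proportion of the point value (latest match assumed).
        if not matching:
            return 0.0
        return matching[-1]["result"]["score"].get("scaled", 0) * settings.point_value
    raise ValueError(f"unknown scoring type: {settings.scoring_type!r}")


def statement(verb: str, raw: int, max_score: int) -> dict:
    """Minimal statement carrying only a verb IRI and a score."""
    return {"verb": {"id": f"http://adlnet.gov/expapi/verbs/{verb}"},
            "result": {"score": {"raw": raw, "max": max_score,
                                 "scaled": raw / max_score}}}


# Questions 1-5 (question 3 wrong), then the Finished button.
statements = [statement("answered", r, 1) for r in (1, 1, 0, 1, 1)]
statements.append(statement("completed", 4, 5))

print(score_activity(statements, WidgetSettings(5, "answered", "raw")))      # → 4
print(score_activity(statements, WidgetSettings(10, "completed", "scaled")))  # → 8.0
```

Changing only the verb and scoring type moves the Finished statement from contributing nothing (answered, raw) to carrying the whole score (completed, scaled).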

Appendix 1 dives deeper into two scenarios that capture H5P scores differently.

Benefits

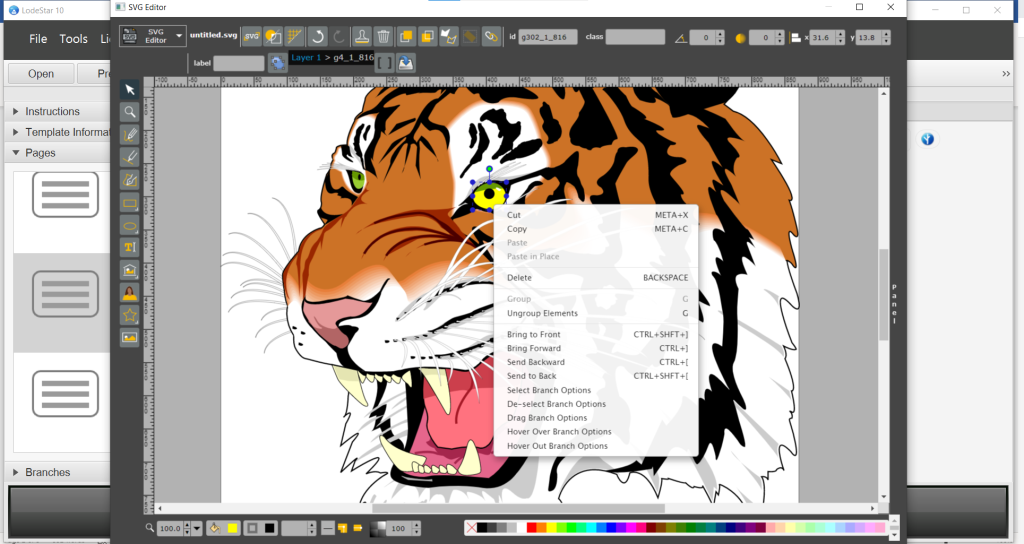

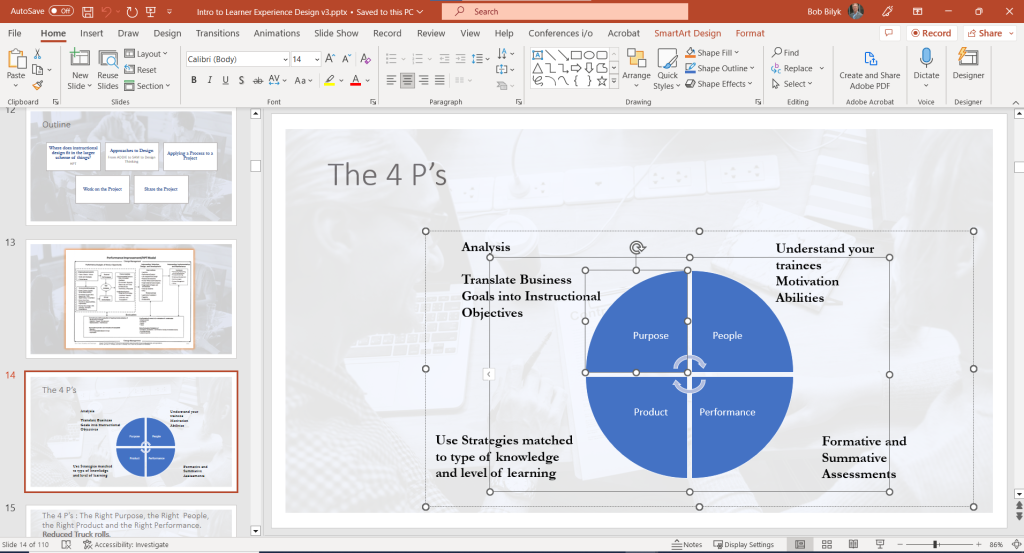

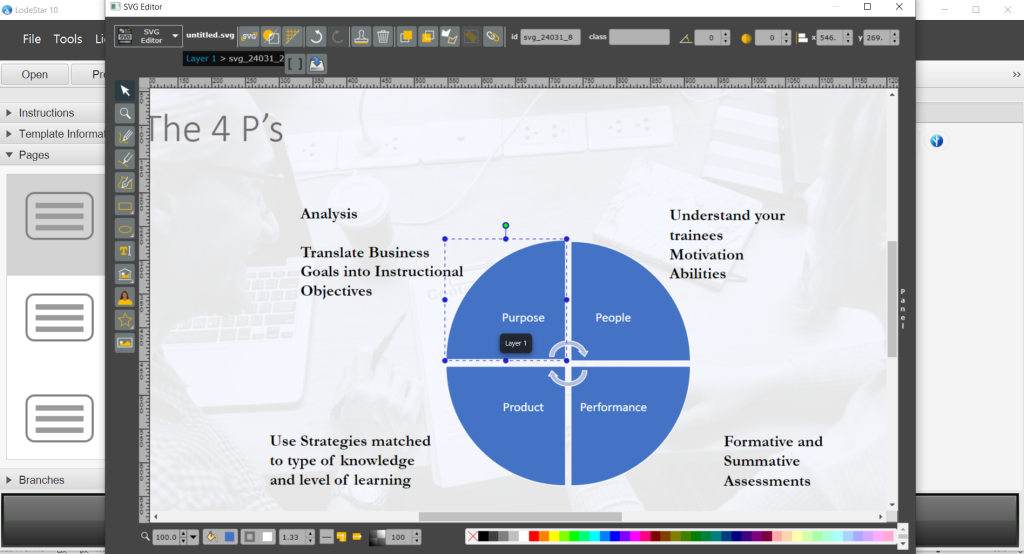

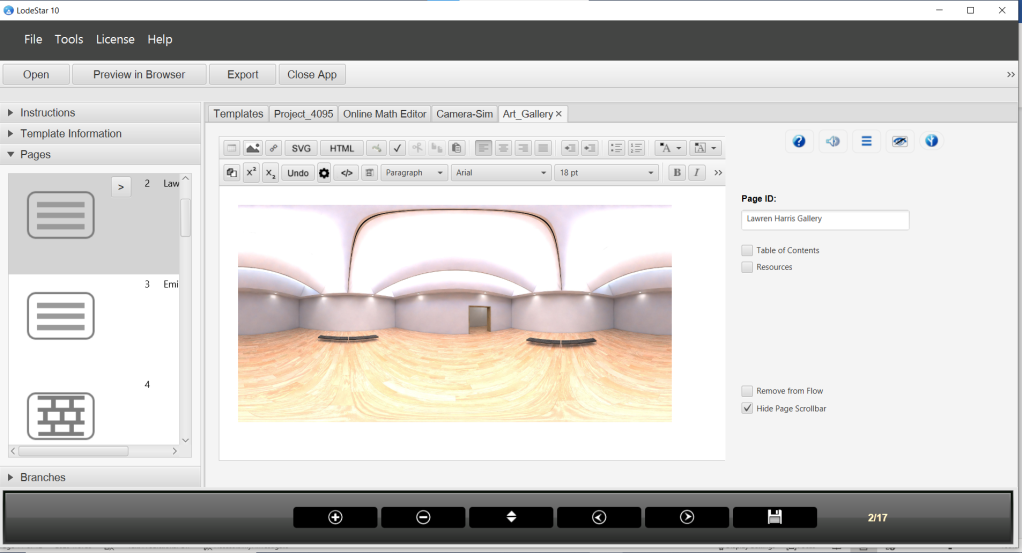

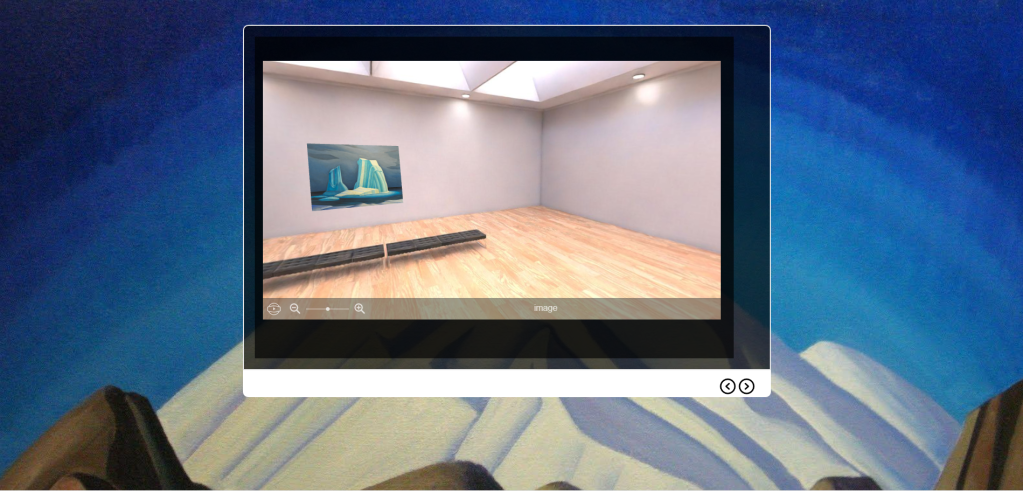

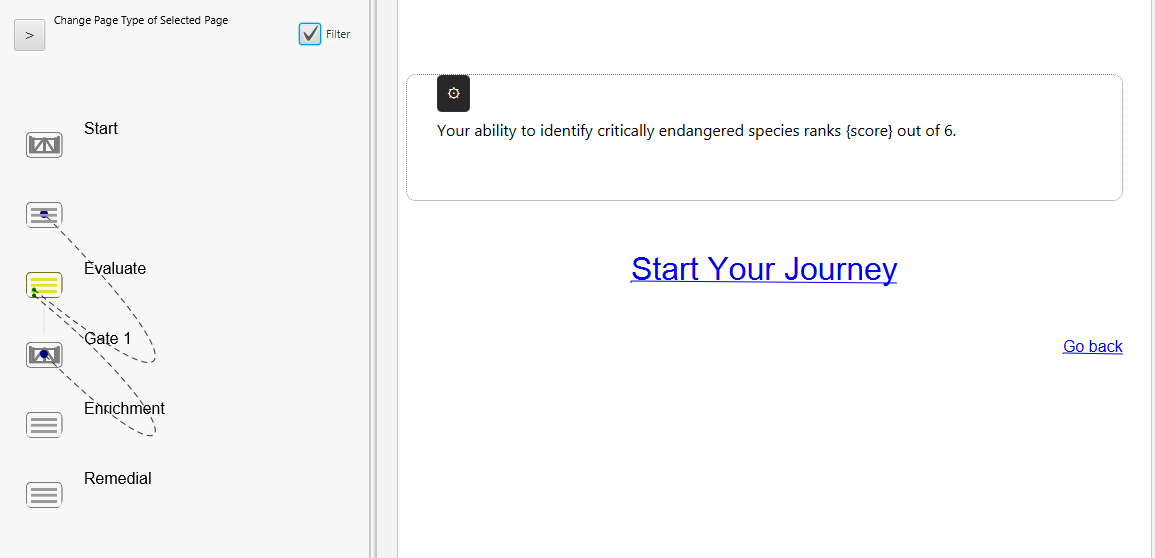

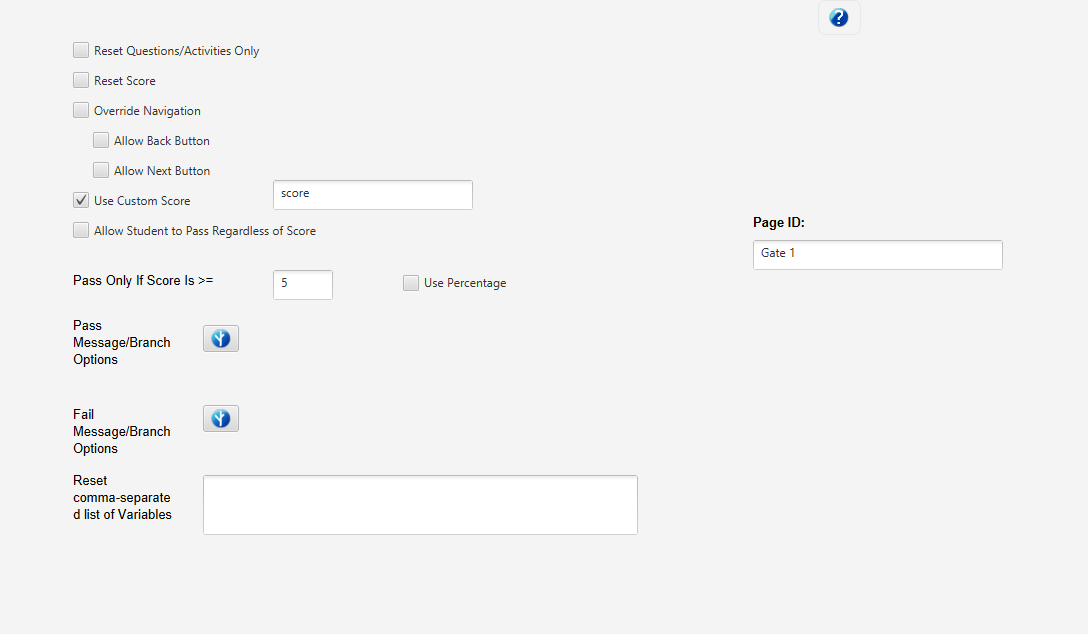

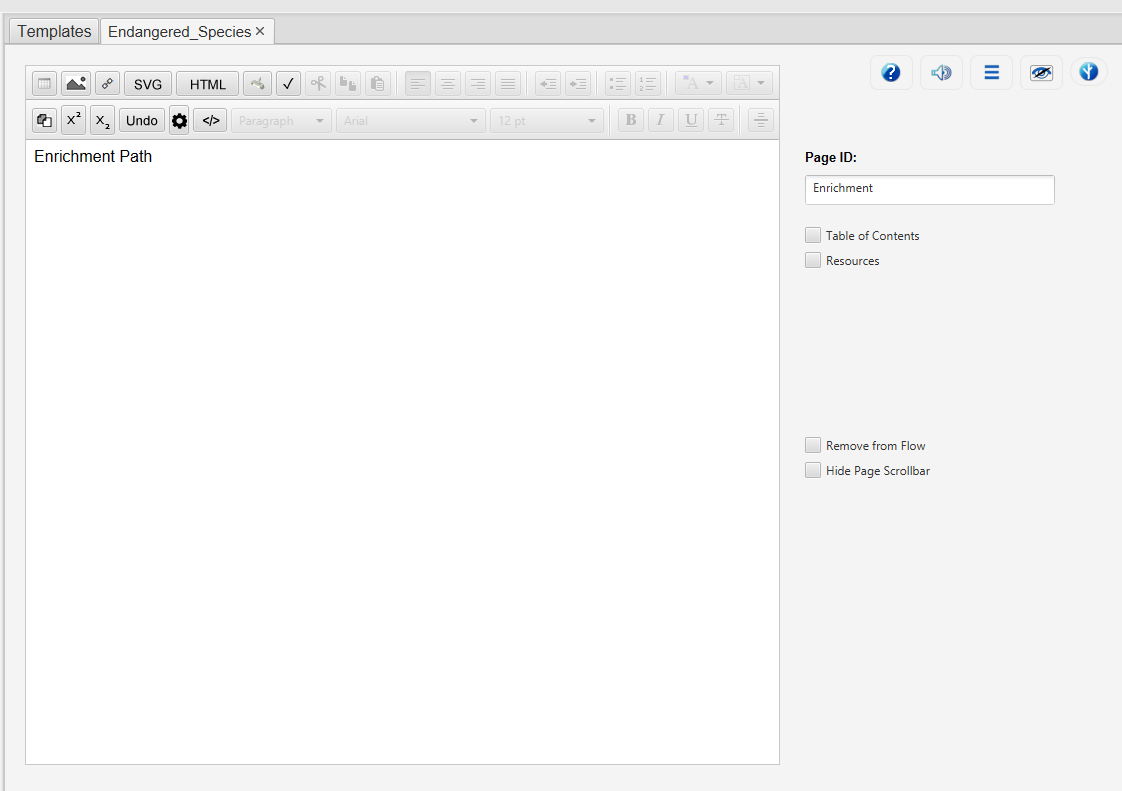

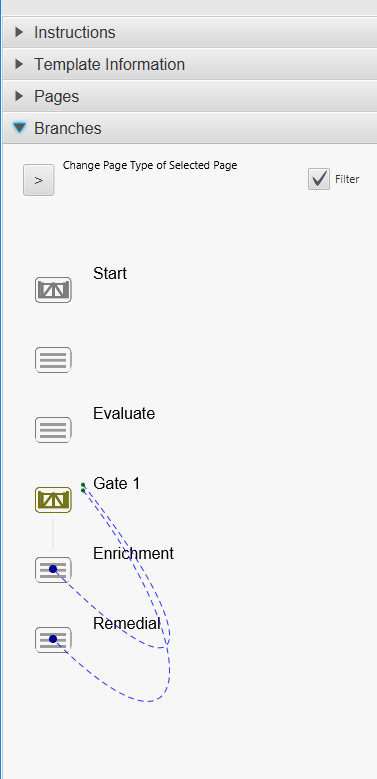

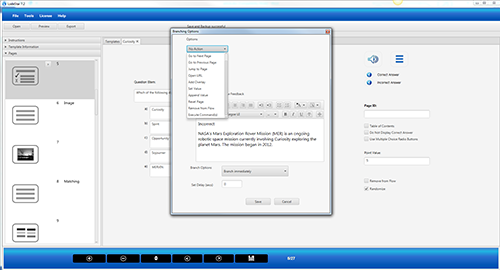

The first benefit is breadth. H5P offers a wide variety of activities, and LodeStar offers a rich framework that supports branching, SVG graphics, animation, a library of functions, and a variety of page types and widgets. Combined, the two technologies give authors more than either offers alone.

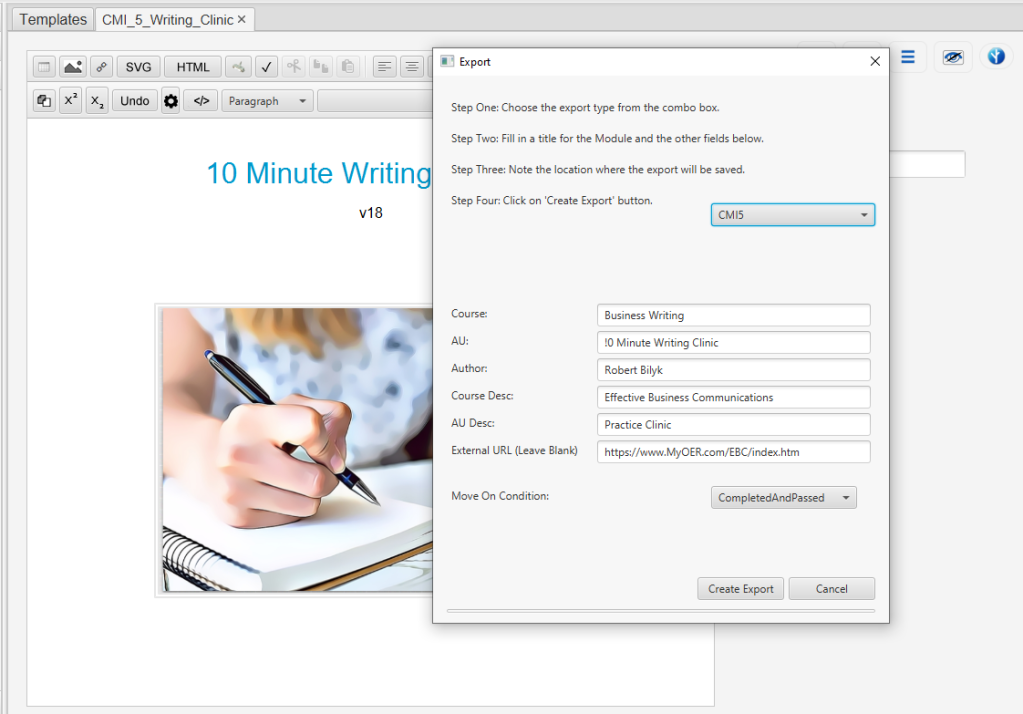

A second benefit applies to authors whose institutions do not support an LTI connection between H5P.com and their learning management system. As of this writing, H5P does not support SCORM. With LodeStar, an author can import an H5P activity and export the entire package in any flavor of SCORM as well as cmi5.

Lastly, a LodeStar activity with imported H5P content can be placed in a repository and made freely available to Open Educational Resources (OER) libraries without depending on H5P.com.

Conclusion

LodeStar’s import and playback of H5P activities expands its repertoire of activities. Just as importantly, authors benefit from placing H5P activities in LodeStar’s rich framework, enhanced by page types, widgets, LodeStar script, media, animation, themes, designs, characters, backgrounds and more.

We look forward to future collaborations and further exploring how LodeStar Learning can leverage the openness of H5P for the benefit of students.

Appendix 1

Here are the two typical scoring scenarios, involving an activity with 5 questions and a ‘Finished’ button:

Scenario 1:

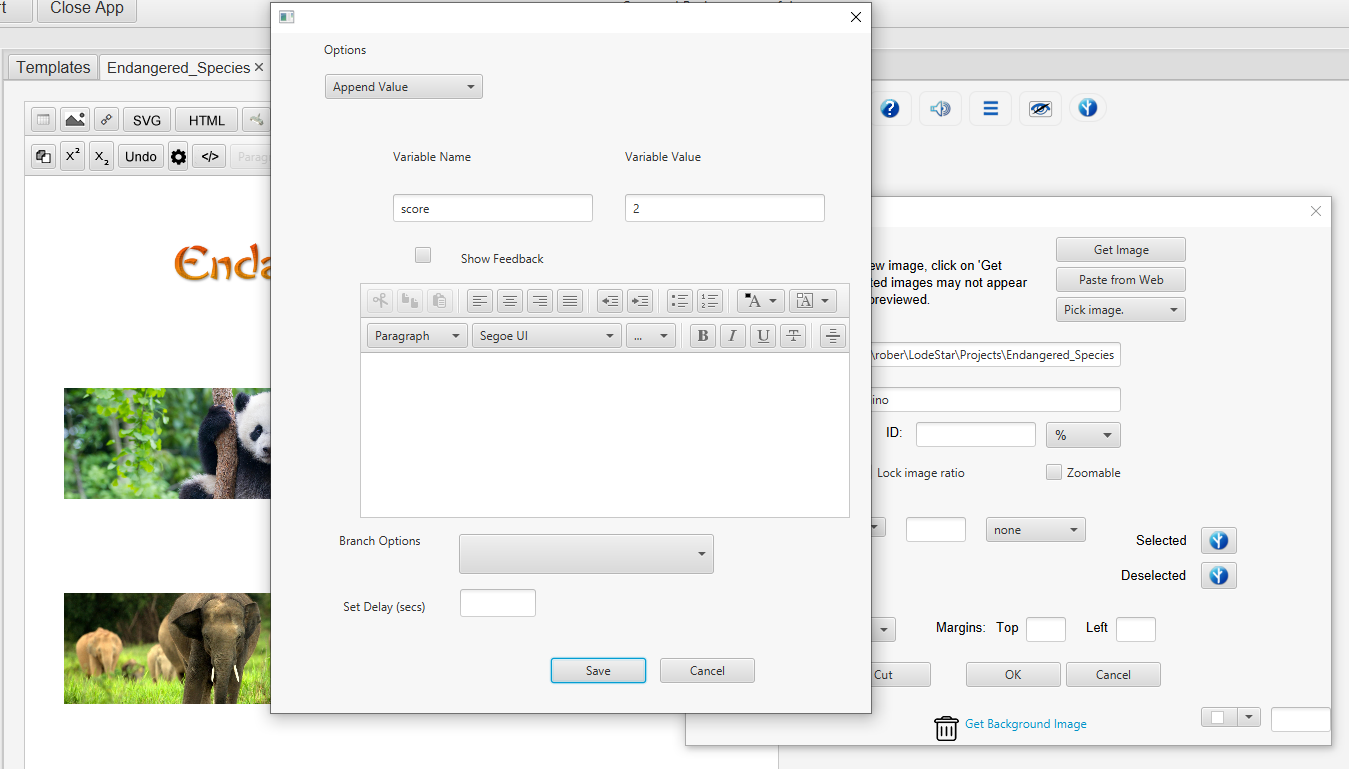

(In LodeStar the verb and scoring type are set to Answered – Raw)

Total Points is set to 5.

If the learner answers every question correctly except question 3, LodeStar records the following:

Question 1 – 1 point ✓

Question 2 – 1 point ✓

Question 3 – 0 points ❌

Question 4 – 1 point ✓

Question 5 – 1 point ✓

Finished – 0 points (The Finished button generally sends an xAPI statement with the ‘completed’ verb. ‘Completed’ does not match ‘answered’, which is what LodeStar is listening for.)

Score is 4/5

Scenario 2:

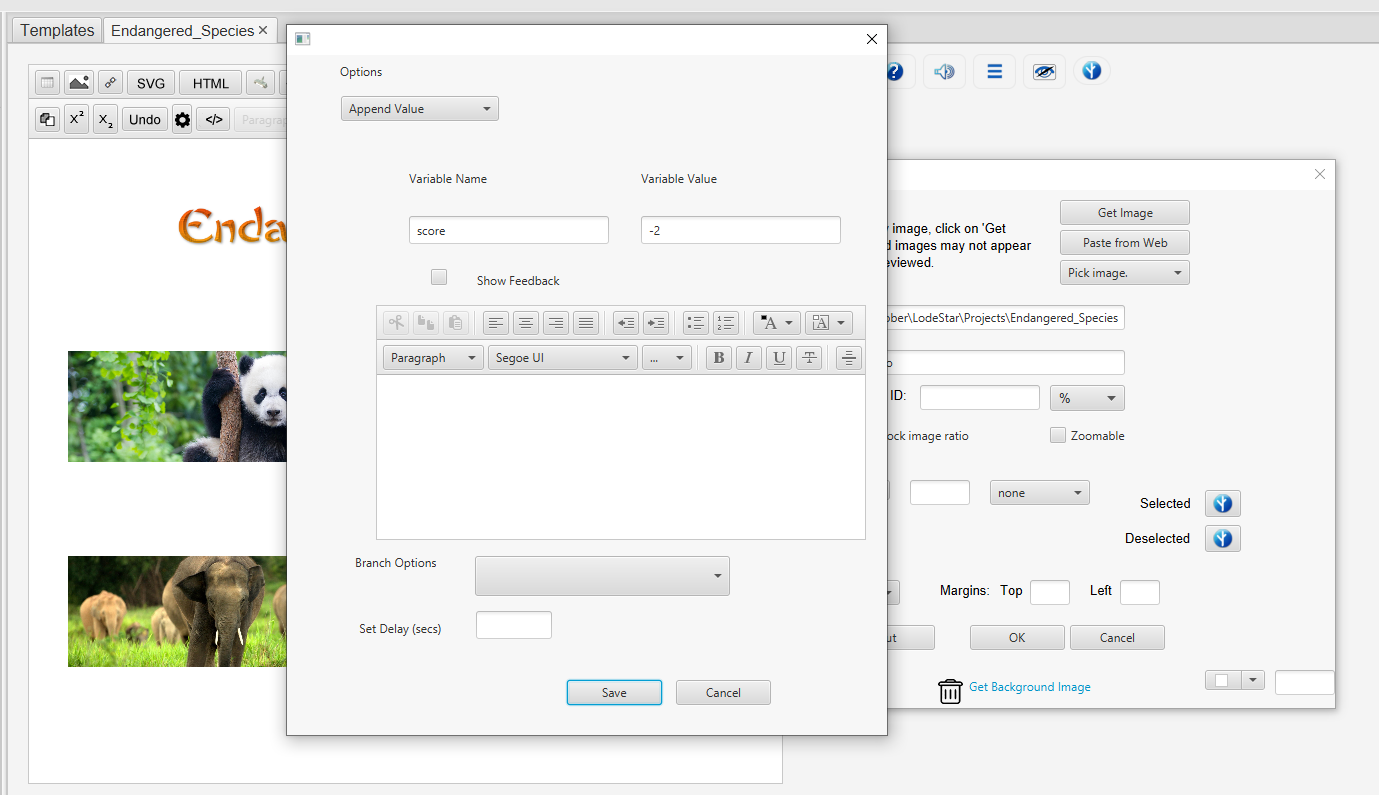

(In LodeStar the verb and scoring type are set to Completed – Scaled)

Total Points is set to 10.

If the learner answers every question correctly except question 3, LodeStar records the following:

Question 1 – 0 points ✓ (Individual items generally send an xAPI statement with the ‘answered’ verb. ‘Answered’ does not match ‘completed’, which is what LodeStar is listening for.)

Question 2 – 0 points ✓

Question 3 – 0 points ❌

Question 4 – 0 points ✓

Question 5 – 0 points ✓

Finished – 4 points (The Finished button generally sends an xAPI statement with the ‘completed’ verb. ‘Completed’ matches the ‘completed’ setting in the LodeStar scoring method.)

4 points out of 5 is a scaled score of 0.8, and 0.8 multiplied by 10 total points is 8.

Score is 8/10
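The two scoring rules in these scenarios can be summarized as follows, where $M$ is the set of xAPI statements whose verb matches the LodeStar setting, $\text{raw}_s$ and $\text{scaled}_s$ are the scores a statement $s$ reports, and $P$ is the Total Points value. In the scaled case, $s$ is the matching ‘completed’ statement sent by the Finished button.

```latex
\begin{aligned}
\text{Raw (Scenario 1):}\quad & \text{score} = \sum_{s \in M} \text{raw}_s = 1 + 1 + 0 + 1 + 1 = 4, && P = 5\\
\text{Scaled (Scenario 2):}\quad & \text{score} = \text{scaled}_s \times P = \tfrac{4}{5} \times 10 = 0.8 \times 10 = 8, && P = 10
\end{aligned}
```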

Reach out to supportteam@LodeStarLearning.com with any questions.